Cloudflare has confirmed that yesterday's massive service outage was not caused by a security incident and that no data has been lost.

The issue has been largely mitigated. It started at 17:52 UTC yesterday, when the Workers KV (Key-Value) system went completely offline, causing widespread service losses across multiple edge computing and AI services.

Workers KV is a globally distributed, eventually consistent key-value store used by Cloudflare Workers, the company's serverless computing platform. It is a fundamental piece of many Cloudflare services, and a failure can cause cascading issues across many components.

The disruption also impacted other services used by millions, most notably Google Cloud Platform.

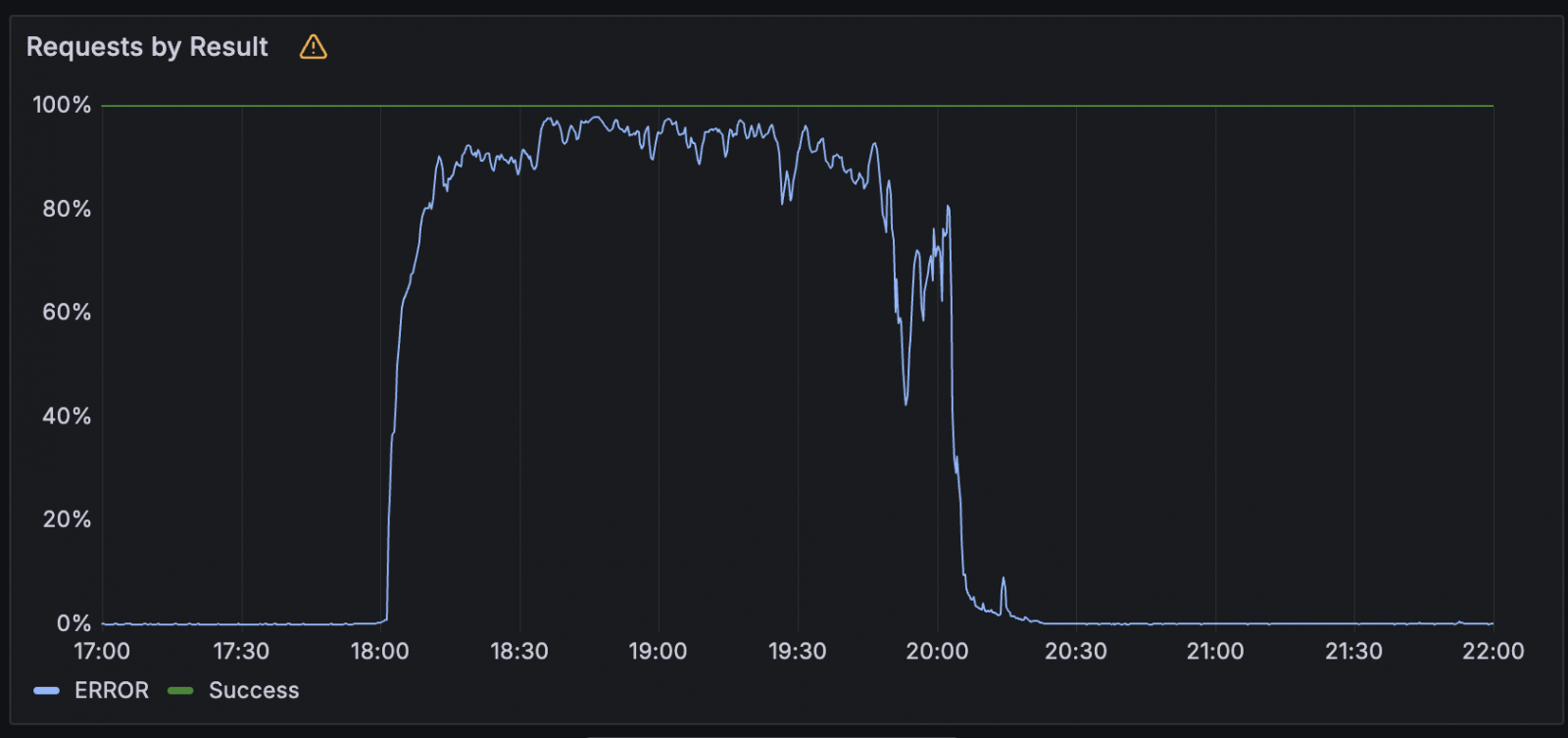

Source: Cloudflare

In a post-mortem, Cloudflare explains that the outage lasted almost 2.5 hours and that the root cause was a failure in the storage infrastructure underlying Workers KV, triggered by an outage at a third-party cloud provider.

"The cause of this outage was due to a failure in the underlying storage infrastructure used by our Workers KV service, which is a critical dependency for many Cloudflare products and relied upon for configuration, authentication, and asset delivery across the affected services," Cloudflare says.

"Part of this infrastructure is backed by a third-party cloud provider, which experienced an outage today and directly impacted the availability of our KV service."

Cloudflare has detailed the incident's impact on each service:

- Workers KV – experienced a 90.22% failure rate due to backend storage unavailability, affecting all uncached reads and writes.

- Access, WARP, Gateway – all suffered critical failures in identity-based authentication, session handling, and policy enforcement due to their reliance on Workers KV, with WARP unable to register new devices and Gateway proxying and DoH queries disrupted.

- Dashboard, Turnstile, Challenges – experienced widespread login and CAPTCHA verification failures, with a token reuse risk introduced by activating a kill switch on Turnstile.

- Browser Isolation & Browser Rendering – failed to initiate or maintain link-based sessions and browser rendering tasks due to cascading failures in Access and Gateway.

- Stream, Images, Pages – experienced major functional breakdowns: Stream playback and live streaming failed, image uploads dropped to 0% success, and Pages builds and serving peaked at ~100% failure.

- Workers AI & AutoRAG – were completely unavailable due to their dependence on KV for model configuration, routing, and indexing functions.

- Durable Objects, D1, Queues – services built on the same storage layer as KV suffered up to 22% error rates or complete unavailability for message queuing and data operations.

- Realtime & AI Gateway – faced near-total service disruption due to an inability to retrieve configuration from Workers KV, with Realtime TURN/SFU and AI Gateway requests heavily impacted.

- Zaraz & Workers Assets – saw full or partial failures in loading or updating configurations and static assets, though end-user impact was limited in scope.

- CDN, Workers for Platforms, Workers Builds – experienced increased latency and regional errors in some locations, with 100% of new Workers builds failing during the incident.

In response to this outage, Cloudflare says it will accelerate several resilience-focused changes, primarily eliminating its reliance on a single third-party cloud provider for Workers KV backend storage.

Gradually, KV's central store will be migrated to Cloudflare's own R2 object storage to reduce the external dependency.

Cloudflare also plans to implement cross-service safeguards and develop new tooling to progressively restore services during storage outages, preventing traffic surges that could overwhelm recovering systems and cause secondary failures.
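Cloudflare has not said how this restoration tooling will work. As a rough illustration only, the sketch below assumes a simple time-based linear ramp: once a backend is reported healthy, a gate admits a growing fraction of requests and sheds the rest, so a backlog of retrying clients does not hit the recovering system all at once. The `TrafficRamp` class, its parameters, and the use of probabilistic admission are hypothetical choices, not Cloudflare's design.

```python
import random
import time
from typing import Callable, Optional


class TrafficRamp:
    """Hypothetical admission gate for a recovering backend (illustrative only).

    The admitted fraction grows linearly from `start_fraction` to 1.0 over
    `ramp_seconds` after begin() is called; before that, nothing is admitted.
    """

    def __init__(self, ramp_seconds: float, start_fraction: float = 0.05,
                 clock: Callable[[], float] = time.monotonic,
                 rng: Optional[random.Random] = None) -> None:
        if ramp_seconds <= 0:
            raise ValueError("ramp_seconds must be positive")
        if not 0.0 <= start_fraction <= 1.0:
            raise ValueError("start_fraction must be in [0, 1]")
        self.ramp_seconds = ramp_seconds
        self.start_fraction = start_fraction
        self.clock = clock  # injectable so the ramp can be tested deterministically
        self.rng = rng if rng is not None else random.Random()
        self.started_at: Optional[float] = None

    def begin(self) -> None:
        """Record the moment the backend is reported healthy again."""
        self.started_at = self.clock()

    def admitted_fraction(self) -> float:
        """Share of requests allowed through right now."""
        if self.started_at is None:
            return 0.0  # backend still down: shed everything
        elapsed = self.clock() - self.started_at
        progress = max(elapsed / self.ramp_seconds, 0.0)
        if progress >= 1.0:
            return 1.0  # ramp complete: full traffic
        return self.start_fraction + (1.0 - self.start_fraction) * progress

    def allow(self) -> bool:
        """Admit a request with probability admitted_fraction()."""
        # random() returns a value in [0.0, 1.0), so 0.0 never admits
        # and 1.0 always admits.
        return self.rng.random() < self.admitted_fraction()


if __name__ == "__main__":
    now = [0.0]
    ramp = TrafficRamp(ramp_seconds=600, clock=lambda: now[0])
    ramp.begin()
    for t in (0.0, 150.0, 300.0, 600.0):
        now[0] = t
        print(f"t={t:>5.0f}s admitted fraction={ramp.admitted_fraction():.3f}")
```

In this sketch, a request for which `allow()` returns `False` would be rejected by the caller (for example with a retry hint) rather than forwarded. A linear ramp is the simplest choice; a production system might instead adjust the rate based on the backend's observed error rate or latency.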
