Introduction – What Makes Nvidia GH200 the Star of 2026?

Quick Summary: What is the Nvidia GH200 and why does it matter in 2026? – The Nvidia GH200 is a hybrid superchip that merges a 72‑core Arm CPU (Grace) with a Hopper/H200 GPU using NVLink‑C2C. This integration creates up to 624 GB of unified memory accessible to both CPU and GPU, enabling memory‑bound AI workloads like long‑context LLMs, retrieval‑augmented generation (RAG) and exascale simulations. In 2026, as models grow larger and more complex, the GH200’s memory‑centric design delivers performance and cost efficiency not achievable with conventional GPU cards. Clarifai offers enterprise‑grade GH200 hosting with smart autoscaling and cross‑cloud orchestration, making this technology accessible for developers and businesses.

Artificial intelligence is evolving at breakneck speed. Model sizes are growing from millions to trillions of parameters, and generative applications such as retrieval‑augmented chatbots and video synthesis require massive key–value caches and embeddings. Traditional GPUs like the A100 or H100 provide high compute throughput but can become bottlenecked by memory capacity and data movement. Enter the Nvidia GH200, often nicknamed the Grace Hopper superchip. Instead of connecting a CPU and GPU through a slow PCIe bus, the GH200 places them on the same package and links them with NVLink‑C2C, a high‑bandwidth, low‑latency interconnect that delivers 900 GB/s of bidirectional bandwidth. This architecture lets the GPU access the CPU’s memory directly, resulting in a unified memory pool of up to 624 GB (144 GB of HBM3e on the GPU plus 480 GB of LPDDR5X on the CPU; the 96 GB HBM3 variant yields 576 GB).

This guide offers a detailed look at the GH200: its architecture, performance, ideal use cases, deployment models, comparison with other GPUs (H100, H200, B200), and practical guidance on when and how to choose it. Along the way we will highlight Clarifai’s compute solutions that leverage GH200 and share best practices for deploying memory‑intensive AI workloads.

Quick Digest: How This Guide Is Structured

- Understanding the GH200 Architecture – We examine how the hybrid CPU–GPU design and unified memory system work, and why HBM3e matters.

- Benchmarks & Cost Efficiency – See how GH200 performs in inference and training compared with H100/H200, and the effect on cost per token.

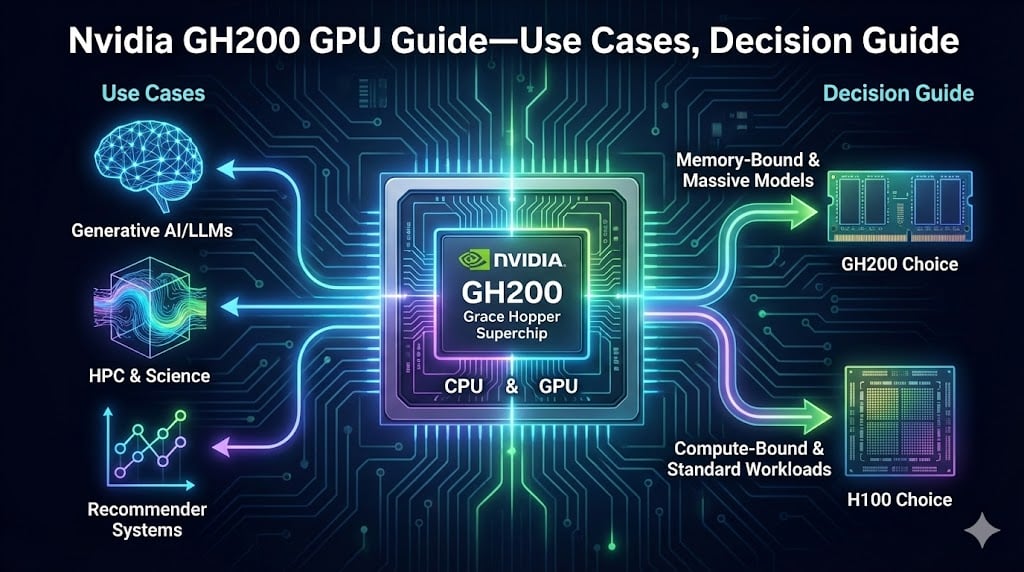

- Use Cases & Workload Fit – Learn which AI and HPC workloads benefit from the superchip, including RAG, LLMs, graph neural networks and exascale simulations.

- Deployment Models & Ecosystem – Explore on‑premises DGX systems, hyperscale cloud instances, specialist GPU clouds, and Clarifai’s orchestration features.

- Decision Framework – Understand when to choose GH200 vs H100/H200 vs B200/Rubin based on memory, bandwidth, software and budget.

- Challenges & Future Trends – Consider limitations (ARM software, power, latency) and look ahead to HBM3e, Blackwell, Rubin and new supercomputers.

Let’s dive in.

GH200 Architecture and Memory Innovations

Quick Summary: How does the GH200’s architecture differ from traditional GPUs? – Unlike standalone GPU cards, the GH200 integrates a 72‑core Grace CPU and a Hopper/H200 GPU on a single module. The two chips communicate via NVLink‑C2C, which delivers 900 GB/s of bandwidth. The GPU includes 96 GB of HBM3 or 144 GB of HBM3e, while the CPU provides 480 GB of LPDDR5X. NVLink‑C2C allows the GPU to access CPU memory directly, creating a unified memory pool of up to 624 GB. This removes costly data transfers and is key to the GH200’s memory‑centric design.

Hybrid CPU–GPU Fusion

At its core, the GH200 combines a Grace CPU and a Hopper GPU. The CPU features 72 Arm Neoverse V2 cores, delivering high memory bandwidth and energy efficiency. The GPU is based on the Hopper architecture (used in the H100) but may be upgraded to the H200 in newer revisions, adding faster HBM3e memory. NVLink‑C2C is the secret sauce: a cache‑coherent interface that lets both chips share memory coherently at 900 GB/s, roughly 7× faster than PCIe Gen5. This design makes the GH200 effectively a giant APU or system‑on‑chip tailored for AI.
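To make the 7× figure concrete, the sketch below estimates idealised transfer times over each link. The 900 GB/s value comes from the text; the 128 GB/s figure for PCIe Gen5 x16 (bidirectional) is an assumed nominal peak, and the 40 GB buffer is purely hypothetical. Real transfers will see lower effective throughput on both links.

```python
# Nominal peak link bandwidths in GB/s (bidirectional).
NVLINK_C2C_GBPS = 900.0     # figure quoted in the text
PCIE_GEN5_X16_GBPS = 128.0  # assumption: nominal PCIe Gen5 x16 peak


def transfer_seconds(size_gb: float, bandwidth_gbps: float) -> float:
    """Idealised transfer time: buffer size divided by peak bandwidth."""
    if bandwidth_gbps <= 0:
        raise ValueError("bandwidth must be positive")
    return size_gb / bandwidth_gbps


speedup = NVLINK_C2C_GBPS / PCIE_GEN5_X16_GBPS
buffer_gb = 40.0  # hypothetical buffer, e.g. a large KV cache

print(f"NVLink-C2C vs PCIe Gen5 x16: {speedup:.1f}x")
print(f"PCIe Gen5:  {transfer_seconds(buffer_gb, PCIE_GEN5_X16_GBPS):.3f} s")
print(f"NVLink-C2C: {transfer_seconds(buffer_gb, NVLINK_C2C_GBPS):.3f} s")
```

This is a back-of-the-envelope model only; it ignores latency, protocol overhead and contention.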

Unified Memory Pool

Traditional GPU servers rely on discrete memory pools: CPU DRAM and GPU HBM. Data must be copied across the PCIe bus, incurring latency and overhead. The GH200’s unified memory removes this barrier. The Grace CPU brings 480 GB of LPDDR5X memory with 546 GB/s of bandwidth, while the Hopper GPU includes 96 GB of HBM3 delivering 4 000 GB/s. The HBM3e variant increases HBM capacity to 141–144 GB and raises bandwidth to about 4.9 TB/s. Combined with NVLink‑C2C, this provides a shared memory pool of up to 624 GB, so the GPU can keep huge datasets and LLM key–value caches in directly addressable memory instead of repeatedly copying them over PCIe. NVLink is also scalable: NVL2 pairs two superchips to create a node with 288 GB of HBM and 10 TB/s of bandwidth, and the NVLink switch system can connect 256 superchips to act as one giant GPU with 1 exaflop of performance and 144 TB of unified memory.
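The capacity figures above follow from simple addition. The sketch below reproduces them using only the numbers quoted in the text; the helper name is illustrative, and the 144 TB total is assumed to be counted in units of 1 024 GB.

```python
HBM3_GB = 96       # HBM3 variant
HBM3E_GB = 144     # HBM3e variant
LPDDR5X_GB = 480   # Grace CPU memory


def unified_pool_gb(hbm_gb: int, lpddr_gb: int = LPDDR5X_GB) -> int:
    """Total memory addressable by one superchip (HBM plus LPDDR5X)."""
    return hbm_gb + lpddr_gb


print(unified_pool_gb(HBM3_GB))    # 576
print(unified_pool_gb(HBM3E_GB))   # 624

# NVL2: two HBM3e superchips in one node.
print(2 * HBM3E_GB)                # 288 GB of HBM

# DGX GH200: 256 HBM3 superchips behind the NVLink switch system.
total_gb = 256 * unified_pool_gb(HBM3_GB)
print(total_gb / 1024)             # 144.0 (TB, counting 1 TB = 1 024 GB)
```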

HBM3e and Rubin Platform

The GH200 started with HBM3 but is already evolving. The HBM3e revision provides 144 GB of HBM for the GPU, raising HBM capacity by around 50 % and increasing bandwidth from 4 000 GB/s to about 4.9 TB/s. This upgrade helps large models store more key–value pairs and embeddings entirely in on‑package memory. Looking ahead, Nvidia’s Rubin platform (announced 2025) will introduce a new CPU with 88 Olympus cores, 1.8 TB/s of NVLink‑C2C bandwidth and 1.5 TB of LPDDR5X memory, roughly three times Grace’s 480 GB. Rubin will also support NVLink 6 and NVL72 rack systems that Nvidia says reduce inference token cost by 10× and training GPU count by 4× compared with Blackwell, a sign that memory‑centric design will continue to evolve.

Expert Insights

- Unified memory is a paradigm shift – By exposing GPU memory as a CPU NUMA node, NVLink‑C2C removes the need for explicit data copying and allows CPU code to access HBM directly. This simplifies programming and accelerates memory‑bound tasks.

- HBM3e vs HBM3 – The 50 % increase in capacity and roughly 20–25 % increase in bandwidth of HBM3e considerably extend the size of models that can be served on a single chip, pushing the GH200 into territory previously reserved for multi‑GPU clusters.

- Scalability via NVLink switch – Connecting hundreds of superchips via NVLink switch results in a single logical GPU with terabytes of shared memory, which is crucial for exascale systems like Helios and JUPITER.

- Grace vs Rubin – While Grace offers 72 cores and 480 GB of memory, Rubin will deliver 88 cores and up to 1.5 TB of memory with NVLink 6, hinting that future AI workloads may require even more memory and bandwidth.

Performance Benchmarks & Cost Efficiency

Quick Summary: How does GH200 perform relative to H100/H200, and what does this mean for cost? – Benchmarks show that the GH200 delivers 1.4×–1.8× higher MLPerf inference performance per accelerator than the H100. In practical tests on Llama 3 models, GH200 achieved 7.6× higher throughput and reduced cost per token by 8× compared with H100. Clarifai reports a 17 % performance gain over H100 in its MLPerf results. These gains stem from unified memory and NVLink‑C2C, which reduce latency and enable larger batches.

MLPerf and Vendor Benchmarks

In Nvidia’s MLPerf Inference v4.1 results, the GH200 delivered up to 1.4× more performance per accelerator than the H100 on generative AI tasks. Configured as NVL2, two superchips offered 3.5× more memory and 3× more bandwidth than a single H100, translating into better scaling for large models. Clarifai’s internal benchmarking showed a 17 % throughput improvement over H100 for MLPerf tasks.

Real‑World Inference (LLM and RAG)

In a widely shared blog post, Lambda AI compared GH200 to H100 for single‑node Llama 3.1 70B inference. GH200 delivered 7.6× higher throughput and 8× lower cost per token than H100, thanks to the ability to offload key–value caches to CPU memory. Baseten ran similar experiments with Llama 3.3 70B and found that GH200 outperformed H100 by 32 % because the memory pool allowed larger batch sizes. Nvidia’s technical blog on RAG applications showed that GH200 provides 2.7×–5.7× speedups compared with A100 across embedding generation, index build, vector search and LLM inference.

Cost‑Per‑Hour & Cloud Pricing

Cost is a critical factor. An analysis of GPU rental markets found that GH200 instances cost $4–$6 per hour on hyperscalers, slightly more than H100 but with improved performance, while specialist GPU clouds sometimes offer GH200 at competitive rates. Decentralised marketplaces may allow cheaper access but often limit features. Clarifai’s compute platform uses smart autoscaling and GPU fractioning to optimise resource utilisation, reducing cost per token further.
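Hourly price only becomes meaningful once it is divided by throughput. The sketch below converts an hourly rate and a sustained token rate into cost per million tokens. The prices and throughputs in the example are hypothetical placeholders, not measurements from any of the benchmarks cited here.

```python
def cost_per_million_tokens(price_per_hour: float, tokens_per_second: float) -> float:
    """Convert an hourly instance price and sustained throughput into $/1M tokens."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    tokens_per_hour = tokens_per_second * 3600
    return price_per_hour / tokens_per_hour * 1_000_000


# Hypothetical inputs for illustration only.
scenarios = {
    "cheaper instance, lower throughput": (3.00, 1000.0),
    "pricier instance, higher throughput": (5.00, 3000.0),
}

for label, (price, tps) in scenarios.items():
    print(f"{label}: ${cost_per_million_tokens(price, tps):.2f} per 1M tokens")
```

Under these assumed inputs the more expensive instance is cheaper per token, which is the pattern the pricing discussion above describes.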

Memory‑Bound vs Compute‑Bound Workloads

While GH200 shines for memory‑bound tasks, it does not always beat H100 for compute‑bound kernels. Some compute‑intensive kernels saturate the GPU’s compute units and are not limited by memory bandwidth, so the performance advantage shrinks. Fluence’s guide notes that GH200 is not the best choice for simple single‑GPU training or compute‑only tasks. In such cases, H100 or H200 may deliver similar or better performance at lower cost.

Expert Insights

- Cost per token matters – Inference cost isn’t just about GPU price; it’s about throughput. GH200’s ability to use larger batches and store key–value caches in CPU memory drastically cuts cost per token.

- Batch size is the key – Larger unified memory allows bigger batches and reduces the overhead of reloading contexts, leading to large throughput gains.

- Balance compute and memory – For compute‑heavy tasks like CNN training or matrix multiplications, H100 or H200 may suffice. GH200 is targeted at memory‑bound workloads, so choose accordingly.

Use Cases and Workload Fit

Quick Summary: Which workloads benefit most from GH200? – GH200 excels in large language model inference and training, retrieval‑augmented generation (RAG), multimodal AI, vector search, graph neural networks, complex simulations, video generation, and scientific HPC. Its unified memory allows storing large key–value caches and embeddings in RAM, enabling faster response times and larger context windows. Exascale supercomputers like JUPITER employ tens of thousands of GH200 chips to simulate climate and physics at unprecedented scale.

Large Language Models and Chatbots

Modern LLMs such as Llama 3, Llama 2 and GPT‑J, along with other models of 70 B parameters or more, require storing many gigabytes of weights and key–value caches. GH200’s unified memory supports up to 624 GB of accessible memory, meaning that long context windows (128 k tokens or more) can be served without swapping to disk. Nvidia’s blog on multiturn interactions shows that offloading KV caches to CPU memory reduces time‑to‑first‑token by up to 14× and improves throughput 2× compared with x86‑H100 servers. This makes GH200 ideal for chatbots requiring real‑time responses and deep context.
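To see why long contexts are memory-hungry, the sketch below estimates key–value cache size for a single sequence. The model shape (80 layers, 8 KV heads, head dimension 128, 16-bit values) is an assumption chosen to resemble a 70 B-class model with grouped-query attention; substitute the real configuration of whatever model you deploy.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, batch_size: int = 1,
                   bytes_per_elem: int = 2) -> int:
    """Bytes needed for the KV cache.

    The leading factor of 2 accounts for storing one key tensor and one
    value tensor per layer.
    """
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_elem)


# Assumed 70B-class configuration (not taken from the article).
LAYERS, KV_HEADS, HEAD_DIM = 80, 8, 128

per_token = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, seq_len=1)
full_context = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, seq_len=128 * 1024)

print(per_token // 1024, "KiB per token")            # 320
print(full_context / 2**30, "GiB for 128k tokens")   # 40.0
```

Under these assumptions a single 128 k-token sequence needs about 40 GiB of cache on top of the model weights, which is why spilling the cache into CPU memory matters.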

Retrieval‑Augmented Generation (RAG)

RAG pipelines integrate large language models with vector databases to fetch relevant information. This requires generating embeddings, building vector indices and performing similarity search. Nvidia’s RAG benchmark shows GH200 achieves 2.7× faster embedding generation, 2.9× faster index build, 3.3× faster vector search, and 5.7× faster LLM inference compared with A100. The ability to keep vector databases in unified memory reduces data movement and improves latency. Clarifai’s RAG APIs can run on GH200 to deploy chatbots with domain‑specific knowledge and summarisation capabilities.
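The similarity-search step of a RAG pipeline can be sketched in a few lines. The example below is a brute-force, pure-Python cosine-similarity search over a tiny in-memory index; the document IDs and vectors are invented for illustration, and production systems would use a vector database or GPU-accelerated library rather than this loop.

```python
import heapq
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Return the IDs of the k vectors most similar to the query."""
    scored = ((cosine(query, vec), doc_id) for doc_id, vec in index.items())
    return [doc_id for _, doc_id in heapq.nlargest(k, scored)]


# Hypothetical three-document index with 3-dimensional embeddings.
index = {
    "doc-a": [1.0, 0.0, 0.0],
    "doc-b": [0.9, 0.1, 0.0],
    "doc-c": [0.0, 1.0, 0.0],
}

print(top_k([1.0, 0.05, 0.0], index, k=2))  # ['doc-a', 'doc-b']
```

The whole index is scanned for every query, so memory capacity and bandwidth dominate at scale; that is the part of the workload that benefits from keeping indices resident in a large memory pool.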

Multimodal AI and Video Generation

The GH200’s memory capacity also benefits multimodal models (text + image + video). Models like VideoPoet or diffusion‑based video synthesizers require storing frames and cross‑modal embeddings. GH200’s memory can hold longer sequences and unify CPU and GPU memory, accelerating training and inference. This is especially valuable for companies working on video generation or large‑scale image captioning.

Graph Neural Networks and Recommendation Systems

Large recommender systems and graph neural networks handle billions of nodes and edges, often requiring terabytes of memory. Nvidia’s press release on the DGX GH200 emphasises that NVLink switch combined with multiple superchips provides 144 TB of shared memory for training recommendation systems. This memory capacity is crucial for models like Deep Learning Recommendation Model 3 (DLRM‑v3) or GNNs used in social networks and knowledge graphs. GH200 can substantially reduce training time and improve scaling.

Scientific HPC and Exascale Simulations

Outside AI, the GH200 plays a role in scientific HPC. The European JUPITER supercomputer, expected to exceed 90 exaflops for AI training, employs 24 000 GH200 superchips interconnected via InfiniBand, with each node using 288 Arm cores and 896 GB of memory. The high memory and compute density accelerate climate models, physics simulations and drug discovery. Similarly, the Helios and DGX GH200 systems connect hundreds of superchips via NVLink switches to form unified supernodes with exascale performance.

Expert Insights

- RAG is memory‑bound – RAG workloads often fail on smaller GPUs due to limited memory for embeddings and indices; GH200 addresses this by offering unified memory and near‑zero‑copy access.

- Video generation needs large temporal context – GH200’s memory allows storing many frames and feature maps for high‑resolution video synthesis, reducing I/O overhead.

- Graph workloads thrive on memory bandwidth – Research on GNN training shows GH200 provides 4×–7× speedups for graph neural networks compared with traditional GPUs, thanks to its memory capacity and NVLink network.

Deployment Options and Ecosystem

Quick Summary: Where can you access GH200 today? – GH200 is available via on‑premises DGX systems, cloud providers like AWS, Azure and Google Cloud, specialist GPU clouds (Lambda, Baseten, Fluence) and decentralised marketplaces. Clarifai offers enterprise‑grade GH200 hosting with features like smart autoscaling, GPU fractioning and cross‑cloud orchestration. NVLink switch systems allow multiple superchips to act as a single GPU with massive shared memory.

On‑Premise DGX Systems

Nvidia’s DGX GH200 uses NVLink switch to connect up to 256 superchips, delivering 1 exaflop of performance and 144 TB of unified memory. Organisations like Google, Meta and Microsoft were early adopters and plan to use DGX GH200 systems for large model training and AI research. For enterprises with strict data‑sovereignty requirements, DGX boxes offer maximum control and high‑speed NVLink interconnects.

Hyperscaler Instances

Major cloud providers now offer GH200 instances. On AWS, Azure and Google Cloud, you can rent GH200 nodes at roughly $4–$6 per hour. Pricing varies by region and configuration; the unified memory reduces the need for multi‑GPU clusters, potentially lowering overall costs. Cloud instances are usually available in limited regions because of supply constraints, so early reservation is advisable.

Specialist GPU Clouds and Decentralised Markets

Companies like Lambda Cloud, Baseten and Fluence provide GH200 rental or hosted inference. Fluence’s guide compares pricing across providers and notes that specialist clouds may offer more competitive pricing and better software support than hyperscalers. Baseten’s experiments show how to run Llama 3 on GH200 for inference with 32 % better throughput than H100. Decentralised GPU marketplaces such as Golem or GPUX let users rent GH200 capacity from individuals or small data centres, though features like NVLink pairing may be limited.

Clarifai Compute Platform

Clarifai stands out by offering enterprise‑grade GH200 hosting with robust orchestration tools. Key features include:

- Smart autoscaling: automatically scales GH200 resources based on model demand, ensuring low latency while optimising cost.

- GPU fractioning: splits a GH200 into smaller logical partitions, allowing multiple workloads to share the memory pool and compute units efficiently.

- Cross‑cloud flexibility: run workloads on GH200 hardware across multiple clouds or on‑premises, simplifying migration and failover.

- Unified control & governance: manage all deployments through Clarifai’s console or API, with monitoring, logging and compliance built in.

These capabilities let enterprises adopt GH200 without investing in physical infrastructure and ensure they only pay for what they use.
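As a rough illustration of what demand-based autoscaling means in general, the sketch below sizes a replica pool from queue depth. It is a generic rule, not Clarifai’s algorithm or API; the function name, the per-replica capacity and the replica limits are all hypothetical.

```python
import math


def desired_replicas(queued_requests: int, per_replica_capacity: int,
                     min_replicas: int = 0, max_replicas: int = 8) -> int:
    """Replicas needed to serve the current queue, clamped to the allowed range."""
    if per_replica_capacity <= 0:
        raise ValueError("per_replica_capacity must be positive")
    needed = math.ceil(queued_requests / per_replica_capacity)
    return max(min_replicas, min(max_replicas, needed))


# Hypothetical load levels, assuming each replica handles 16 concurrent requests.
for load in (0, 17, 1000):
    print(load, "->", desired_replicas(load, per_replica_capacity=16))
```

Scaling to zero when the queue is empty is what makes pay-per-use pricing possible; the upper clamp reflects limited hardware supply.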

Expert Insights

- NVLink switch vs InfiniBand – NVLink switch offers lower latency and higher bandwidth than InfiniBand, enabling multiple GH200 modules to act like a single GPU.

- Cloud availability is limited – Due to high demand and limited supply, GH200 instances may be scarce on public clouds; working with specialist providers or Clarifai can help secure priority access.

- Compute orchestration simplifies adoption – Using Clarifai’s orchestration features lets engineers focus on models rather than infrastructure, improving time‑to‑market.

Decision Guide: GH200 vs H100/H200 vs B200/Rubin

Quick Summary: How do you decide which GPU to use? – The choice depends on memory requirements, bandwidth, software support, power budget and cost. GH200 offers unified memory (96–144 GB HBM + 480 GB LPDDR) and high bandwidth (900 GB/s NVLink‑C2C), making it ideal for memory‑bound tasks. H100 and H200 are better for compute‑bound workloads or when using x86 software stacks. B200 (Blackwell) and the upcoming Rubin promise even more memory and cost efficiency, but availability may lag. Clarifai’s orchestration can mix and match hardware to meet workload needs.

Memory Capacity & Bandwidth

- H100 – 80 GB HBM and 2 TB/s memory bandwidth (HBM3). Memory is local to the GPU; data must be moved from the CPU via PCIe.

- H200 – 141 GB HBM3e and 4.8 TB/s bandwidth. A drop‑in replacement for H100 but still requires PCIe or NVLink bridging. Suitable for compute‑bound tasks needing more GPU memory.

- GH200 – 96 GB HBM3 or 144 GB HBM3e plus 480 GB LPDDR5X accessible via 900 GB/s NVLink‑C2C, yielding a unified pool of up to 624 GB.

- B200 (Blackwell) – Rumoured to offer 208 GB HBM3e and 10 TB/s bandwidth; lacks unified CPU memory, so still reliant on PCIe or NVLink connections.

- Rubin platform – Will feature an 88‑core CPU with 1.5 TB of LPDDR5X and 1.8 TB/s NVLink‑C2C bandwidth. NVL72 racks are expected to drastically reduce inference cost.
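One way to read the capacity figures in this list is to ask how many devices a given memory footprint would need. The sketch below does that with the per-device capacities quoted above; the 500 GB footprint is a hypothetical workload, and the calculation deliberately ignores that GH200’s LPDDR5X portion is slower than HBM.

```python
import math

# Per-device memory in GB, as quoted in the list above.
CAPACITY_GB = {
    "H100": 80,
    "H200": 141,
    "GH200 (HBM3e + LPDDR5X)": 624,
}


def devices_needed(footprint_gb: float, per_device_gb: float) -> int:
    """Minimum device count whose combined memory covers the footprint."""
    if per_device_gb <= 0:
        raise ValueError("per_device_gb must be positive")
    return math.ceil(footprint_gb / per_device_gb)


footprint_gb = 500  # hypothetical: weights plus KV cache plus embeddings

for name, capacity in CAPACITY_GB.items():
    print(f"{name}: {devices_needed(footprint_gb, capacity)} device(s)")
```

Capacity is only one axis: a workload that fits in one GH200 pool may still run faster on several HBM-only GPUs if it is compute-bound.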

Software Stack & Architecture

- GH200 uses an ARM architecture (Grace CPU). Many AI frameworks support ARM, but some Python libraries and CUDA versions may require recompilation. Clarifai’s local runner addresses this by providing containerised environments with the right dependencies.

- H100/H200 run on x86 servers and benefit from mature software ecosystems. If your codebase depends heavily on x86‑specific libraries, migrating to GH200 may require additional effort.

Power Consumption & Cooling

GH200 systems can draw up to 1 000 W per node because of the combined CPU and GPU. Ensure adequate cooling and power infrastructure. H100 and H200 nodes typically consume less power individually but may require more nodes to match GH200’s memory capacity.

Cost & Availability

GH200 hardware is more expensive than H100/H200 upfront, but the reduced number of nodes required for memory‑intensive workloads can offset the cost. Pricing data suggests GH200 rentals cost about $4–$6 per hour. H100/H200 may be cheaper per hour but need more units to host the same model. Blackwell and Rubin are not yet widely available; early adopters may pay premium pricing.

Decision Matrix

- Choose GH200 when your workloads are memory‑bound (LLM inference, RAG, GNNs, large embeddings) or require unified memory for efficient pipelines.

- Choose H100/H200 for compute‑bound tasks like convolutional neural networks, transformer pretraining, or when using x86‑dependent software. H200 adds more HBM but still lacks unified CPU memory.

- Wait for B200/Rubin if you need even larger memory or better cost efficiency and can handle delayed availability. Rubin’s NVL72 racks may be revolutionary for exascale AI.

- Leverage Clarifai to mix hardware types within a single pipeline, using GH200 for memory‑heavy stages and H100/B200 for compute‑heavy stages.
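The matrix above can be expressed as a small rule chain. The sketch below is one possible encoding, with invented parameter names; it simplifies the guidance (for example, it treats x86 dependence as decisive) and is meant as a starting point for discussion rather than a sizing tool.

```python
def recommend_hardware(memory_bound: bool,
                       x86_only: bool = False,
                       exceeds_gh200_memory: bool = False,
                       can_wait: bool = False) -> str:
    """Map workload traits onto the decision matrix above."""
    if x86_only:
        # x86-dependent stacks stay on H100/H200 servers.
        return "H100/H200"
    if memory_bound and exceeds_gh200_memory and can_wait:
        # Needs more memory than GH200 offers and can tolerate delayed availability.
        return "B200/Rubin"
    if memory_bound:
        return "GH200"
    # Compute-bound work gains little from the unified memory pool.
    return "H100/H200"


print(recommend_hardware(memory_bound=True))                  # GH200
print(recommend_hardware(memory_bound=False))                 # H100/H200
print(recommend_hardware(memory_bound=True, x86_only=True))   # H100/H200
print(recommend_hardware(memory_bound=True,
                         exceeds_gh200_memory=True,
                         can_wait=True))                      # B200/Rubin
```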

Expert Insights

- Unified memory changes the calculus – Consider memory capacity first; the unified 624 GB on GH200 can replace several H100 cards and simplify scaling.

- ARM software is maturing – Tools like PyTorch and TensorFlow have improved support for ARM; containerised environments (e.g., Clarifai local runner) make deployment manageable.

- HBM3e is a strong bridge – H200’s HBM3e memory provides some of GH200’s capacity benefits without a new CPU architecture, offering a simpler upgrade path.

Challenges, Limitations and Mitigation

Quick Summary: What are the pitfalls of adopting GH200 and how can you mitigate them? – Key challenges include software compatibility on ARM, high power consumption, cross‑die latency, supply chain constraints and higher cost. Mitigation strategies involve using containerised environments (Clarifai local runner), right‑sizing resources (GPU fractioning), and planning for supply constraints.

Software Ecosystem on ARM

The Grace CPU uses an ARM architecture, which may require recompiling libraries or dependencies. PyTorch, TensorFlow and CUDA support ARM, but some Python packages rely on x86 binaries. Lambda’s blog warns that PyTorch must be compiled for ARM and that prebuilt wheels may be limited. Clarifai’s local runner addresses this by packaging dependencies and providing pre‑configured containers, making it easier to deploy models on GH200.
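A simple guard at start-up can make architecture assumptions explicit before a deployment fails on a missing binary. The sketch below uses Python’s standard `platform` module; the helper name and the warning text are illustrative.

```python
import platform


def is_arm64(machine=None) -> bool:
    """True if the given (or current) machine string denotes 64-bit ARM."""
    machine = (machine or platform.machine()).lower()
    return machine in {"aarch64", "arm64"}


if is_arm64():
    print("Running on 64-bit ARM: check that every dependency ships an aarch64 build.")
else:
    print(f"Running on {platform.machine() or 'an unknown architecture'}.")
```

Linux on Grace typically reports `aarch64`; `arm64` is included because some platforms use that spelling.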

Power and Cooling Requirements

A GH200 superchip can consume up to 900 W for the GPU and 1 000 W for the full system. Data centres must ensure adequate cooling, power delivery and monitoring. Using smart autoscaling to spin down unused nodes reduces energy usage. Consider the environmental impact and potential regulatory requirements (e.g., carbon reporting).

Latency & NUMA Effects

While NVLink‑C2C offers high bandwidth, cross‑die memory access has higher latency than local HBM. Chips and Cheese’s analysis notes that average latency increases when accessing CPU memory rather than HBM. Developers should design algorithms to prioritise data locality: keep frequently accessed tensors in HBM and use CPU memory for KV caches and infrequently accessed data. Research on optimising data placement and scheduling is ongoing, including work on LLVM OpenMP offload optimisations for GH200 that offers insights for HPC workloads.
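The locality advice above can be sketched as a greedy placement heuristic: rank buffers by how often they are touched per gigabyte, fill HBM with the hottest ones, and spill the rest to CPU memory. The buffer names, sizes and access counts below are hypothetical, and real placement is handled by the runtime and framework rather than a script like this.

```python
def place_tensors(tensors, hbm_gb):
    """Greedy placement of (name, size_gb, accesses_per_step) tuples.

    Buffers are considered hottest-first (accesses per GB); each goes to HBM
    if it still fits, otherwise to LPDDR5X. Sizes must be positive.
    """
    placement = {}
    free_gb = hbm_gb
    ranked = sorted(tensors, key=lambda t: t[2] / t[1], reverse=True)
    for name, size_gb, _accesses in ranked:
        if size_gb <= free_gb:
            placement[name] = "HBM"
            free_gb -= size_gb
        else:
            placement[name] = "LPDDR5X"
    return placement


# Hypothetical working set for a 96 GB HBM3 part.
tensors = [
    ("weights", 70, 1000),
    ("active_kv", 20, 800),
    ("cold_kv", 200, 5),
]

print(place_tensors(tensors, hbm_gb=96))
# {'active_kv': 'HBM', 'weights': 'HBM', 'cold_kv': 'LPDDR5X'}
```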

Supply Chain & Pricing

High demand and limited supply mean GH200 instances can be scarce. Fluence’s pricing comparison highlights that GH200 may cost more than H100 per hour but offers better performance for memory‑heavy tasks. To mitigate supply issues, work with providers like Clarifai that reserve capacity, or use decentralised markets to offload non‑critical workloads.

Expert Insights

- Embrace hybrid architecture – Use both H100/H200 and GH200 where appropriate; unify them via container orchestration to overcome supply and software limitations.

- Optimise data placement – Keep the data used by compute‑intensive kernels in HBM; offload caches to LPDDR memory. Monitor memory bandwidth and latency using profiling tools.

- Plan for long lead times – Pre‑order GH200 hardware or cloud reservations. Develop software in portable frameworks to ease transitions between architectures.

Emerging Trends & Future Outlook

Quick Summary: What’s next for memory‑centric AI hardware? – Trends include HBM3e memory, Blackwell (B200/GB200) GPUs, Rubin CPU platforms, NVLink‑6 and NVL72 racks, and the rise of exascale supercomputers. These innovations aim to further reduce inference cost and energy consumption while increasing memory capacity and compute density.

HBM3e and Blackwell

The HBM3e revision of GH200 already increases memory capacity to 144 GB and bandwidth to 4.9 TB/s. Nvidia’s next GPU architecture, Blackwell, features the B200 and server configurations like GB200 and GB300. These chips will increase HBM capacity to around 208 GB, provide improved compute throughput and may be paired with the Grace or Rubin‑platform CPU for unified memory. According to Adrian Cockcroft, writing on Medium, GH200 pairs an H200 GPU with the Grace CPU and can connect 256 modules using shared memory for improved performance.

Rubin Platform and NVLink‑6

Nvidia’s Rubin platform pushes memory‑centric design further by introducing an 88‑core CPU with 1.5 TB of LPDDR5X and 1.8 TB/s of NVLink‑C2C bandwidth. Nvidia says Rubin’s NVL72 rack systems will reduce inference cost by 10× and the number of GPUs needed for training by 4× compared with Blackwell. We can expect mainstream adoption around 2026–2027, although early access may be limited to large cloud providers.

Exascale Supercomputers & Global AI Infrastructure

Supercomputers like JUPITER and Helios demonstrate the potential of GH200 at scale. JUPITER uses 24 000 GH200 superchips and is expected to deliver more than 90 exaflops for AI training. These systems will power research into climate change, weather prediction, quantum physics and AI. As generative AI applications such as video generation and protein folding require more memory, these exascale infrastructures will be critical.

Industry Collaboration and Ecosystem

Nvidia’s press releases emphasise that major tech companies (Google, Meta, Microsoft) and integrators like SoftBank are investing heavily in GH200 systems. Meanwhile, storage and networking vendors are adapting their products to handle unified memory and high‑throughput data streams. The ecosystem will continue to expand, bringing better software tools, memory‑aware schedulers and cross‑vendor interoperability.

Expert Insights

- Memory is the new frontier – Future platforms will emphasise memory capacity and bandwidth over raw flops; algorithms will be redesigned to exploit unified memory.

- Rubin and NVLink 6 – These will likely enable multi‑rack clusters with unified memory measured in petabytes, transforming AI infrastructure.

- Prepare now – Building pipelines that can run on GH200 sets you up to adopt B200/Rubin with minimal changes.

Clarifai Product Integration & Best Practices

Quick Summary: How does Clarifai leverage GH200 and what are best practices for users? – Clarifai offers enterprise‑grade GH200 hosting with features such as smart autoscaling, GPU fractioning, cross‑cloud orchestration, and a local runner for ARM‑optimised deployment. To maximise performance, use larger batch sizes, store key–value caches in CPU memory, and integrate vector databases with Clarifai’s RAG APIs.

Clarifai’s GH200 Hosting

Clarifai’s compute platform makes the GH200 accessible without needing to purchase hardware. It abstracts complexity through the following features:

- Smart autoscaling provisions GH200 instances as demand increases and scales them down during idle periods.

- GPU fractioning lets multiple jobs share a single GH200, splitting memory and compute resources to maximise utilisation.

- Cross‑cloud orchestration allows workloads to run on GH200 across various clouds and on‑premises infrastructure with unified monitoring and governance.

- Unified control & governance provides centralised dashboards, auditing and role‑based access, which are critical for enterprise compliance.

Clarifai’s RAG and embedding APIs are optimised for GH200 and support vector search and summarisation. Developers can deploy LLMs with large context windows and integrate external knowledge sources without worrying about memory management. Clarifai’s pricing is transparent and typically tied to usage, offering cost‑effective access to GH200 resources.

Best Practices for Deploying on GH200

- Use large batch sizes – Leverage the unified memory to increase batch sizes for inference; this reduces overhead and improves throughput.

- Offload KV caches to CPU memory – Store key–value caches in LPDDR memory to free up HBM for compute; NVLink‑C2C keeps access latency low.

- Integrate vector databases – For RAG, connect Clarifai’s APIs to vector stores; keep indices in unified memory to accelerate search.

- Monitor memory bandwidth – Use profiling tools to detect memory bottlenecks. Data placement matters; frequently accessed tensors should stay in HBM.

- Adopt containerised environments – Use Clarifai’s local runner to handle ARM dependencies and maintain reproducibility.

- Plan cross‑hardware pipelines – Combine GH200 for memory‑intensive stages with H100/B200 for compute‑heavy stages, orchestrated via Clarifai’s platform.
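The first two practices interact: the batch size you can sustain depends on how much memory remains after the weights are loaded. The sketch below estimates that ceiling. The 140 GB weight footprint and 2.5 GB of KV cache per sequence are hypothetical inputs for a 70 B-class model at 16-bit precision with a context of a few thousand tokens; measure your own model before relying on such numbers.

```python
def max_batch_size(memory_budget_gb: float, weights_gb: float,
                   kv_gb_per_sequence: float) -> int:
    """Largest number of concurrent sequences whose KV caches fit after the weights."""
    if kv_gb_per_sequence <= 0:
        raise ValueError("kv_gb_per_sequence must be positive")
    free_gb = memory_budget_gb - weights_gb
    if free_gb <= 0:
        return 0
    return int(free_gb // kv_gb_per_sequence)


WEIGHTS_GB = 140.0        # hypothetical model footprint
KV_GB_PER_SEQUENCE = 2.5  # hypothetical per-sequence cache

print(max_batch_size(144, WEIGHTS_GB, KV_GB_PER_SEQUENCE))  # HBM3e only: 1
print(max_batch_size(624, WEIGHTS_GB, KV_GB_PER_SEQUENCE))  # unified pool: 193
```

The unified-pool figure is an upper bound on capacity, not a throughput prediction, since caches held in LPDDR5X are accessed at lower bandwidth than HBM.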

Expert Insights

- Memory‑aware design – Rethink your algorithms to exploit unified memory: pre‑allocate large buffers, reduce data copies and tune for NVLink bandwidth.

- GPU sharing boosts ROI – Fractioning a GH200 across multiple workloads increases utilisation and lowers cost per job; this is especially useful for startups.

- Clarifai’s cross‑cloud synergy – Running workloads across multiple clouds reduces vendor lock‑in and improves availability.

Frequently Asked Questions

Q1: Is GH200 available today and how much does it cost? – Yes. GH200 systems are available via cloud providers and specialist GPU clouds. Rental prices range from $4 to $6 per hour depending on provider and region. Clarifai offers usage‑based pricing through its platform.

Q2: How does GH200 differ from H100 and H200? – GH200 fuses a CPU and GPU on one module with 900 GB/s NVLink‑C2C, creating a unified memory pool of up to 624 GB. H100 is a standalone GPU with 80 GB HBM, while H200 upgrades the H100 with 141 GB HBM3e. GH200 is better for memory‑bound tasks; H100/H200 remain strong for compute‑bound workloads and x86 compatibility.

Q3: Will I need to rewrite my code to run on GH200? – Most AI frameworks (PyTorch, TensorFlow, JAX) support ARM and CUDA. However, some libraries may need recompilation. Using containerised environments (e.g., Clarifai local runner) simplifies the migration.

Q4: What about power consumption and cooling? – GH200 nodes can consume around 1 000 W. Ensure adequate power and cooling. Smart autoscaling reduces idle consumption.

Q5: When will Blackwell/B200/Rubin be widely available? – Nvidia has announced the B200 and Rubin platforms, but broad availability may arrive in late 2026 or 2027. Rubin promises 10× lower inference cost and 4× fewer GPUs compared with Blackwell. For most developers, GH200 will remain a flagship choice through 2026.

Conclusion

The Nvidia GH200 marks a turning point in AI hardware. By fusing a 72‑core Grace CPU with a Hopper/H200 GPU via NVLink‑C2C, it delivers a unified memory pool of up to 624 GB and removes the bottlenecks of PCIe. Benchmarks show up to 1.8× more performance than the H100 and large improvements in cost per token for LLM inference. These gains stem from memory: the ability to keep entire models, key–value caches and vector indices in the superchip’s unified memory. While GH200 is not perfect (software on ARM requires adaptation, power consumption is high and supply is limited), it offers unmatched capabilities for memory‑bound workloads.

As AI enters the era of trillion‑parameter models, memory‑centric computing becomes essential. GH200 paves the way for Blackwell, Rubin and beyond, with larger memory pools and more efficient NVLink interconnects. Whether you are building chatbots, generating video, exploring scientific simulations or training recommender systems, GH200 provides a powerful platform. Partnering with Clarifai simplifies adoption: its compute platform offers smart autoscaling, GPU fractioning and cross‑cloud orchestration, making the GH200 accessible to teams of all sizes. By understanding the architecture, performance characteristics and best practices outlined here, you can harness the GH200’s potential and prepare for the next wave of AI innovation.