mall uses Large Language Models (LLMs) to run Natural Language Processing (NLP) operations against your data. This package is available for both R and Python. Version 0.2.0 has been released to CRAN and PyPI, respectively.

In R, you can install the latest version with `install.packages("mall")`.

In Python, install it with `pip install mlverse-mall`.

This release expands the number of LLM providers you can use with mall. In addition, in Python it introduces the option to run the NLP operations over string vectors, and in R, it enables support for 'parallelized' requests.

We are also very excited to announce a brand new cheatsheet for this package. It is available in print (PDF) and HTML format!

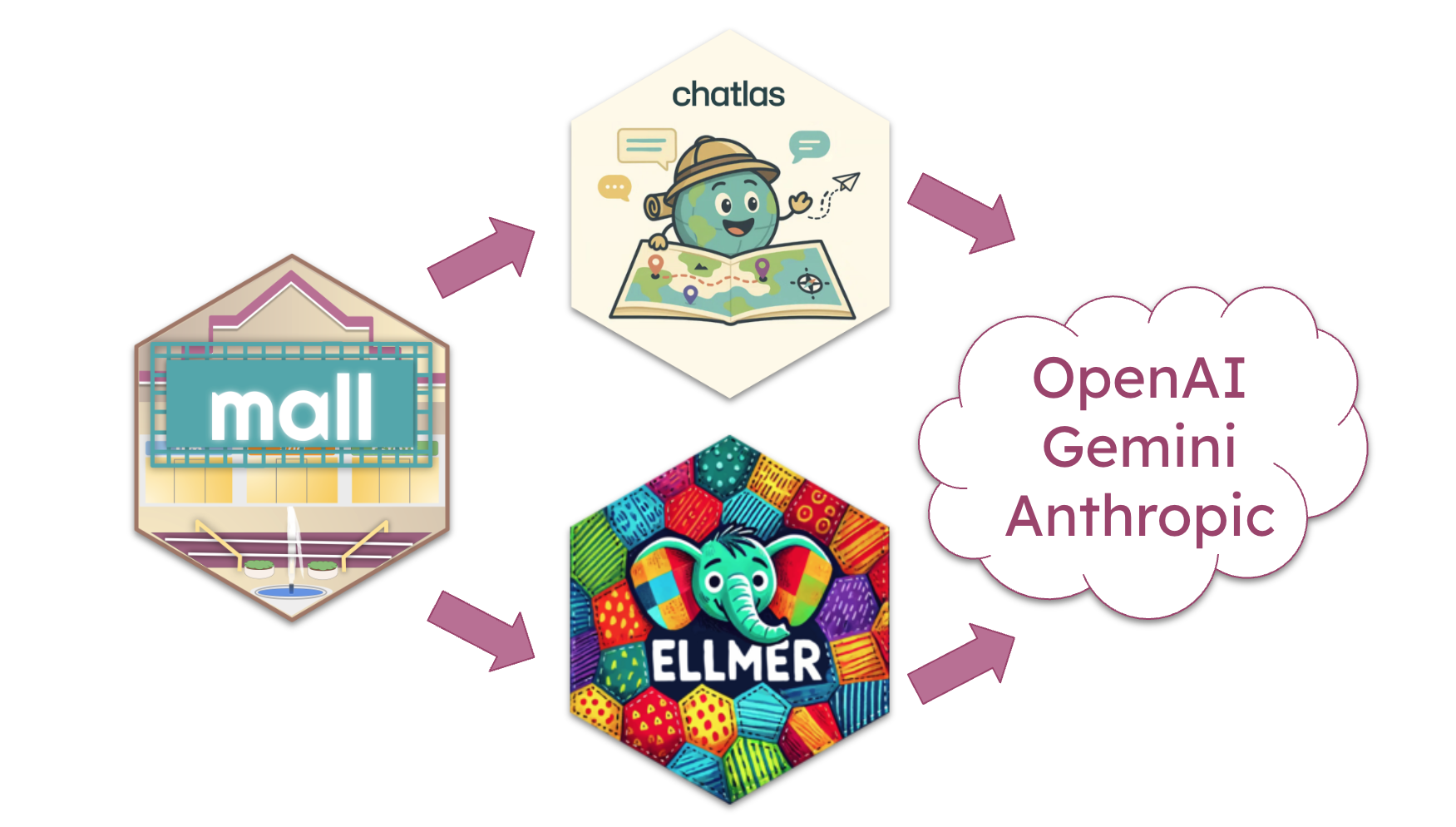

More LLM providers

The biggest highlight of this release is the ability to use external LLM providers such as OpenAI, Gemini and Anthropic. Instead of writing an integration for each provider one by one, mall uses specialized integration packages that act as intermediaries.
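The intermediary approach can be pictured as a small adapter layer. The sketch below is hypothetical Python, not mall's actual internals: `ChatBackend`, `EchoBackend`, and `sentiment()` are illustrative names, and the stand-in backend returns canned answers instead of calling a provider. It shows the NLP logic written once against a single interface, so that any object satisfying that interface (roughly the role ellmer and chatlas play for mall) can be swapped in.

```python
from typing import Protocol


class ChatBackend(Protocol):
    """Hypothetical interface: anything that turns a prompt into a text reply."""

    def chat(self, prompt: str) -> str: ...


class EchoBackend:
    """Stand-in provider for this sketch only; a real one would call an API."""

    def chat(self, prompt: str) -> str:
        return "positive" if "happy" in prompt else "negative"


def sentiment(texts: list[str], backend: ChatBackend) -> list[str]:
    # The NLP instruction is written once; the backend decides which provider answers.
    instruction = "Classify the sentiment of the text as positive or negative: "
    return [backend.chat(instruction + t).strip().lower() for t in texts]


print(sentiment(["I am happy", "I am sad"], EchoBackend()))  # ['positive', 'negative']
```

Adding a provider in this design means writing one more backend class, while `sentiment()` stays unchanged.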

In R, mall uses the ellmer package to integrate with a variety of LLM providers.

To access the new feature, first create a chat connection, and then pass that connection to `llm_use()`. Here is an example of connecting to and using OpenAI:

```r
install.packages("ellmer")

library(mall)
library(ellmer)

chat <- chat_openai()
#> Using model = "gpt-4.1".

llm_use(chat, .cache = "_my_cache")
#>
#> ── mall session object
#> Backend: ellmer
#> LLM session: model:gpt-4.1
#> R session: cache_folder:_my_cache
```

In Python, mall uses chatlas as the integration point with the LLM. chatlas also integrates with several LLM providers.

To use it, first instantiate a chatlas chat connection class, and then pass it to the Polars data frame via the `<DF>.llm.use()` function:

```
pip install chatlas
```

```python
import mall
from chatlas import ChatOpenAI

chat = ChatOpenAI()

data = mall.MallData
reviews = data.reviews

reviews.llm.use(chat)
#> {'backend': 'chatlas', 'chat': <Chat OpenAI/gpt-4.1 turns=0 tokens=0/0 $0.0>
#> , '_cache': '_mall_cache'}
```

Connecting mall to external LLM providers introduces a consideration of cost. Most providers charge for the use of their API, so processing a large table with long texts can be an expensive operation.
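A back-of-the-envelope estimate helps gauge how cost scales with table size. The helper below is hypothetical and not part of mall: it assumes roughly four characters per token (a common rule of thumb for English text, not an exact tokenizer count) and a price per million input tokens that you would look up for your own provider and model; the $2.00 figure is made up for the example.

```python
def estimate_input_cost(texts, usd_per_million_tokens, chars_per_token=4.0,
                        overhead_tokens_per_row=0):
    """Rough estimate of the input-token cost of sending every text once.

    - chars_per_token=4.0 is a rule of thumb, not an exact tokenizer count.
    - overhead_tokens_per_row approximates the instructions sent with each row.
    - Output (completion) tokens are not included.
    """
    total_tokens = sum(len(t) / chars_per_token + overhead_tokens_per_row
                       for t in texts)
    return total_tokens / 1_000_000 * usd_per_million_tokens


# Hypothetical table: 10,000 reviews of 2,000 characters each,
# at a hypothetical price of $2.00 per million input tokens.
reviews = ["x" * 2000] * 10_000
print(estimate_input_cost(reviews, usd_per_million_tokens=2.0))  # 10.0
```

Under these assumptions, the table comes to about 5 million input tokens, or $10.00, before counting the instructions sent with each row or the tokens the model writes back.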

Parallel requests (R only)

A new feature introduced in ellmer 0.3.0 makes it possible to submit multiple prompts in parallel, rather than in sequence. This makes it faster, and potentially cheaper, to process a table. If the provider supports this feature, ellmer is able to leverage it via the `parallel_chat()` function. Gemini and OpenAI support the feature.
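The speedup from parallel requests comes from overlapping the waiting time of many network calls. ellmer and `parallel_chat()` are R tools, so the Python sketch below only illustrates the general idea with a thread pool from the standard library; it does not reproduce how ellmer sends requests. `fake_llm()` is a hypothetical stand-in that sleeps to simulate latency.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a network call to an LLM provider."""
    time.sleep(0.05)  # simulated round-trip latency
    return prompt.upper()


def run_sequential(prompts):
    # One request at a time: total time is roughly the sum of all latencies.
    return [fake_llm(p) for p in prompts]


def run_parallel(prompts, max_workers=8):
    # Several requests are in flight at once; map() keeps results in input order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fake_llm, prompts))


prompts = [f"review {i}" for i in range(8)]
for runner in (run_sequential, run_parallel):
    start = time.perf_counter()
    results = runner(prompts)
    print(runner.__name__, f"{time.perf_counter() - start:.2f}s", results[:2])
```

On a typical machine the sequential run takes about 0.4 seconds (8 × 0.05 s) and the parallel run about 0.05 seconds, while both return the same results in the same order. Real providers also enforce rate limits, which caps how many requests can usefully run at once.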

In the new release of mall, the integration with ellmer has been specifically written to take advantage of parallel chat. The internals have been rewritten to submit the NLP-specific instructions as a system message in order to reduce the size of each prompt. Additionally, the cache system has been retooled to support batched requests.
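Both internal changes can be pictured with a small Python sketch. It is hypothetical and not mall's code: `build_requests()`, `cache_key()`, `run_batch()`, the `send_batch` callable, and the system prompt text are all illustrative. It places the NLP instruction in a system message, keeps each user message to the text of a single row, and keys a cache on a hash of each request so that a batch only sends rows that have not been answered before.

```python
import hashlib
import json

# Hypothetical instruction; mall's real prompts differ.
SYSTEM_PROMPT = (
    "You are a helpful sentiment engine. Return only one of the following "
    "answers: positive, negative, neutral. No explanations."
)


def build_requests(texts, system_prompt=SYSTEM_PROMPT):
    # The instruction goes in a system message; each user message holds only
    # the text from one row, so the per-row content stays short.
    return [
        [{"role": "system", "content": system_prompt},
         {"role": "user", "content": text}]
        for text in texts
    ]


def cache_key(messages):
    # Hash the whole request so identical requests share one cache entry.
    payload = json.dumps(messages, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def run_batch(texts, send_batch, cache):
    """Send only uncached requests in a single batch, then answer from the cache."""
    requests = build_requests(texts)
    keys = [cache_key(r) for r in requests]
    # A dict keeps first-seen order and drops duplicate requests within a batch.
    missing = {k: r for k, r in zip(keys, requests) if k not in cache}
    if missing:
        replies = send_batch(list(missing.values()))
        cache.update(zip(missing.keys(), replies))
    return [cache[k] for k in keys]


# Usage with a stand-in provider that records how many requests it receives.
calls = []


def fake_send_batch(batch):
    calls.append(len(batch))
    return ["positive" if "love" in m[-1]["content"] else "negative" for m in batch]


cache = {}
print(run_batch(["I love it", "Too slow"], fake_send_batch, cache))
print(run_batch(["I love it", "Broke in a week"], fake_send_batch, cache))
print(calls)  # [2, 1]: the second call only sent the new review
```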

NLP operations without a table

Since its initial version, mall has given R users the ability to perform the NLP operations over a string vector, in other words, without needing a table. Starting with the new release, mall also provides the same functionality in its Python version.

mall can process vectors contained in a list object. To use it, initialize a new `LLMVec` class object with either an Ollama model or a chatlas `Chat` object, and then access the same NLP functions as the Polars extension.

```python
# Initialize a Chat object
from chatlas import ChatOllama

chat = ChatOllama(model="llama3.2")

# Pass it to a new LLMVec
from mall import LLMVec

llm = LLMVec(chat)
```

Access the functions via the new `LLMVec` object, and pass the text to be processed.

```python
llm.sentiment(["I am happy", "I am sad"])
#> ['positive', 'negative']

llm.translate(["Este es el mejor dia!"], "english")
#> ['This is the best day!']
```

For more information, visit the reference page: LLMVec

New cheatsheet

The brand new official cheatsheet is now available from Posit: Natural Language processing using LLMs in R/Python.

Its main feature is that one side of the page is dedicated to the R version, and the other side to the Python version.

A web page version is also available on the official cheatsheet site. It takes advantage of the tab feature that lets you select between the R and Python explanations and examples.