Introduction

Selecting the best GPU is a essential resolution when working machine studying and LLM workloads. You want sufficient compute to run your fashions effectively with out overspending on pointless energy. On this submit, we examine two strong choices: NVIDIA’s A10 and the newer L40S GPUs. We’ll break down their specs, efficiency benchmarks towards LLMs, and pricing that can assist you select based mostly in your workload.

There may be additionally a rising problem within the trade. Almost 40% of corporations battle to run AI initiatives as a result of restricted entry to GPUs. The demand is outpacing provide, making it tougher to scale reliably. That is the place flexibility turns into vital. Counting on a single cloud or {hardware} supplier can decelerate your initiatives. We’ll discover how Clarifai’s Compute Orchestration helps you entry each A10 and L40S GPUs, providing you with the liberty to change based mostly on availability and workload wants whereas avoiding vendor lock-in.

Let’s dive in and check out these two completely different GPU architectures.

Ampere GPUs (NVIDIA A10)

NVIDIA’s Ampere structure, launched in 2020, launched third-generation Tensor Cores optimized for mixed-precision compute (FP16, TF32, INT8) and improved Multi-Occasion GPU (MIG) help. The A10 GPU is designed for cost-effective AI inference, laptop imaginative and prescient, and graphics-heavy workloads. It handles mid-sized LLMs, imaginative and prescient fashions, and video duties effectively. With second-gen RT Cores and RTX Digital Workstation (vWS) help, the A10 is a strong selection for working graphics and AI workloads on virtualized infrastructure.

Ada Lovelace GPUs (NVIDIA L40S)

The Ada Lovelace structure takes efficiency and effectivity additional, designed for contemporary AI and graphics workloads. The L40S GPU options fourth-gen Tensor Cores with FP8 precision help, delivering vital acceleration for giant LLMs, generative AI, and fine-tuning. It additionally provides third-gen RT Cores and AV1 {hardware} encoding, making it a powerful match for complicated 3D graphics, rendering, and media pipelines. Lovelace structure allows the L40S to deal with multi-workload environments the place AI compute and high-end graphics run facet by facet.

A10 vs. L40S: Specs Comparability

Core Rely and Clock Speeds

The L40S includes a larger CUDA core depend than the A10, offering higher parallel processing energy for AI and ML workloads. CUDA cores are specialised GPU cores designed to deal with complicated computations in parallel, which is crucial for accelerating AI duties.

The L40S additionally runs at a better enhance clock of 2520 MHz, a 49% enhance over the A10’s 1695 MHz, leading to quicker compute efficiency.

VRAM Capability and Reminiscence Bandwidth

The L40S provides 48 GB of VRAM, double the A10’s 24 GB, permitting it to deal with bigger fashions and datasets extra effectively. Its reminiscence bandwidth can be larger at 864.0 GB/s in comparison with the A10’s 600.2 GB/s, enhancing knowledge throughput throughout memory-intensive duties.

A10 vs L40S: Efficiency

How do the A10 and L40S examine in real-world LLM inference? Our analysis workforce benchmarked the MiniCPM-4B, Phi4-mini-instruct, and Llama-3.2-3b-instruct fashions working in FP16 (half-precision) on each GPUs. FP16 allows quicker efficiency and decrease reminiscence utilization—excellent for large-scale AI workloads.

We examined latency (the time taken to generate every token and full a full request, measured in seconds) and throughput (the variety of tokens processed per second) throughout varied eventualities. Each metrics are essential for evaluating LLM efficiency in manufacturing.

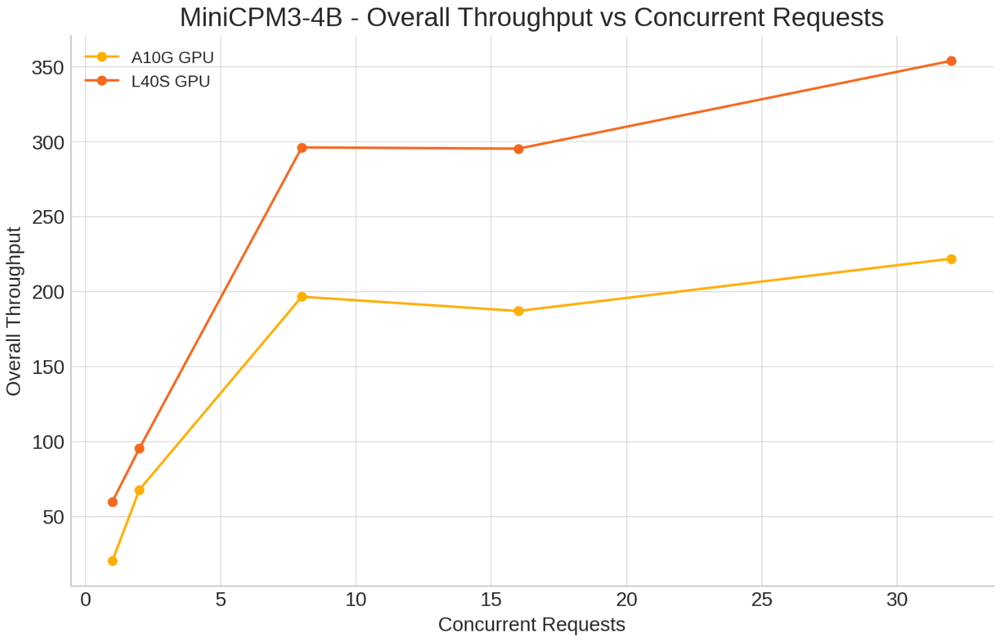

MiniCPM-4B

Eventualities examined:

- Concurrent Requests: 1, 2, 8, 16, 32

- Enter tokens: 500

- Output Tokens: 150

Key Insights:

Single Concurrent Request: L40S considerably improved latency per token (0.016s vs. 0.047s on A10G) and elevated end-to-end throughput from 97.21 to 296.46 tokens/sec.

Increased Concurrency (32 concurrent requests): L40S maintained higher latency (0.067s vs. 0.088s) and throughput of 331.96 tokens/sec, whereas A10G reached 258.22 tokens/sec.

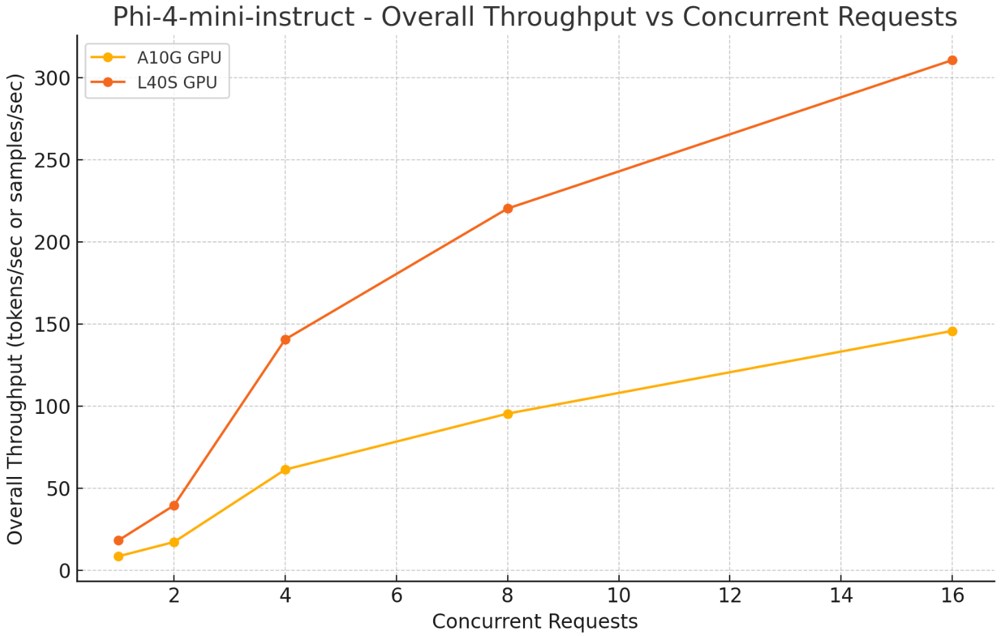

Phi4-mini-instruct

Eventualities examined:

- Concurrent Requests: 1, 2, 8, 16, 32

- Enter Tokens: 500

- Output Tokens: 150

Key Insights:

- Single Concurrent Request: L40S minimize latency per token from 0.02s (A10) to 0.013s and improved general throughput from 56.16 to 85.18 tokens/sec.

- Increased Concurrency (32 concurrent requests): L40S achieved 590.83 tokens/sec throughput with 0.03s latency per token, surpassing A10’s 353.69 tokens/sec.

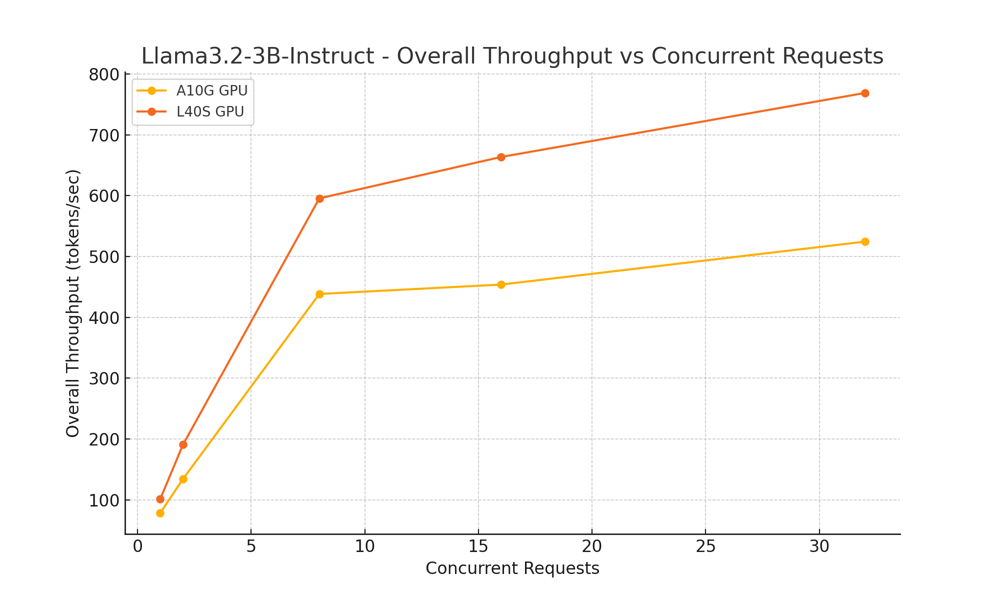

Llama-3.2-3b-instruct

Eventualities Examined:

- Concurrent Requests: 1, 2, 8, 16, 32

- Enter tokens: 500

- Output Tokens: 150

Key Insights:

- Single Concurrent Request: L40S improved latency per token from 0.015s (A10) to 0.012s, with throughput rising from 76.92 to 95.34 tokens/sec.

- Increased Concurrency (32 concurrent requests): L40S delivered 609.58 tokens/sec throughput, outperforming A10’s 476.63 tokens/sec, and diminished latency per token from 0.039s (A10) to 0.027s.

Throughout all examined fashions, the NVIDIA L40S GPU persistently outperformed the A10 in decreasing latency and enhancing throughput.

Whereas the L40S demonstrates robust efficiency enhancements, it’s equally vital to think about components reminiscent of value and useful resource necessities. Upgrading to the L40S might require a better upfront funding, so groups ought to fastidiously consider the trade-offs based mostly on their particular use instances, scale, and funds.

Now, let’s take a more in-depth take a look at how the A10 and L40S examine by way of pricing.

A10 vs L40S: Pricing

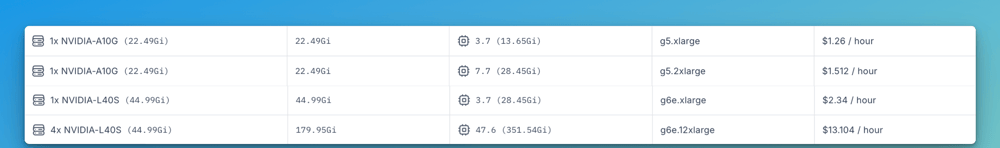

Whereas the L40S is extra highly effective than the A10, it’s additionally considerably dearer to run. Primarily based on Clarifai’s Compute Orchestration pricing, the L40S occasion (g6e.xlarge) prices $2.34 per hour, practically double the price of the A10-equipped occasion (g5.xlarge) at $1.26 per hour.

There are two variants accessible for each A10 and L40S:

- A10 is available in g5.xlarge ($1.26/hour) and g5.2xlarge ($1.512/hour) configurations.

- L40S is available in g6e.xlarge ($2.34/hour) and g6e.12xlarge ($13.104/hour) for bigger workloads.

Selecting the Proper GPU

Choosing between the NVIDIA A10 and L40S relies on your workload calls for and funds issues:

- NVIDIA A10 is well-suited for enterprises searching for an economical GPU able to dealing with blended workloads, together with AI inference, machine studying, {and professional} visualization. Its decrease energy consumption and strong efficiency make it a sensible selection for mainstream functions the place excessive compute energy just isn’t required.

- NVIDIA L40S is designed for organizations working compute-intensive workloads reminiscent of generative AI and LLM inference. With considerably larger efficiency and reminiscence bandwidth, the L40S delivers the scalability wanted for demanding AI and graphics duties, making it a powerful funding for manufacturing environments that require top-tier GPU energy.

Scaling AI Workloads with Flexibility and Reliability

We now have seen the distinction between the A10 and L40S and the way choosing the proper GPU relies on your particular use case and efficiency wants. However the subsequent query is—how do you entry these GPUs in your AI workloads?

One of many rising challenges in AI and machine studying improvement is navigating the worldwide GPU scarcity whereas avoiding dependence on a single cloud supplier. Excessive-demand GPUs just like the L40S, with its superior efficiency, will not be all the time available once you want them. However, whereas the A10 is extra accessible and cost-effective, availability can nonetheless fluctuate relying on the cloud area or supplier.

That is the place Clarifai’s Compute Orchestration is available in. It provides you versatile, on-demand entry to each A10 and L40S GPUs throughout a number of cloud suppliers and personal infrastructure with out locking you right into a single vendor. You possibly can select the cloud supplier and area the place you wish to deploy, reminiscent of AWS, GCP, Azure, Vultr, or Oracle, and run your AI workloads on devoted GPU clusters inside these environments.

Whether or not your workload wants the effectivity of the A10 or the facility of the L40S, Clarifai routes your jobs to the assets you choose whereas optimizing for availability, efficiency, and price. This method helps you keep away from delays brought on by GPU shortages or pricing spikes and offers you the flexibleness to scale your AI initiatives with confidence with out being tied to at least one supplier.

Conclusion

Selecting the best GPU comes right down to understanding your workload necessities and efficiency objectives. The NVIDIA A10 provides an economical choice for blended AI and graphics workloads, whereas the L40S delivers the facility and scalability wanted for demanding duties like generative AI and enormous language fashions. By matching your GPU option to your particular use case, you may obtain the correct stability of efficiency, effectivity, and price.

Clarifai’s Compute Orchestration makes it straightforward to entry each A10 and L40S GPUs throughout a number of cloud suppliers, providing you with the flexibleness to scale with out being restricted by availability or vendor lock-in.

For a breakdown of GPU prices and to check pricing throughout completely different deployment choices, go to the Clarifai Pricing web page. You too can be a part of our Discord channel anytime to attach with consultants, get your questions answered about choosing the proper GPU in your workloads, or get assist optimizing your AI infrastructure.