Meta has launched MobileLLM-R1, a household of light-weight edge reasoning fashions now out there on Hugging Face. The discharge consists of fashions starting from 140M to 950M parameters, with a give attention to environment friendly mathematical, coding, and scientific reasoning at sub-billion scale.

In contrast to general-purpose chat fashions, MobileLLM-R1 is designed for edge deployment, aiming to ship state-of-the-art reasoning accuracy whereas remaining computationally environment friendly.

What structure powers MobileLLM-R1?

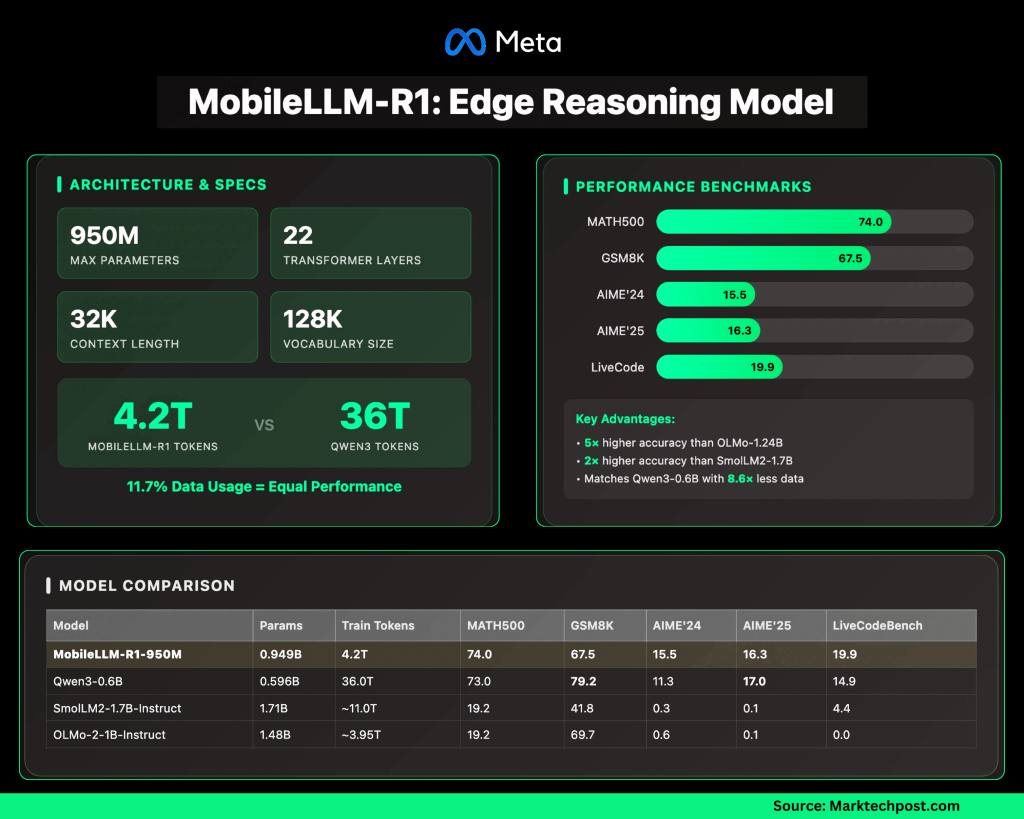

The biggest mannequin, MobileLLM-R1-950M, integrates a number of architectural optimizations:

- 22 Transformer layers with 24 consideration heads and 6 grouped KV heads.

- Embedding dimension: 1536; hidden dimension: 6144.

- Grouped-Question Consideration (GQA) reduces compute and reminiscence.

- Block-wise weight sharing cuts parameter rely with out heavy latency penalties.

- SwiGLU activations enhance small-model illustration.

- Context size: 4K for base, 32K for post-trained fashions.

- 128K vocabulary with shared enter/output embeddings.

The emphasis is on lowering compute and reminiscence necessities, making it appropriate for deployment on constrained gadgets.

How environment friendly is the coaching?

MobileLLM-R1 is notable for information effectivity:

- Skilled on ~4.2T tokens in whole.

- By comparability, Qwen3’s 0.6B mannequin was skilled on 36T tokens.

- This implies MobileLLM-R1 makes use of solely ≈11.7% of the information to succeed in or surpass Qwen3’s accuracy.

- Submit-training applies supervised fine-tuning on math, coding, and reasoning datasets.

This effectivity interprets immediately into decrease coaching prices and useful resource calls for.

How does it carry out in opposition to different open fashions?

On benchmarks, MobileLLM-R1-950M exhibits important features:

- MATH (MATH500 dataset): ~5× greater accuracy than Olmo-1.24B and ~2× greater accuracy than SmolLM2-1.7B.

- Reasoning and coding (GSM8K, AIME, LiveCodeBench): Matches or surpasses Qwen3-0.6B, regardless of utilizing far fewer tokens.

The mannequin delivers outcomes usually related to bigger architectures whereas sustaining a smaller footprint.

The place does MobileLLM-R1 fall quick?

The mannequin’s focus creates limitations:

- Robust in math, code, and structured reasoning.

- Weaker in common dialog, commonsense, and inventive duties in comparison with bigger LLMs.

- Distributed below FAIR NC (non-commercial) license, which restricts utilization in manufacturing settings.

- Longer contexts (32K) increase KV-cache and reminiscence calls for at inference.

How does MobileLLM-R1 evaluate to Qwen3, SmolLM2, and OLMo?

Efficiency snapshot (post-trained fashions):

| Mannequin | Params | Prepare tokens (T) | MATH500 | GSM8K | AIME’24 | AIME’25 | LiveCodeBench |

|---|---|---|---|---|---|---|---|

| MobileLLM-R1-950M | 0.949B | 4.2 | 74.0 | 67.5 | 15.5 | 16.3 | 19.9 |

| Qwen3-0.6B | 0.596B | 36.0 | 73.0 | 79.2 | 11.3 | 17.0 | 14.9 |

| SmolLM2-1.7B-Instruct | 1.71B | ~11.0 | 19.2 | 41.8 | 0.3 | 0.1 | 4.4 |

| OLMo-2-1B-Instruct | 1.48B | ~3.95 | 19.2 | 69.7 | 0.6 | 0.1 | 0.0 |

Key observations:

- R1-950M matches Qwen3-0.6B in math (74.0 vs 73.0) whereas requiring ~8.6× fewer tokens.

- Efficiency gaps vs SmolLM2 and OLMo are substantial throughout reasoning duties.

- Qwen3 maintains an edge in GSM8K, however the distinction is small in comparison with the coaching effectivity benefit.

Abstract

Meta’s MobileLLM-R1 underscores a development towards smaller, domain-optimized fashions that ship aggressive reasoning with out huge coaching budgets. By reaching 2×–5× efficiency features over bigger open fashions whereas coaching on a fraction of the information, it demonstrates that effectivity—not simply scale—will outline the subsequent part of LLM deployment, particularly for math, coding, and scientific use circumstances on edge gadgets.

Try the Mannequin on Hugging Face. Be at liberty to take a look at our GitHub Web page for Tutorials, Codes and Notebooks. Additionally, be at liberty to observe us on Twitter and don’t overlook to hitch our 100k+ ML SubReddit and Subscribe to our Publication.

Asif Razzaq is the CEO of Marktechpost Media Inc.. As a visionary entrepreneur and engineer, Asif is dedicated to harnessing the potential of Synthetic Intelligence for social good. His most up-to-date endeavor is the launch of an Synthetic Intelligence Media Platform, Marktechpost, which stands out for its in-depth protection of machine studying and deep studying information that’s each technically sound and simply comprehensible by a large viewers. The platform boasts of over 2 million month-to-month views, illustrating its reputation amongst audiences.