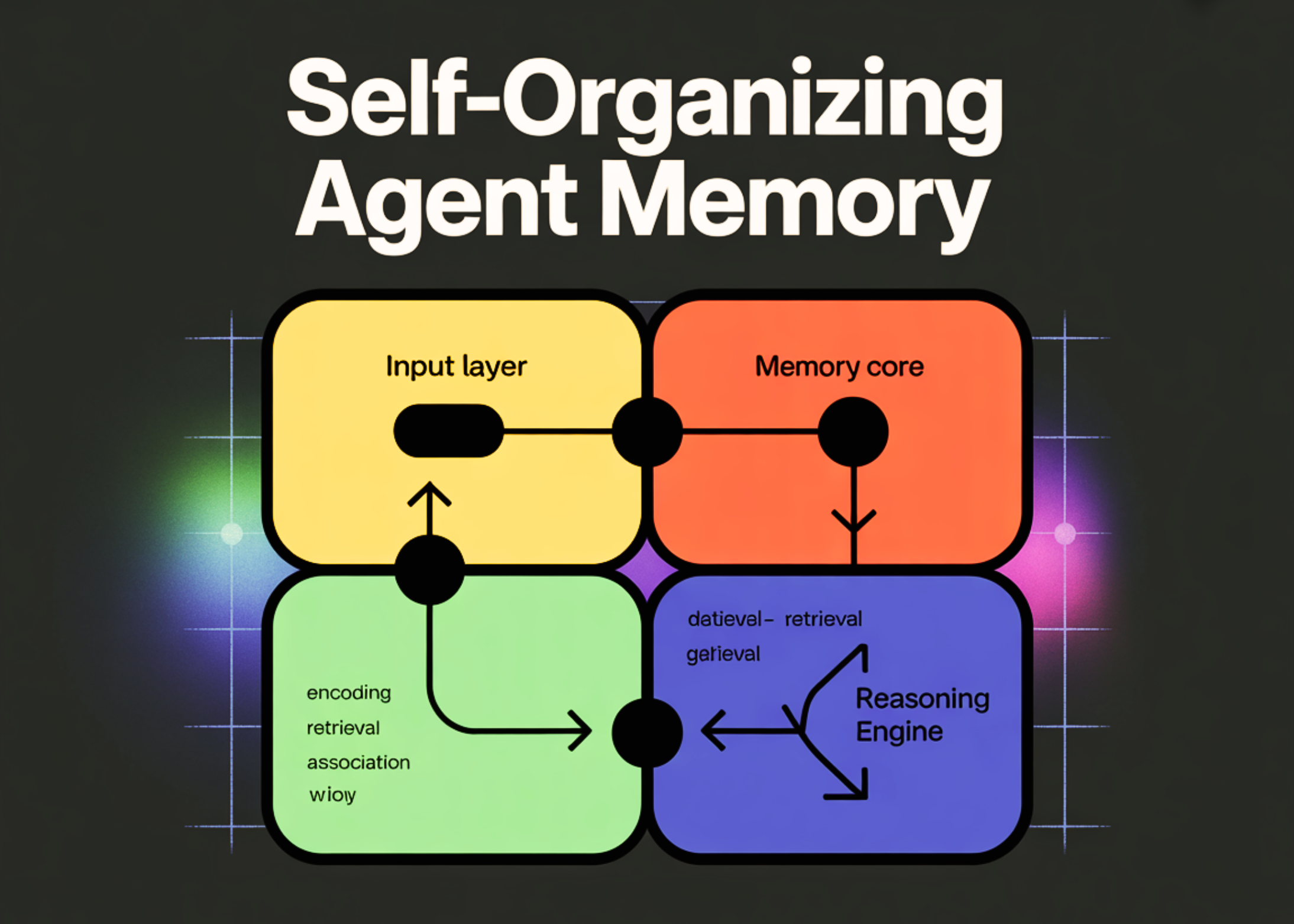

In this tutorial, we build a self-organizing memory system for an agent that goes beyond storing raw conversation history and instead structures interactions into persistent, meaningful knowledge units. We design the system so that reasoning and memory management are clearly separated: a dedicated component extracts, compresses, and organizes information, while the main agent focuses on responding to the user. We use structured storage with SQLite, scene-based grouping, and summary consolidation, and we show how an agent can maintain useful context over long horizons without relying on opaque vector-only retrieval.

```python
import sqlite3
import json
import re
from datetime import datetime
from typing import List, Dict
from getpass import getpass

from openai import OpenAI

# Read the API key interactively so it never appears in the notebook source
OPENAI_API_KEY = getpass("Enter your OpenAI API key: ").strip()

client = OpenAI(api_key=OPENAI_API_KEY)

def llm(prompt, temperature=0.1, max_tokens=500):
    """Send a single-turn prompt to the model and return the stripped text reply."""
    return client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[{"role": "user", "content": prompt}],
        temperature=temperature,
        max_tokens=max_tokens
    ).choices[0].message.content.strip()
```

We set up the core runtime by importing all required libraries and securely collecting the API key at execution time. We initialize the language model client and define a single helper function that standardizes all model calls, so every downstream component relies on this shared interface for consistent generation behavior.
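Because every component calls the model through `llm`, the pipeline can be exercised offline by swapping in a deterministic stand-in. The sketch below is our own assumption rather than part of the tutorial: `fake_llm` and its canned replies are hypothetical, keyed on phrases from the extraction and summary prompts, and only mimic the shapes those prompts expect (a JSON array of cells, or plain text).

```python
import json

def fake_llm(prompt: str, temperature: float = 0.1, max_tokens: int = 500) -> str:
    """Hypothetical offline stand-in with the same signature as llm()."""
    if "structured memory cells" in prompt:
        # Shape expected by MemoryManager.extract_cells: a JSON array of cells
        return json.dumps([{
            "scene": "project",
            "cell_type": "fact",
            "salience": 0.9,
            "content": "User is building a long-term memory agent",
        }])
    if "Summarize this memory scene" in prompt:
        # Shape expected by MemoryManager.consolidate_scene: a short summary
        return "The user is building an agent with long-term project memory."
    # Anything else is treated as an ordinary chat reply
    return "Understood."
```

To use it, rebind the name with `llm = fake_llm` after defining both functions; the classes below look up `llm` as a module-level global at call time, so the swap takes effect without changing them.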

```python
class MemoryDB:
    def __init__(self):
        # In-memory SQLite database; pass a file path instead to keep data between runs
        self.db = sqlite3.connect(":memory:")
        # Rows support access by column name, e.g. row["scene"]
        self.db.row_factory = sqlite3.Row
        self._init_schema()

    def _init_schema(self):
        # Atomic memory units extracted from each interaction
        self.db.execute("""
            CREATE TABLE mem_cells (
                id INTEGER PRIMARY KEY,
                scene TEXT,
                cell_type TEXT,
                salience REAL,
                content TEXT,
                created_at TEXT
            )
        """)
        # One consolidated summary per scene (topic)
        self.db.execute("""
            CREATE TABLE mem_scenes (
                scene TEXT PRIMARY KEY,
                summary TEXT,
                updated_at TEXT
            )
        """)
        # FTS5 index for lexical (symbolic) retrieval over cell content
        self.db.execute("""
            CREATE VIRTUAL TABLE mem_cells_fts
            USING fts5(content, scene, cell_type)
        """)

    def insert_cell(self, cell):
        content = json.dumps(cell["content"])
        self.db.execute(
            "INSERT INTO mem_cells VALUES(NULL,?,?,?,?,?)",
            (
                cell["scene"],
                cell["cell_type"],
                cell["salience"],
                content,
                datetime.utcnow().isoformat()
            )
        )
        # Mirror the cell into the full-text index so it can be searched
        self.db.execute(
            "INSERT INTO mem_cells_fts VALUES(?,?,?)",
            (content, cell["scene"], cell["cell_type"])
        )
        self.db.commit()
```

We define a structured memory database that stores information across interactions. Because it uses SQLite's `:memory:` mode, the data lasts only as long as the Python process; passing a file path to `sqlite3.connect` would keep it between runs. We create tables for atomic memory units (cells), higher-level scenes, and a full-text search index that enables symbolic retrieval. We also implement the logic to insert new memory entries in a normalized, queryable form.
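To see what the `mem_cells_fts` virtual table provides on its own, here is a small standalone sketch that builds the same FTS5 column layout and runs a `MATCH` query. The table name `cells_fts`, the helper `search`, and the sample rows are invented for illustration, and the sketch assumes your Python's SQLite build includes FTS5, as most standard distributions do.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
conn.row_factory = sqlite3.Row
# Same FTS5 layout as mem_cells_fts: every column is full-text indexed
conn.execute("CREATE VIRTUAL TABLE cells_fts USING fts5(content, scene, cell_type)")

# Hypothetical sample cells; content is JSON-encoded just as insert_cell stores it
samples = [
    ("agent remembers projects long term", "project_memory", "fact"),
    ("group conversations into topics", "organization", "plan"),
]
conn.executemany(
    "INSERT INTO cells_fts VALUES(?,?,?)",
    [(json.dumps(text), scene, kind) for text, scene, kind in samples],
)

def search(query: str):
    """Return the scenes of all rows that match an FTS5 query string."""
    rows = conn.execute(
        "SELECT scene FROM cells_fts WHERE cells_fts MATCH ?", (query,)
    ).fetchall()
    return [r["scene"] for r in rows]

print(search("topics"))                        # -> ['organization']
print(sorted(search("projects OR topics")))    # -> ['organization', 'project_memory']
```

Matching is token-based and exact by default (no stemming), so `projects` does not match `project`; that is the trade-off of lexical retrieval compared with embeddings.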

```python
    # ...continuing the MemoryDB class
    def get_scene(self, scene):
        return self.db.execute(
            "SELECT * FROM mem_scenes WHERE scene=?", (scene,)
        ).fetchone()

    def upsert_scene(self, scene, summary):
        # Insert a new scene summary, or overwrite the existing one
        self.db.execute("""
            INSERT INTO mem_scenes VALUES(?,?,?)
            ON CONFLICT(scene) DO UPDATE SET
                summary=excluded.summary,
                updated_at=excluded.updated_at
        """, (scene, summary, datetime.utcnow().isoformat()))
        self.db.commit()

    def retrieve_scene_context(self, query, limit=6):
        # Keep only alphanumeric tokens so punctuation cannot break FTS5 syntax
        tokens = re.findall(r"[a-zA-Z0-9]+", query)
        if not tokens:
            return []
        # Quote each token so words like AND/OR/NOT/NEAR are matched literally
        fts_query = " OR ".join(f'"{t}"' for t in tokens)
        rows = self.db.execute("""
            SELECT scene, content FROM mem_cells_fts
            WHERE mem_cells_fts MATCH ?
            LIMIT ?
        """, (fts_query, limit)).fetchall()
        # Fall back to the most salient cells when nothing matches lexically
        if not rows:
            rows = self.db.execute("""
                SELECT scene, content FROM mem_cells
                ORDER BY salience DESC
                LIMIT ?
            """, (limit,)).fetchall()
        return rows

    def retrieve_scene_summary(self, scene):
        row = self.get_scene(scene)
        return row["summary"] if row else ""
```

We handle memory retrieval and scene maintenance. We make full-text search safe by sanitizing user queries, and we add a fallback that returns the most salient cells when no lexical matches are found. We also expose helper methods that fetch consolidated scene summaries for long-horizon context building.
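Sanitizing matters because FTS5 gives special meaning to the uppercase words `AND`, `OR`, `NOT`, and `NEAR`, and to characters such as `"`, `*`, `:`, and parentheses, so raw user text passed to `MATCH` can raise a syntax error. The sketch below uses a hypothetical helper, `to_fts_query`, that extracts alphanumeric tokens the same way the tutorial does and also wraps each token in double quotes, so a word like `NOT` is searched as a plain term instead of being parsed as an operator.

```python
import re
import sqlite3

def to_fts_query(text: str) -> str:
    """Hypothetical helper: turn free text into a safe FTS5 OR-query."""
    tokens = re.findall(r"[a-zA-Z0-9]+", text)
    # Double-quoted strings are literal terms in FTS5, never operators
    return " OR ".join(f'"{t}"' for t in tokens)

conn = sqlite3.connect(":memory:")
conn.execute("CREATE VIRTUAL TABLE t USING fts5(content)")
conn.execute("INSERT INTO t VALUES ('do NOT forget the deadline')")

raw = "NOT forget"  # unquoted, FTS5 parses NOT as a binary operator
try:
    conn.execute("SELECT * FROM t WHERE t MATCH ?", (raw,)).fetchall()
    raw_ok = True
except sqlite3.OperationalError:
    raw_ok = False

safe = to_fts_query(raw)
hits = conn.execute("SELECT content FROM t WHERE t MATCH ?", (safe,)).fetchall()
print(safe)       # -> "NOT" OR "forget"
print(raw_ok)     # -> False
print(len(hits))  # -> 1
```

An empty result from `to_fts_query` should still be checked before querying, as `retrieve_scene_context` already does, because an empty `MATCH` string is not a valid FTS5 query.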

```python
class MemoryManager:
    def __init__(self, db: MemoryDB):
        self.db = db

    def extract_cells(self, user, assistant) -> List[Dict]:
        prompt = f"""
        Convert this interaction into structured memory cells.
        Return only a JSON array of objects containing:
        - scene
        - cell_type (fact, plan, preference, decision, task, risk)
        - salience (0-1)
        - content (compressed, factual)

        User: {user}
        Assistant: {assistant}
        """
        raw = llm(prompt)
        # Strip Markdown code fences the model may wrap around its JSON
        raw = re.sub(r"```json|```", "", raw)
        try:
            cells = json.loads(raw)
        except json.JSONDecodeError:
            return []
        if not isinstance(cells, list):
            return []
        # Drop malformed entries so later steps never hit a KeyError
        required = {"scene", "cell_type", "salience", "content"}
        return [c for c in cells if isinstance(c, dict) and required.issubset(c)]

    def consolidate_scene(self, scene):
        rows = self.db.db.execute(
            "SELECT content FROM mem_cells WHERE scene=? ORDER BY salience DESC",
            (scene,)
        ).fetchall()
        if not rows:
            return
        cells = [json.loads(r["content"]) for r in rows]
        prompt = f"""
        Summarize this memory scene in under 100 words.
        Keep it stable and reusable for future reasoning.

        Cells:
        {cells}
        """
        summary = llm(prompt, temperature=0.05)
        self.db.upsert_scene(scene, summary)

    def update(self, user, assistant):
        cells = self.extract_cells(user, assistant)
        for cell in cells:
            self.db.insert_cell(cell)
        # Re-summarize every scene touched by this interaction
        for scene in set(c["scene"] for c in cells):
            self.consolidate_scene(scene)
```

We implement the dedicated memory management component responsible for structuring experience. We extract compact memory representations from each interaction, store them, and re-consolidate every affected scene into a stable summary. The update runs only after the agent has produced its reply, so memory evolves incrementally without altering the response itself.
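The extraction step is only as reliable as the model's JSON. Here is a standalone sketch of a stricter parser; the name `parse_cells` and the clamping rule are our own assumptions, not part of the tutorial. It strips an optional code fence, keeps only objects that carry the four expected keys, coerces `salience` to a float, and clamps it to the 0 to 1 range the prompt asks for.

```python
import json
import re
from typing import Dict, List

REQUIRED = {"scene", "cell_type", "salience", "content"}
# Matches an opening fence (optionally tagged json) or a closing fence
FENCE = re.compile(r"`{3}(?:json)?")

def parse_cells(raw: str) -> List[Dict]:
    """Hypothetical helper: validate LLM output into a list of memory cells."""
    try:
        data = json.loads(FENCE.sub("", raw))
    except json.JSONDecodeError:
        return []
    if not isinstance(data, list):
        return []
    cells = []
    for item in data:
        if not isinstance(item, dict) or not REQUIRED.issubset(item):
            continue  # skip malformed entries instead of failing later
        try:
            salience = float(item["salience"])
        except (TypeError, ValueError):
            continue  # salience must be numeric
        # Override salience in place with the clamped value
        cells.append({**item, "salience": min(max(salience, 0.0), 1.0)})
    return cells

fence = "`" * 3
raw = fence + 'json\n[{"scene": "project", "cell_type": "fact", "salience": 1.4, "content": "x"}, {"scene": "a"}]\n' + fence
print(parse_cells(raw))  # -> [{'scene': 'project', 'cell_type': 'fact', 'salience': 1.0, 'content': 'x'}]
```

Calling it from `extract_cells` in place of the inline parsing would be one way to adopt it; the design choice is to discard bad cells quietly rather than raise, since a missed memory is cheaper than a crashed turn.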

```python
class WorkerAgent:
    def __init__(self, db: MemoryDB, mem_manager: MemoryManager):
        self.db = db
        self.mem_manager = mem_manager

    def reply(self, user_input):
        # 1. Recall cells relevant to the new message
        recalled = self.db.retrieve_scene_context(user_input)
        scenes = set(r["scene"] for r in recalled)
        # 2. Use each recalled scene's consolidated summary as context
        summaries = "\n".join(
            f"[{scene}]\n{self.db.retrieve_scene_summary(scene)}"
            for scene in scenes
        )
        prompt = f"""
        You are an intelligent agent with long-term memory.

        Relevant memory:
        {summaries}

        User: {user_input}
        """
        # 3. Generate the reply, then hand the exchange to the memory manager
        assistant_reply = llm(prompt)
        self.mem_manager.update(user_input, assistant_reply)
        return assistant_reply

db = MemoryDB()
memory_manager = MemoryManager(db)
agent = WorkerAgent(db, memory_manager)

print(agent.reply("We're building an agent that remembers projects long term."))
print(agent.reply("It should organize conversations into topics automatically."))
print(agent.reply("This memory system should support future reasoning."))

# Inspect the consolidated scene summaries
for row in db.db.execute("SELECT * FROM mem_scenes"):
    print(dict(row))
```

We define the worker agent that performs reasoning while remaining memory-aware. We retrieve relevant scenes, assemble their summaries as context, and generate responses grounded in long-term knowledge. We then close the loop by passing each interaction back to the memory manager, so the stored memory keeps growing and being re-consolidated with every turn.
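The context-assembly step inside `reply` can be isolated as a pure function, which makes it easy to test without calling a model. In this sketch the name `build_memory_context` is hypothetical, and `get_summary` stands in for `MemoryDB.retrieve_scene_summary`. It deduplicates scenes with `dict.fromkeys` rather than `set`, so scenes appear in the order retrieval returned them instead of in arbitrary set order, and it skips scenes that have no stored summary yet.

```python
from typing import Callable, Iterable

def build_memory_context(
    recalled: Iterable,               # rows from retrieval (sqlite3.Row or dict)
    get_summary: Callable[[str], str],
) -> str:
    """Hypothetical helper: format one summary block per recalled scene."""
    # dict.fromkeys keeps first-seen order while dropping duplicate scenes
    scenes = dict.fromkeys(row["scene"] for row in recalled)
    blocks = []
    for scene in scenes:
        summary = get_summary(scene)
        if summary:  # skip scenes that have not been consolidated yet
            blocks.append(f"[{scene}]\n{summary}")
    return "\n".join(blocks)

rows = [{"scene": "project"}, {"scene": "topics"}, {"scene": "project"}]
summaries = {"project": "Building a memory agent.", "topics": "Auto-grouping chats."}
print(build_memory_context(rows, lambda s: summaries.get(s, "")))
```

Deterministic ordering keeps the prompt stable across runs, which makes the agent's behavior easier to debug and compare.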

In this tutorial, we demonstrated how an agent can actively curate its own memory and turn past interactions into stable, reusable knowledge rather than ephemeral chat logs. We enabled memory to evolve through consolidation and selective recall, which supports more consistent and grounded reasoning across turns, and across sessions once the database is stored in a file rather than in `:memory:`. This approach provides a practical foundation for building long-lived agentic systems, and it can be naturally extended with mechanisms for forgetting, richer relational memory, or graph-based orchestration as the system grows in complexity.
