On this tutorial, we exhibit how you can transfer past static, code-heavy charts and construct a genuinely interactive exploratory knowledge evaluation workflow instantly utilizing PyGWalker. We begin by getting ready the Titanic dataset for large-scale interactive querying. These analysis-ready engineered options reveal the underlying construction of the info whereas enabling each detailed row-level exploration and high-level aggregated views for deeper perception. Embedding a Tableau-style drag-and-drop interface instantly within the pocket book allows fast speculation testing, intuitive cohort comparisons, and environment friendly data-quality inspection, all with out the friction of switching between code and visualization instruments.

import sys, subprocess, json, math, os

from pathlib import Path

def pip_install(pkgs):

subprocess.check_call([sys.executable, "-m", "pip", "install", "-q"] + pkgs)

pip_install([

"pygwalker>=0.4.9",

"duckdb>=0.10.0",

"pandas>=2.0.0",

"numpy>=1.24.0",

"seaborn>=0.13.0"

])

import numpy as np

import pandas as pd

import seaborn as sns

df_raw = sns.load_dataset("titanic").copy()

print("Uncooked form:", df_raw.form)

show(df_raw.head(3))We arrange a clear and reproducible Colab surroundings by putting in all required dependencies for interactive EDA. We load the Titanic dataset and carry out an preliminary sanity test to grasp its uncooked construction and scale. It establishes a secure basis earlier than any transformation or visualization begins.

def make_safe_bucket(collection, bins=None, labels=None, q=None, prefix="bucket"):

s = pd.to_numeric(collection, errors="coerce")

if q just isn't None:

strive:

cuts = pd.qcut(s, q=q, duplicates="drop")

return cuts.astype("string").fillna("Unknown")

besides Exception:

go

if bins just isn't None:

cuts = pd.minimize(s, bins=bins, labels=labels, include_lowest=True)

return cuts.astype("string").fillna("Unknown")

return s.astype("float64")

def preprocess_titanic_advanced(df):

out = df.copy()

out.columns = [c.strip().lower().replace(" ", "_") for c in out.columns]

for c in ["survived", "pclass", "sibsp", "parch"]:

if c in out.columns:

out[c] = pd.to_numeric(out[c], errors="coerce").fillna(-1).astype("int64")

if "age" in out.columns:

out["age"] = pd.to_numeric(out["age"], errors="coerce").astype("float64")

out["age_is_missing"] = out["age"].isna()

out["age_bucket"] = make_safe_bucket(

out["age"],

bins=[0, 12, 18, 30, 45, 60, 120],

labels=["child", "teen", "young_adult", "adult", "mid_age", "senior"],

)

if "fare" in out.columns:

out["fare"] = pd.to_numeric(out["fare"], errors="coerce").astype("float64")

out["fare_is_missing"] = out["fare"].isna()

out["log_fare"] = np.log1p(out["fare"].fillna(0))

out["fare_bucket"] = make_safe_bucket(out["fare"], q=8)

for c in ["sex", "class", "who", "embarked", "alone", "adult_male"]:

if c in out.columns:

out[c] = out[c].astype("string").fillna("Unknown")

if "cabin" in out.columns:

out["deck"] = out["cabin"].astype("string").str.strip().str[0].fillna("Unknown")

out["deck_is_missing"] = out["cabin"].isna()

else:

out["deck"] = "Unknown"

out["deck_is_missing"] = True

if "ticket" in out.columns:

t = out["ticket"].astype("string")

out["ticket_len"] = t.str.len().fillna(0).astype("int64")

out["ticket_has_alpha"] = t.str.accommodates(r"[A-Za-z]", regex=True, na=False)

out["ticket_prefix"] = t.str.extract(r"^([A-Za-z./s]+)", broaden=False).fillna("None").str.strip()

out["ticket_prefix"] = out["ticket_prefix"].exchange("", "None").astype("string")

if "sibsp" in out.columns and "parch" in out.columns:

out["family_size"] = (out["sibsp"] + out["parch"] + 1).astype("int64")

out["is_alone"] = (out["family_size"] == 1)

if "title" in out.columns:

title = out["name"].astype("string").str.extract(r",s*([^.]+).", broaden=False).fillna("Unknown").str.strip()

vc = title.value_counts(dropna=False)

maintain = set(vc[vc >= 15].index.tolist())

out["title"] = title.the place(title.isin(maintain), different="Uncommon").astype("string")

else:

out["title"] = "Unknown"

out["segment"] = (

out["sex"].fillna("Unknown").astype("string")

+ " | "

+ out["class"].fillna("Unknown").astype("string")

+ " | "

+ out["age_bucket"].fillna("Unknown").astype("string")

)

for c in out.columns:

if out[c].dtype == bool:

out[c] = out[c].astype("int64")

if out[c].dtype == "object":

out[c] = out[c].astype("string")

return out

df = preprocess_titanic_advanced(df_raw)

print("Prepped form:", df.form)

show(df.head(3))We give attention to superior preprocessing and have engineering to transform the uncooked knowledge into an analysis-ready type. We create sturdy, DuckDB-safe options akin to buckets, segments, and engineered categorical indicators that improve downstream exploration. We make sure the dataset is secure, expressive, and appropriate for interactive querying.

def data_quality_report(df):

rows = []

n = len(df)

for c in df.columns:

s = df[c]

miss = int(s.isna().sum())

miss_pct = (miss / n * 100.0) if n else 0.0

nunique = int(s.nunique(dropna=True))

dtype = str(s.dtype)

pattern = s.dropna().head(3).tolist()

rows.append({

"col": c,

"dtype": dtype,

"lacking": miss,

"missing_%": spherical(miss_pct, 2),

"nunique": nunique,

"sample_values": pattern

})

return pd.DataFrame(rows).sort_values(["missing", "nunique"], ascending=[False, False])

dq = data_quality_report(df)

show(dq.head(20))

RANDOM_SEED = 42

MAX_ROWS_FOR_UI = 200_000

df_for_ui = df

if len(df_for_ui) > MAX_ROWS_FOR_UI:

df_for_ui = df_for_ui.pattern(MAX_ROWS_FOR_UI, random_state=RANDOM_SEED).reset_index(drop=True)

agg = (

df.groupby(["segment", "deck", "embarked"], dropna=False)

.agg(

n=("survived", "dimension"),

survival_rate=("survived", "imply"),

avg_fare=("fare", "imply"),

avg_age=("age", "imply"),

)

.reset_index()

)

for c in ["survival_rate", "avg_fare", "avg_age"]:

agg[c] = agg[c].astype("float64")

Path("/content material").mkdir(dad and mom=True, exist_ok=True)

df_for_ui.to_csv("/content material/titanic_prepped_for_ui.csv", index=False)

agg.to_csv("/content material/titanic_agg_segment_deck_embarked.csv", index=False)We consider knowledge high quality and generate a structured overview of missingness, cardinality, and knowledge sorts. We put together each a row-level dataset and an aggregated cohort-level desk to help quick comparative evaluation. The twin illustration permits us to discover detailed patterns and high-level developments concurrently.

import pygwalker as pyg

SPEC_PATH = Path("/content material/pygwalker_spec_titanic.json")

def load_spec(path):

if path.exists():

strive:

return json.masses(path.read_text())

besides Exception:

return None

return None

def save_spec(path, spec_obj):

strive:

if isinstance(spec_obj, str):

spec_obj = json.masses(spec_obj)

path.write_text(json.dumps(spec_obj, indent=2))

return True

besides Exception:

return False

def launch_pygwalker(df, spec_path):

spec = load_spec(spec_path)

kwargs = {}

if spec just isn't None:

kwargs["spec"] = spec

strive:

walker = pyg.stroll(df, use_kernel_calc=True, **kwargs)

besides TypeError:

walker = pyg.stroll(df, **kwargs) if spec just isn't None else pyg.stroll(df)

captured = None

for attr in ["spec", "_spec"]:

if hasattr(walker, attr):

strive:

captured = getattr(walker, attr)

break

besides Exception:

go

for meth in ["to_spec", "export_spec", "get_spec"]:

if captured is None and hasattr(walker, meth):

strive:

captured = getattr(walker, meth)()

break

besides Exception:

go

if captured just isn't None:

save_spec(spec_path, captured)

return walker

walker_rows = launch_pygwalker(df_for_ui, SPEC_PATH)

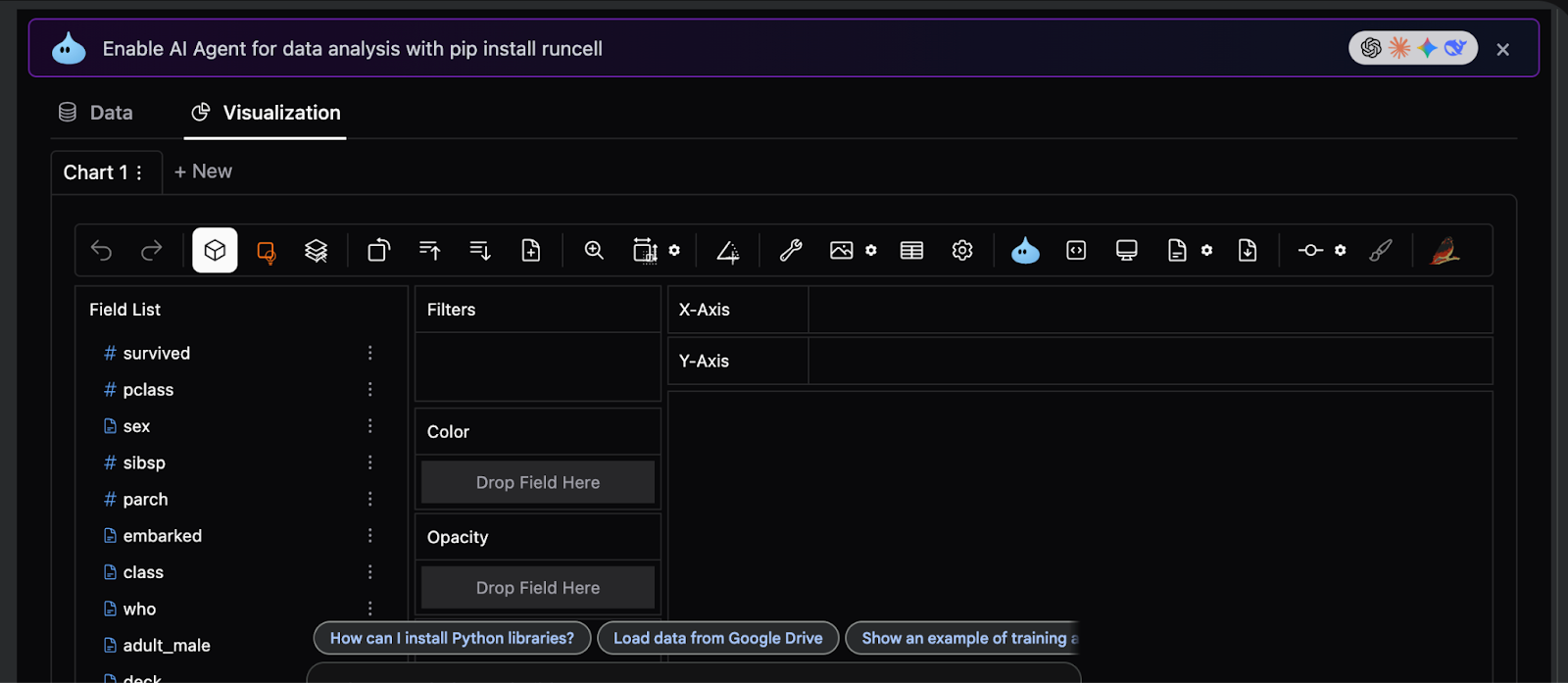

walker_agg = pyg.stroll(agg)We combine PyGWalker to remodel our ready tables into a totally interactive, drag-and-drop analytical interface. We persist the visualization specification in order that dashboard layouts and encodings survive pocket book reruns. It turns the pocket book right into a reusable, BI-style exploration surroundings.

HTML_PATH = Path("/content material/pygwalker_titanic_dashboard.html")

def export_html_best_effort(df, spec_path, out_path):

spec = load_spec(spec_path)

html = None

strive:

html = pyg.stroll(df, spec=spec, return_html=True) if spec just isn't None else pyg.stroll(df, return_html=True)

besides Exception:

html = None

if html is None:

for fn in ["to_html", "export_html"]:

if hasattr(pyg, fn):

strive:

f = getattr(pyg, fn)

html = f(df, spec=spec) if spec just isn't None else f(df)

break

besides Exception:

proceed

if html is None:

return None

if not isinstance(html, str):

html = str(html)

out_path.write_text(html, encoding="utf-8")

return out_path

export_html_best_effort(df_for_ui, SPEC_PATH, HTML_PATH)We lengthen the workflow by exporting the interactive dashboard as a standalone HTML artifact. We make sure the evaluation will be shared or reviewed with out requiring a Python surroundings or Colab session. It completes the pipeline from uncooked knowledge to distributable, interactive perception.

In conclusion, we established a sturdy sample for superior EDA that scales far past the Titanic dataset whereas remaining absolutely notebook-native. We confirmed how cautious preprocessing, kind security, and have design enable PyGWalker to function reliably on complicated knowledge, and the way combining detailed information with aggregated summaries unlocks highly effective analytical workflows. As a substitute of treating visualization as an afterthought, we used it as a first-class interactive layer, permitting us to iterate, validate assumptions, and extract insights in actual time.

Try the Full Codes right here. Additionally, be happy to observe us on Twitter and don’t overlook to affix our 100k+ ML SubReddit and Subscribe to our E-newsletter. Wait! are you on telegram? now you possibly can be part of us on telegram as properly.