Introduction

Scaling AI workloads is no longer optional; it is a necessity in a world where user expectations and data volumes are accelerating. Whether you’re deploying a computer vision model at the edge or orchestrating large-scale language models in the cloud, you must ensure your infrastructure can grow seamlessly. Vertical scaling (scale up) and horizontal scaling (scale out) are the two classic strategies for expansion, but many engineering teams struggle to decide which approach better suits their needs. As a market leader in AI, Clarifai often works with customers who ask, “How should we scale our AI models effectively without breaking the bank or sacrificing performance?”

This comprehensive guide explains the fundamental differences between vertical and horizontal scaling, highlights their advantages and limitations, and explores hybrid strategies to help you make an informed decision. We’ll integrate insights from academic research, industry best practices and real-world case studies, and we’ll highlight how Clarifai’s compute orchestration, model inference, and local runners can support your scaling journey.

Quick Digest

- Scalability is the ability of a system to handle increasing load while maintaining performance and availability. It’s vital for AI applications that must support growth in data and users.

- Vertical scaling increases the resources (CPU, RAM, storage) of a single server, offering simplicity and immediate performance improvements, but it is limited by hardware ceilings and single points of failure.

- Horizontal scaling adds more servers to distribute workload, improving fault tolerance and concurrency, though it introduces complexity and network overhead.

- Decision factors include workload type, growth projections, cost, architectural complexity and regulatory requirements.

- Hybrid (diagonal) scaling combines both approaches, scaling up until hardware limits are reached and then scaling out.

- Emerging trends: AI-driven predictive autoscaling using hybrid models, Kubernetes Horizontal and Vertical Pod Autoscalers, serverless scaling, and green computing all shape the future of scalability.

Introduction to Scalability and Scaling Strategies

Quick Summary: What is scalability, and why does it matter?

Scalability refers to a system’s capability to handle increasing load while maintaining performance, making it crucial for AI workloads that grow rapidly. Without scalability, your application may experience latency spikes or failures, eroding user trust and causing financial losses.

What Does Scalability Mean?

Scalability is the property of a system to adapt its resources in response to changing workload demands. In simple terms, if more users request predictions from your image classifier, the infrastructure should automatically handle the additional requests without slowing down. This is different from performance tuning, which optimises a system’s baseline efficiency but doesn’t necessarily prepare it for surges in demand. Scalability is a continuous discipline, crucial for high-availability AI services.

Key reasons for scaling include handling increased user load, maintaining performance and ensuring reliability. Research highlights that scaling helps support growing data and storage needs and ensures better user experiences. For instance, an AI model that processes millions of transactions per second demands infrastructure that can scale in both compute and storage to avoid bottlenecks and downtime.

Why Scaling Matters for AI Applications

AI applications often handle variable workloads, ranging from sporadic spikes in inference requests to continuous heavy training loads. Without proper scaling, these workloads may cause performance degradation or outages. According to a survey on hyperscale data centres, the combined use of vertical and horizontal scaling dramatically increases energy utilisation. This means organisations must consider not only performance but also sustainability.

For Clarifai’s customers, scaling is particularly important because model inference and training workloads can be unpredictable, especially when models are integrated into third-party systems or consumer apps. Clarifai’s compute orchestration features help users manage resources efficiently by leveraging auto-scaling groups and container orchestration, ensuring models remain responsive even as demand fluctuates.

Expert Insights

- Infrastructure experts emphasise that scalability should be designed in from day one, not bolted on later. They warn that retrofitting scaling features often incurs significant technical debt.

- Research on green computing notes that combining vertical and horizontal scaling dramatically increases power consumption, highlighting the need for sustainability practices.

- Clarifai engineers recommend monitoring usage patterns and gradually introducing horizontal and vertical scaling based on application requirements, rather than choosing one approach by default.

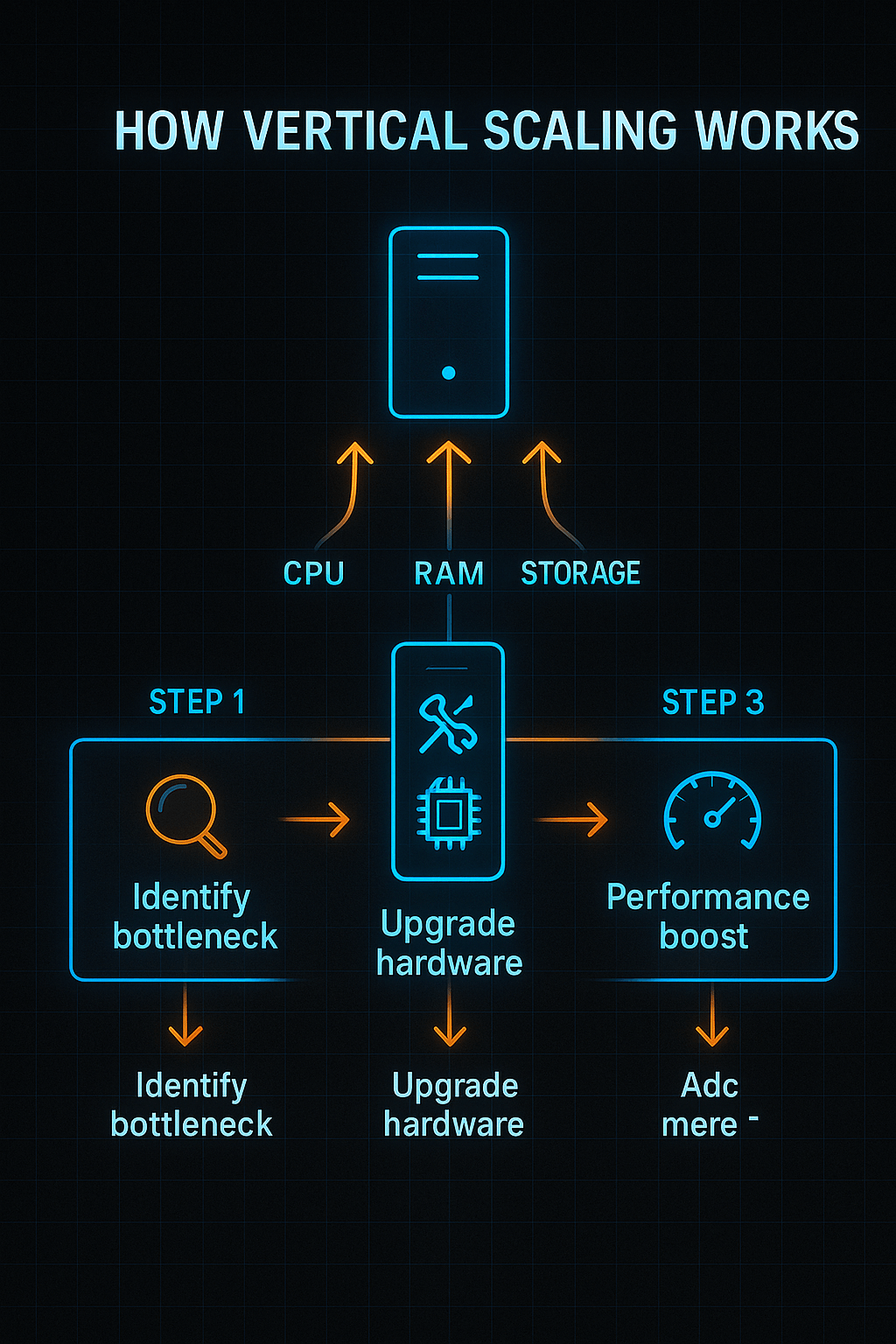

Understanding Vertical Scaling (Scaling Up)

Quick Summary: What is vertical scaling?

Vertical scaling increases the resources (CPU, RAM, storage) of a single server or node, providing an immediate performance boost but ultimately limited by hardware constraints and potential downtime.

What Is Vertical Scaling?

Vertical scaling, also known as scaling up, means augmenting the capacity of a single machine. You can add more CPU cores, increase memory, upgrade to faster storage, or move the workload to a more powerful server. For cloud workloads, this often involves resizing an instance to a larger instance type, such as upgrading from a medium GPU instance to a larger, high-performance GPU instance.

Vertical scaling is straightforward because it doesn’t require rewriting the application architecture. Database administrators often scale up database servers for quick performance gains; AI teams may expand GPU memory when training large language models. Because you only upgrade one machine, vertical scaling preserves data locality and reduces network overhead, resulting in lower latency for certain workloads.

Benefits of Vertical Scaling

- Simplicity and ease of implementation: You don’t need to add new nodes or handle distributed systems complexity. Upgrading memory on your local Clarifai model runner may yield immediate performance benefits.

- No need to modify application architecture: Vertical scaling keeps your single-node design intact, which suits legacy systems or monolithic AI services.

- Faster interprocess communication: All components run on the same hardware, so there are no network hops; this can reduce latency for training and inference tasks.

- Better data consistency: Single-node architectures avoid replication lag, making vertical scaling ideal for stateful workloads that require strong consistency.

Limitations of Vertical Scaling

- Hardware limitations: There is a cap on the CPU, memory and storage you can add, often called the hardware ceiling. Once you reach the maximum supported resources, vertical scaling is no longer viable.

- Single point of failure: A vertically scaled system still runs on one machine; if the server goes down, your application goes offline.

- Downtime for upgrades: Hardware upgrades often require maintenance windows, leading to downtime or degraded performance during scaling operations.

- Cost escalation: High-end hardware becomes disproportionately more expensive as you scale; purchasing top-tier GPUs or NVMe storage can strain budgets.

Real-World Example

Imagine you’re training a large language model on Clarifai’s local runner. As the dataset grows, the training job becomes I/O bound because of insufficient memory. Vertical scaling might involve adding more RAM or upgrading to a GPU with more VRAM, allowing the model to hold more parameters in memory, resulting in faster training. However, once the hardware capacity is maxed out, you’ll need an alternative strategy, such as horizontal or hybrid scaling.

Clarifai Product Integration

Clarifai’s local runners let you deploy models on-premises or on edge devices. If you need more processing power for inference, you can upgrade your local hardware (vertical scaling) without changing the Clarifai API calls. Clarifai also provides high-performance inference workers in the cloud; you can start with vertical scaling by choosing larger compute plans and then transition to horizontal scaling when your models require more throughput.

Expert Insights

- Engineers caution that vertical scaling provides diminishing returns: each successive hardware upgrade yields smaller performance improvements relative to cost. This is why vertical scaling is often a stepping stone rather than a long-term solution.

- Database experts emphasise that vertical scaling is ideal for transactional workloads requiring strong consistency, such as bank transactions.

- Clarifai recommends vertical scaling for low-traffic or prototype models where simplicity and quick setup outweigh the need for redundancy.

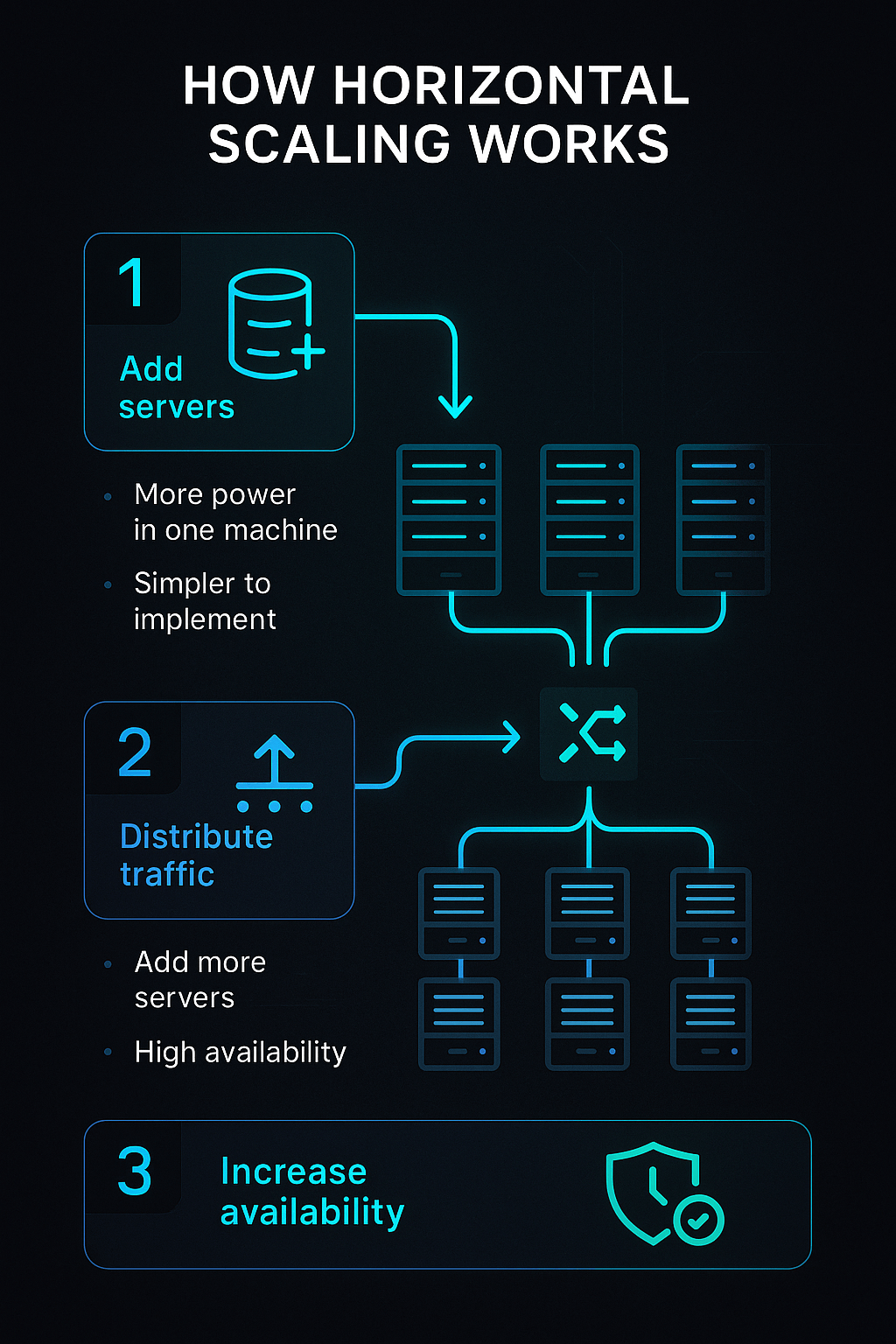

Understanding Horizontal Scaling (Scaling Out)

Quick Summary: What is horizontal scaling?

Horizontal scaling adds more servers or nodes to distribute workload, improving resilience and concurrency but increasing complexity.

What Is Horizontal Scaling?

Horizontal scaling, or scaling out, is the process of adding more machines to handle workload distribution. Instead of upgrading a single server, you replicate services across multiple nodes. For AI applications, this might mean deploying multiple inference servers behind a load balancer. Requests are distributed so that no single server becomes a bottleneck.
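To make the idea of spreading requests across replicas concrete, here is a minimal round-robin sketch in Python. It is only an illustration: the `RoundRobinBalancer` class and the server addresses are hypothetical, and real load balancers such as NGINX or HAProxy add health checks, weighting and connection handling that are omitted here.

```python
from collections import Counter
from itertools import cycle


class RoundRobinBalancer:
    """Hands out backend addresses in a fixed rotating order."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self._backends = list(backends)
        self._rotation = cycle(self._backends)

    def next_backend(self):
        # Each call returns the next backend in the rotation.
        return next(self._rotation)


# Hypothetical inference server addresses, used for illustration only.
balancer = RoundRobinBalancer(
    ["inference-1:8080", "inference-2:8080", "inference-3:8080"]
)

# Route nine requests and count how many each backend receives.
assignments = Counter(balancer.next_backend() for _ in range(9))
print(assignments)
```

With nine requests and three backends, each backend receives three requests, which is the even spread that keeps any single server from becoming a bottleneck.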

When you scale out, you must handle tasks such as load balancing, sharding, data replication and service discovery, because your application components run across different machines. Horizontal scaling is fundamental to microservices architectures, container orchestration systems like Kubernetes and modern serverless platforms.

Advantages of Horizontal Scaling

- Near-limitless scalability: You can add more servers as needed, enabling your system to handle unpredictable spikes. Cloud providers make it easy to spin up instances and integrate them into auto-scaling groups.

- Improved fault tolerance and redundancy: If one node fails, traffic is rerouted to others and the system continues operating. This is crucial for AI services that must maintain high availability.

- Zero or minimal downtime: New nodes can be added without shutting down the system. This property allows continuous scaling during events like product launches or viral campaigns.

- Flexible cost management: You can pay only for what you use, enabling better alignment of compute costs with real demand; but be mindful of network and management overhead.

Challenges of Horizontal Scaling

- Distributed system complexity: You must handle data consistency (often eventual rather than strong), concurrency and network latency. Orchestrating distributed components requires expertise.

- Higher initial complexity: Setting up load balancers, Kubernetes clusters or service meshes takes time. Observability tools and automation are essential to maintain reliability.

- Network overhead: Inter-node communication introduces latency; you need to optimise data transfer and caching strategies.

- Cost management: Although horizontal scaling spreads costs, adding more servers can still be expensive if not managed properly.

Real-World Example

Suppose you’ve deployed a computer vision API using Clarifai to classify millions of images per day. When a marketing campaign drives a sudden traffic spike, a single server cannot handle the load. Horizontal scaling involves deploying multiple inference servers behind a load balancer, allowing requests to be distributed across nodes. Clarifai’s compute orchestration can automatically start new containers when CPU or memory metrics exceed thresholds. When the load diminishes, unused nodes are gracefully removed, saving costs.

Clarifai Product Integration

Clarifai’s multi-node deployment capabilities integrate seamlessly with horizontal scaling strategies. You can run multiple inference workers across different availability zones, behind a managed load balancer. Clarifai’s orchestration monitors metrics and spins containers up or down automatically, enabling efficient scaling out. Developers can also integrate Clarifai inference into a Kubernetes cluster; using Clarifai’s APIs, the service can be distributed across nodes for higher throughput.

Expert Insights

- System architects highlight that horizontal scaling brings high availability: when one machine fails, the system remains operational.

- However, engineers warn that distributed data consistency is a major challenge; you may need to adopt eventual consistency models or consensus protocols to maintain data correctness.

- Clarifai advocates for a microservices approach, where AI inference is decoupled from business logic, making horizontal scaling easier to implement.

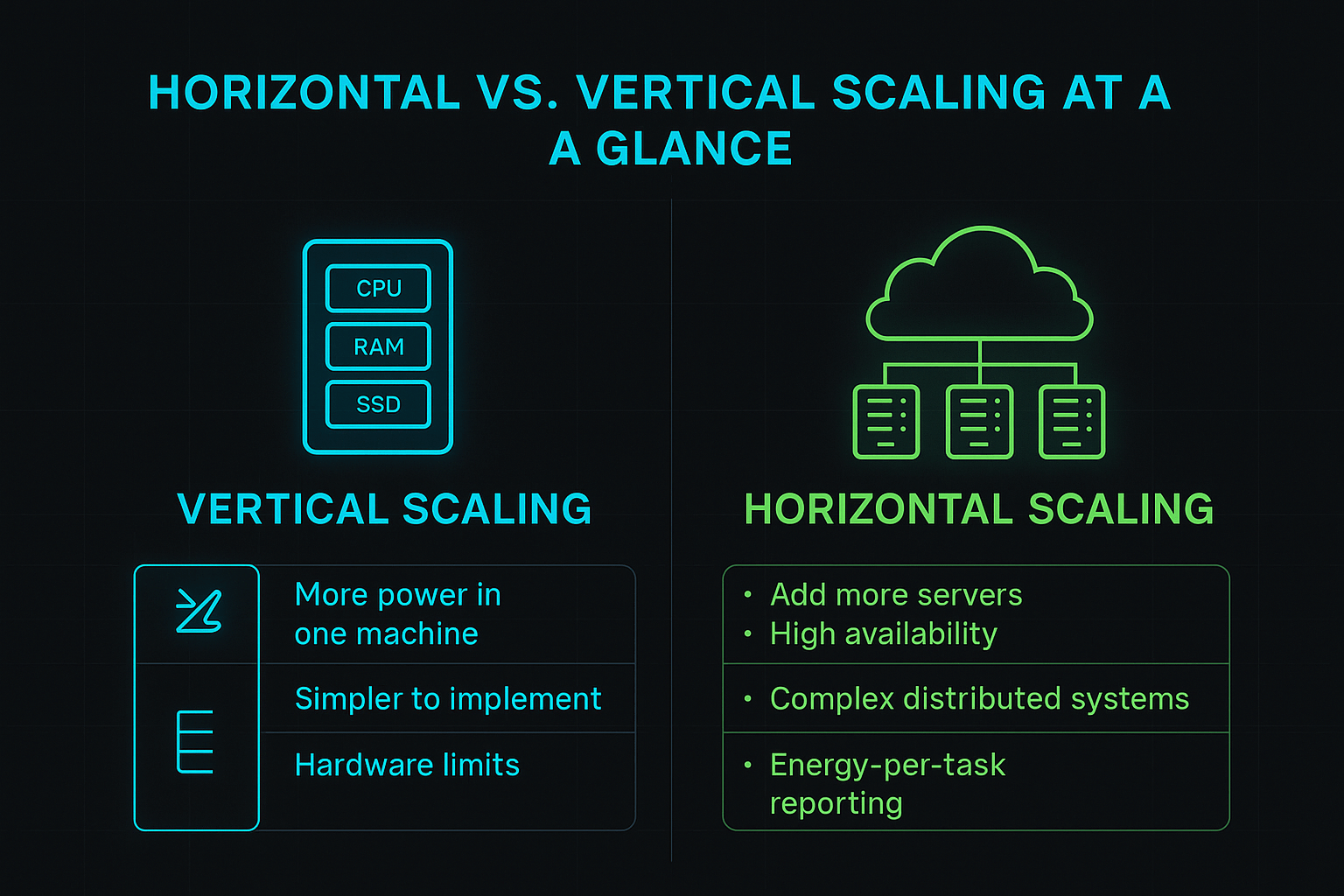

Comparing Horizontal vs Vertical Scaling: Pros, Cons & Key Differences

Quick Summary: How do horizontal and vertical scaling differ?

Vertical scaling increases the resources of a single machine, whereas horizontal scaling distributes the workload across multiple machines. Vertical scaling is simpler but limited, while horizontal scaling offers better resilience and scalability at the cost of complexity.

Side-by-Side Comparison

To decide which approach suits your needs, consider the following key differences:

- Resource Addition: Vertical scaling upgrades an existing node (CPU, memory); horizontal scaling adds more nodes.

- Scalability: Vertical scaling is limited by hardware constraints; horizontal scaling offers near-limitless scalability by adding nodes.

- Complexity: Vertical scaling is straightforward; horizontal scaling introduces distributed system complexities.

- Fault Tolerance: Vertical scaling has a single point of failure; horizontal scaling improves resilience because failure of one node doesn’t bring down the system.

- Cost Dynamics: Vertical scaling may be cheaper initially but becomes expensive at high tiers; horizontal scaling spreads costs but requires orchestration tools and adds network overhead.

- Downtime: Vertical scaling often requires downtime for hardware upgrades; horizontal scaling usually allows on-the-fly addition or removal of nodes.

Pros and Cons

| Strategy | Pros | Cons |
| --- | --- | --- |
| Vertical scaling | Simplicity, minimal architectural changes, strong consistency, lower latency | Hardware limits, single point of failure, downtime during upgrades, escalating costs |
| Horizontal scaling | High availability, elasticity, zero downtime, near-limitless scalability | Complexity, network latency, consistency challenges, management overhead |

Diagonal/Hybrid Scaling

Diagonal scaling combines both strategies. It involves scaling up a machine until it reaches an economically efficient threshold, then scaling out by adding more nodes. This approach lets you balance cost and performance. For instance, you might scale up your database server to maximise performance and maintain strong consistency, then deploy additional stateless inference servers horizontally to handle surges in traffic. Companies such as ridesharing and hospitality startups have adopted diagonal scaling, starting with vertical upgrades and then rolling out microservices to handle growth.

Clarifai Product Integration

Clarifai supports both vertical and horizontal scaling strategies, enabling hybrid scaling. You can choose larger inference instances (vertical) or spin up multiple smaller instances (horizontal) depending on your workload. Clarifai’s compute orchestration offers flexible scaling policies, including mixing on-premise local runners with cloud-based inference workers, enabling diagonal scaling.

Expert Insights

- Technical leads recommend starting with vertical scaling to simplify deployment, then gradually introducing horizontal scaling as demand grows and complexity becomes manageable.

- Hybrid scaling is particularly effective for AI services: you can maintain strong consistency for stateful components (e.g., model metadata) while horizontally scaling stateless inference endpoints.

- Clarifai’s experience shows that customers who adopt hybrid scaling enjoy improved reliability and cost efficiency, especially when using Clarifai’s orchestration to automatically manage horizontal and vertical resources.

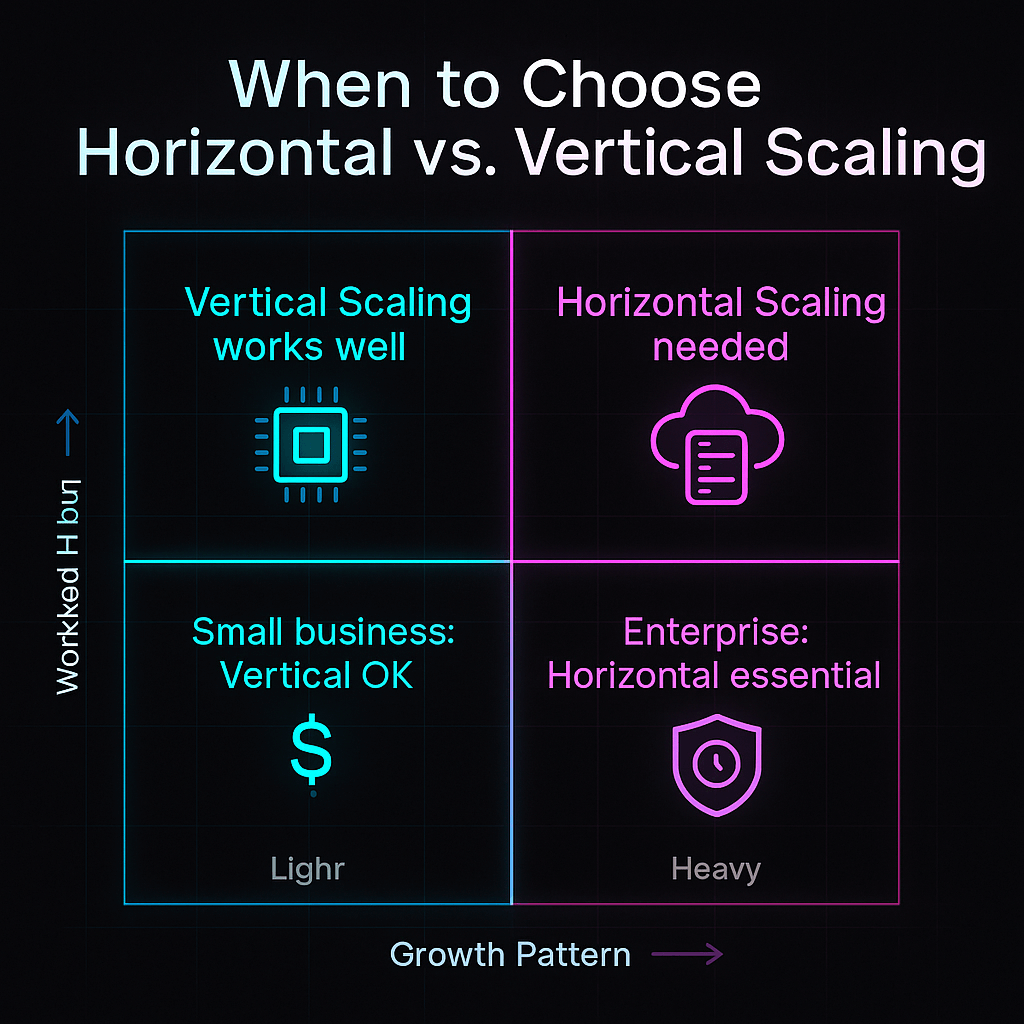

Decision Factors: How to Choose the Right Scaling Strategy

Quick Summary: How should you choose between horizontal and vertical scaling?

Choosing a scaling strategy depends on workload characteristics, growth projections, cost constraints, architectural complexity, and reliability requirements.

Key Decision Criteria

- Workload Type:

  - CPU-bound or memory-bound workloads (e.g., large model training) may benefit from vertical scaling initially, because more resources on a single machine reduce communication overhead.

  - Stateless or embarrassingly parallel workloads (e.g., image classification across many images) are suitable for horizontal scaling because requests can be distributed easily.

- Stateful vs. Stateless Components:

  - Stateful services (databases, model metadata stores) often require strong consistency, making vertical or hybrid scaling preferable.

  - Stateless services (API gateways, inference microservices) are ideal for horizontal scaling.

- Growth Projections:

  - If you anticipate exponential growth or unpredictable spikes, horizontal or diagonal scaling is essential.

  - For limited or steady growth, vertical scaling may suffice.

- Cost Considerations:

  - Compare capital expenditure (capex) for hardware upgrades vs. operational expenditure (opex) for running multiple instances.

  - Use cost optimisation tools to estimate the total cost of ownership over time.

- Availability Requirements:

  - Mission-critical systems may require high redundancy and failover; horizontal scaling provides better fault tolerance.

  - Non-critical prototypes may tolerate short downtime and can use vertical scaling for simplicity.

- Regulatory & Security Requirements:

  - Some industries require data to remain within specific geographies; vertical scaling on local servers may be necessary.

  - Horizontal scaling across regions must adhere to compliance frameworks.

Developing a Decision Framework

Create a decision matrix evaluating these factors for your application. Assign weights based on priorities; for example, reliability may be more important than cost for a healthcare AI system. Clarifai’s customer success team often guides organisations through these decision matrices, factoring in model characteristics, user growth rates and regulatory constraints.
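A weighted decision matrix of this kind can be sketched in a few lines of Python. Everything below is an assumption made for illustration: the factor names, the weights (which sum to 1.0 and favour reliability, as in the healthcare example) and the 1 to 5 scores are placeholders you would replace with your own assessment.

```python
# Illustrative weights (summing to 1.0); reliability is weighted highest.
WEIGHTS = {"reliability": 0.4, "cost": 0.2, "complexity": 0.2, "growth": 0.2}

# Illustrative 1-5 scores per strategy (higher is better for that factor).
SCORES = {
    "vertical": {"reliability": 2, "cost": 4, "complexity": 5, "growth": 2},
    "horizontal": {"reliability": 5, "cost": 3, "complexity": 2, "growth": 5},
    "hybrid": {"reliability": 4, "cost": 3, "complexity": 3, "growth": 4},
}


def weighted_score(scores, weights):
    """Sum of weight * score over every factor in the weight table."""
    return sum(weights[factor] * scores[factor] for factor in weights)


def rank_strategies(score_table, weights):
    """Return (strategy, total) pairs sorted from best to worst."""
    totals = {
        name: weighted_score(scores, weights)
        for name, scores in score_table.items()
    }
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


ranking = rank_strategies(SCORES, WEIGHTS)
for name, total in ranking:
    print(f"{name}: {total:.2f}")
```

With these placeholder numbers horizontal scaling ranks first, hybrid second and vertical third. Changing the weights, for instance raising the weight on complexity for a small team, can reorder the result, which is the point of making the priorities explicit.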

Clarifai Product Integration

Clarifai’s management console provides insights into model usage, latency and throughput, enabling data-driven scaling decisions. You can start with vertical scaling by selecting larger compute plans, then monitor metrics to decide when to scale horizontally using auto-scaling groups. Clarifai also offers consulting services to help design scaling strategies tailored to your workloads.

Expert Insights

- Architects emphasise that a one-size-fits-all strategy doesn’t exist; you should evaluate each component of your system individually and choose the appropriate scaling approach.

- Industry analysts recommend factoring in environmental impact: scaling strategies that reduce energy consumption while meeting performance goals can yield long-term cost savings and align with corporate sustainability initiatives.

- Clarifai advises starting with thorough monitoring and profiling to understand bottlenecks before investing in scaling.

Implementation Strategies and Best Practices

Quick Summary: How do you implement vertical and horizontal scaling?

Vertical scaling requires upgrading hardware or selecting larger instances, while horizontal scaling involves deploying multiple nodes with load balancing and orchestration. Best practices include automation, monitoring and testing.

Implementing Vertical Scaling

- Hardware Upgrades: Add CPU cores, memory modules or faster storage. For cloud instances, resize to a larger tier. Plan upgrades during maintenance windows to minimise the impact of downtime.

- Software Optimisation: Adjust operating system parameters and allocate memory more efficiently. Fine-tune frameworks (e.g., use larger GPU memory pools) to exploit new resources.

- Virtualisation and Hypervisors: Ensure hypervisors allocate resources correctly; consider using Clarifai’s local runner on an upgraded server to maintain performance locally.

Implementing Horizontal Scaling

- Load Balancing: Use reverse proxies or load balancers (e.g., NGINX, HAProxy) to distribute requests across multiple instances.

- Container Orchestration: Adopt Kubernetes or Docker Swarm to automate deployment and scaling. Use the Horizontal Pod Autoscaler (HPA) to adjust the number of pods based on CPU/memory metrics.

- Service Discovery: Use a service registry (e.g., Consul, etcd) or Kubernetes DNS to enable instances to discover each other.

- Data Sharding & Replication: For databases, shard or partition data across nodes; implement replication and consensus protocols to maintain data integrity.

- Monitoring & Observability: Use tools like Prometheus, Grafana or Clarifai’s built-in dashboards to monitor metrics and trigger scaling events.

- Automation & Infrastructure as Code: Manage infrastructure with Terraform or CloudFormation to ensure reproducibility and consistency.

Utilizing Hybrid Approaches

Hybrid scaling often requires both vertical and horizontal techniques. For example, upgrade the base server (vertical) while also configuring auto-scaling groups (horizontal). The Kubernetes Vertical Pod Autoscaler (VPA) can recommend optimal resource sizes for pods, complementing the HPA.

Creative Example

Imagine you’re deploying a text summarisation API. Initially, you run one server with 32 GB of RAM (vertical scaling). As traffic increases, you set up a Kubernetes cluster with an HPA to manage multiple replica pods. The HPA scales pods up when CPU utilisation exceeds 70% and scales down when utilisation drops, ensuring cost efficiency. Meanwhile, a VPA monitors resource usage and adjusts pod memory requests to optimise utilisation. A cluster autoscaler adds or removes worker nodes, providing additional capacity when new pods need to run.
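The replica calculation behind this example can be approximated in Python. The sketch follows the formula documented for the Kubernetes HPA, desired = ceil(current replicas × current metric / target metric), with a tolerance band around the target; the 70% target comes from the example above, while the replica bounds and the function name are assumptions. The real controller also handles stabilisation windows, missing metrics and pod readiness, which are left out.

```python
import math


def desired_replicas(
    current_replicas,
    current_utilisation,
    target_utilisation=70.0,
    min_replicas=1,
    max_replicas=10,
    tolerance=0.1,
):
    """Approximate the HPA replica calculation for a utilisation metric."""
    ratio = current_utilisation / target_utilisation
    # Within the tolerance band, leave the replica count unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (assumed values in this sketch).
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, 90))   # load above target: scale out
print(desired_replicas(4, 35))   # load well below target: scale in
print(desired_replicas(4, 72))   # close to target: no change
```

With four pods at 90% CPU the sketch asks for six pods, at 35% it drops to two, and at 72% it stays at four because the ratio falls inside the tolerance band.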

Clarifai Product Integration

- Compute Orchestration: Clarifai’s platform supports containerised deployments, making it easy to integrate with Kubernetes or serverless frameworks. You can define auto-scaling policies that spin up additional inference workers when metrics exceed thresholds, then spin them down when demand drops.

- Model Inference API: Clarifai’s API endpoints can be placed behind load balancers to distribute inference requests across multiple replicas. Because Clarifai uses stateless RESTful endpoints, horizontal scaling is seamless.

- Local Runners: If you prefer running models on-premises, Clarifai’s local runners benefit from vertical scaling. You can upgrade your server and run multiple processes to handle more inference requests.

Expert Insights

- DevOps engineers caution that improper scaling policies can lead to thrashing, where instances are created and terminated too frequently; they recommend setting cool-down periods and stable thresholds.

- Researchers highlight hybrid autoscaling frameworks using machine-learning models: one study designed a proactive autoscaling mechanism combining Facebook Prophet and LSTM to predict workload and adjust pod counts. This approach outperformed traditional reactive scaling in accuracy and resource efficiency.

- Clarifai’s SRE team emphasises the importance of observability: without metrics and logs, it’s impossible to fine-tune scaling policies.
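The cool-down advice in the first insight above can be illustrated with a small Python sketch. The `CooldownScaler` class, its thresholds and the 300-second cool-down are all hypothetical values chosen for the example; time is passed in explicitly (in seconds) so the behaviour is easy to follow and test.

```python
class CooldownScaler:
    """Threshold-based scaler that waits out a cool-down between changes."""

    def __init__(self, scale_up_at=70.0, scale_down_at=40.0,
                 cooldown_seconds=300, min_replicas=1, max_replicas=10):
        self.scale_up_at = scale_up_at
        self.scale_down_at = scale_down_at
        self.cooldown_seconds = cooldown_seconds
        self.min_replicas = min_replicas
        self.max_replicas = max_replicas
        self.replicas = min_replicas
        self._last_change = None  # time of the most recent scaling action

    def observe(self, utilisation, now):
        """Record a utilisation sample at time `now` and return the replica count."""
        in_cooldown = (
            self._last_change is not None
            and now - self._last_change < self.cooldown_seconds
        )
        if in_cooldown:
            return self.replicas  # ignore the sample to avoid thrashing
        if utilisation > self.scale_up_at and self.replicas < self.max_replicas:
            self.replicas += 1
            self._last_change = now
        elif utilisation < self.scale_down_at and self.replicas > self.min_replicas:
            self.replicas -= 1
            self._last_change = now
        return self.replicas


scaler = CooldownScaler()
samples = [(90, 0), (90, 60), (90, 300), (20, 400), (20, 600), (55, 1000)]
history = [scaler.observe(utilisation, now) for utilisation, now in samples]
print(history)
```

The gap between the scale-up and scale-down thresholds acts as a stable band, and the cool-down means the spike at 60 seconds and the dip at 400 seconds do not trigger a second change straight after the previous one.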

Performance, Latency & Throughput Considerations

Quick Summary: How do scaling strategies affect performance and latency?

Vertical scaling reduces network overhead and latency but is limited by single-machine concurrency. Horizontal scaling increases throughput through parallelism, though it introduces inter-node latency and complexity.

Latency Results

Vertical scaling keeps data and computation on a single machine, allowing processes to communicate via memory or a shared bus. This leads to lower latency for tasks such as real-time inference or high-frequency trading. However, even large machines can handle only so many concurrent requests.

Horizontal scaling distributes workloads across multiple nodes, which means requests may traverse a network switch or even cross availability zones. Network hops introduce latency; you must design your system to keep latency within acceptable bounds. Techniques like locality-aware load balancing, caching and edge computing mitigate the latency impact.

Throughput Effects

Horizontal scaling shines when increasing throughput. By distributing requests across many nodes, you can process thousands of concurrent requests. This is critical for AI inference workloads with unpredictable demand. In contrast, vertical scaling increases throughput only up to the machine’s capacity; once maxed out, adding more threads or processes yields diminishing returns because of CPU contention.

CAP Theorem and Consistency Models

Distributed systems face the CAP theorem, which states that a system cannot simultaneously guarantee consistency, availability and partition tolerance; when a network partition occurs, it must give up either consistency or availability. Horizontal scaling often sacrifices strong consistency for eventual consistency. For AI applications that don’t require transactional consistency (e.g., recommendation engines), eventual consistency may be acceptable. Vertical scaling avoids this trade-off but lacks redundancy.

Creative Example

Consider a real-time translation service built on Clarifai. For lower latency in high-stakes meetings, you might run a powerful GPU instance with lots of memory (vertical scaling). This instance processes translation requests quickly but can only handle a limited number of users. For an online conference with thousands of attendees, you scale horizontally by adding more translation servers; throughput increases massively, but you must manage session consistency and handle network delays.

Clarifai Product Integration

- Clarifai offers globally distributed inference endpoints to reduce latency by bringing compute closer to users. Using Clarifai’s compute orchestration, you can route requests to the nearest node, balancing latency and throughput.

- Clarifai’s API supports batch processing for high-throughput scenarios, enabling efficient handling of large datasets across horizontally scaled clusters.

Expert Insights

- Performance engineers note that vertical scaling is beneficial for latency-sensitive workloads, such as fraud detection or autonomous vehicle perception, because data stays local.

- Distributed systems experts stress the need for caching and data locality when scaling horizontally; otherwise, network overhead can negate throughput gains.

- Clarifai’s performance team recommends combining vertical and horizontal scaling: allocate enough resources to individual nodes for baseline performance, then add nodes to handle peaks.

Cost Analysis & Total Cost of Ownership

Quick Summary: What are the cost implications of scaling?

Vertical scaling may have a lower upfront cost but escalates rapidly at higher tiers; horizontal scaling distributes costs over many instances but requires orchestration and management overhead.

Cost Models

- Capital Expenditure (Capex): Vertical scaling often involves purchasing or leasing high-end hardware. The cost per unit of performance increases as you approach top-tier resources. For on-premise deployments, capex can be significant because you must invest in servers, GPUs and cooling.

- Operational Expenditure (Opex): Horizontal scaling involves paying for many instances, usually on a pay-as-you-go model. Opex can be easier to budget and track, but it increases with the number of nodes and their usage.

- Hidden Costs: Consider downtime (maintenance for vertical scaling), energy consumption (data centres consume massive power), licensing fees for software and added complexity (DevOps and SRE staffing).

Cost Dynamics

Vertical scaling may appear cheaper initially, especially when starting with small workloads. However, as you upgrade to higher-capacity hardware, cost rises steeply. For example, upgrading from a 16 GB GPU to a 32 GB GPU may double or triple the price. Horizontal scaling spreads cost across multiple lower-cost machines, which can be turned off when not needed, making it more cost-effective at scale. However, orchestration and network costs add overhead.

Creative Example

Assume you need to handle 100,000 image classifications per minute. You can choose a vertical strategy by purchasing a top-of-the-line server for $50,000 capable of handling the load. Alternatively, horizontal scaling involves leasing twenty smaller servers at $500 per month each. The second option costs $10,000 per month but lets you shut down servers during off-peak hours, potentially saving money. Hybrid scaling might involve buying a mid-tier server and leasing additional capacity when needed.
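The arithmetic in this example can be checked with a short Python sketch. The prices are the ones quoted above; the `utilisation` parameter (the fraction of server-hours you actually pay for after off-peak shutdowns) is an assumption added for illustration, and the comparison deliberately ignores power, maintenance, staffing and hardware resale value.

```python
PURCHASE_PRICE = 50_000          # one top-of-the-line server, in dollars
LEASED_SERVERS = 20              # number of smaller leased servers
LEASE_PER_SERVER_MONTH = 500     # dollars per server per month


def monthly_lease_cost(servers=LEASED_SERVERS,
                       price=LEASE_PER_SERVER_MONTH,
                       utilisation=1.0):
    """Monthly bill when only `utilisation` of the server-hours are paid for."""
    return servers * price * utilisation


def break_even_months(purchase_price=PURCHASE_PRICE, utilisation=1.0):
    """Months of leasing after which the purchase would have cost less."""
    return purchase_price / monthly_lease_cost(utilisation=utilisation)


print(monthly_lease_cost())                  # all servers on all month
print(break_even_months())                   # break-even at full usage
print(break_even_months(utilisation=0.5))    # servers off half the time
```

At full usage the lease costs $10,000 per month and matches the purchase price after five months; if off-peak shutdowns halve the bill, the break-even point moves out to ten months.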

Clarifai Product Integration

- Clarifai offers flexible pricing, allowing you to pay only for the compute you use. Starting with a smaller plan (vertical) and scaling horizontally with additional inference workers can balance cost and performance.

- Clarifai’s compute orchestration helps optimise costs by automatically turning off unused containers and scaling down resources during low‑demand periods.

Expert Insights

- Financial analysts suggest modelling costs over the expected lifetime of the service, including maintenance, energy and staffing. They warn against focusing solely on hardware costs.

- Sustainability experts emphasise that the environmental cost of scaling should be factored into TCO; investing in green data centres and energy‑efficient hardware can reduce long‑term expenses.

- Clarifai’s customer success team encourages using cost monitoring tools to track usage and set budgets, preventing runaway expenses.

Hybrid/Diagonal Scaling Strategies

Quick Summary: What is hybrid or diagonal scaling?

Hybrid scaling combines vertical and horizontal strategies: scale up for as long as a bigger machine remains cost efficient, then scale out with additional nodes.

What Is Hybrid Scaling?

Hybrid (diagonal) scaling recognises that neither vertical nor horizontal scaling alone can accommodate all workloads efficiently. It involves scaling up a machine to its cost‑effective limit and then scaling out when additional capacity is required. For example, you might upgrade your GPU server until the cost of further upgrades outweighs the benefits, then deploy additional servers to handle more requests.
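
One way to picture the "scale up to the cost‑effective limit, then scale out" rule is the Python sketch below. It is only a heuristic under stated assumptions: the tier names, prices and capacities are invented for illustration, and "cost effective" is reduced to monthly cost per request per second, ignoring redundancy, latency and licensing.

```python
import math
from typing import NamedTuple


class Tier(NamedTuple):
    name: str
    monthly_cost: float   # USD per node (hypothetical)
    capacity: float       # requests/second one node can serve (hypothetical)


def plan_diagonal(tiers: list[Tier], demand: float) -> tuple[Tier, int]:
    """Pick a machine size, then the number of nodes of that size.

    Scale up while a bigger tier is no more expensive per request,
    stop as soon as one node covers demand or upgrades stop paying off,
    then scale out by adding nodes of the chosen tier.
    """
    ordered = sorted(tiers, key=lambda t: t.capacity)
    chosen = ordered[0]
    for tier in ordered[1:]:
        if chosen.capacity >= demand:
            break  # a single node of the current tier already covers demand
        if tier.monthly_cost / tier.capacity > chosen.monthly_cost / chosen.capacity:
            break  # the upgrade costs more per request: stop scaling up
        chosen = tier
    nodes = max(1, math.ceil(demand / chosen.capacity))
    return chosen, nodes


TIERS = [
    Tier("small", 400, 100),    # 4.0 USD per req/s
    Tier("medium", 700, 200),   # 3.5 USD per req/s
    Tier("large", 1800, 400),   # 4.5 USD per req/s
]

tier, nodes = plan_diagonal(TIERS, demand=900)
print(tier.name, nodes)   # medium 5
```

In this made‑up price list the medium tier is the cost‑effective limit, so demand beyond one medium node is met by adding more medium nodes instead of moving to the large tier.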

Why Choose Hybrid Scaling?

- Cost Optimisation: Hybrid scaling helps balance capex and opex. You use vertical scaling to get the most out of your hardware, then add nodes horizontally when demand exceeds that capacity.

- Performance & Flexibility: You maintain low latency for key components through vertical scaling while scaling out stateless services to handle peaks.

- Risk Mitigation: Hybrid scaling reduces single‑point‑of‑failure risk by adding redundancy while still benefiting from strong consistency on scaled‑up nodes.

Real‑World Examples

Start‑ups often begin with a vertically scaled monolith; as traffic grows, they break services into microservices and scale out horizontally. Transportation and hospitality platforms used this approach, scaling up early on and gradually adopting microservices and auto‑scaling groups.

Clarifai Product Integration

- Clarifai’s platform allows you to run models on‑premises or in the cloud, making hybrid scaling straightforward. You can vertically scale an on‑premises server for sensitive data and horizontally scale cloud inference for public traffic.

- Clarifai’s compute orchestration can manage both types of scaling; policies can prioritise local resources and burst to the cloud when demand surges.

Expert Insights

- Architects argue that hybrid scaling is the most practical choice for many modern workloads, as it provides a balance of performance, cost and reliability.

- Research on predictive autoscaling suggests integrating hybrid forecasting models (e.g., Prophet + LSTM) with vertical scaling to further optimise resource allocation.

- Clarifai’s engineers highlight that hybrid scaling requires careful coordination between components; they recommend using orchestration tools to manage failover and ensure consistent routing of requests.

Use Cases & Industry Examples

Quick Summary: Where are scaling strategies used in the real world?

Scaling strategies differ by industry and workload; AI‑powered services in e‑commerce, media, finance, IoT and start‑ups each adopt different scaling approaches based on their specific needs.

E‑Commerce & Retail

Online marketplaces often experience unpredictable spikes during sales events. They horizontally scale stateless web services (product catalogues, recommendation engines) to handle surges. Databases may be scaled vertically to maintain transaction integrity. Clarifai’s visual recognition models can be deployed using hybrid scaling: vertical scaling ensures stable product image classification while horizontal scaling handles increased search queries.

Media & Streaming

Video streaming platforms require massive throughput. They employ horizontal scaling across distributed servers for streaming and content delivery networks (CDNs). Metadata stores and user preference engines may scale vertically to maintain consistency. Clarifai’s video analysis models can run on distributed clusters, analysing frames in parallel while metadata is stored on scaled‑up servers.

Financial Services

Banks and trading platforms prioritise consistency and reliability. They often vertically scale core transaction systems to guarantee ACID properties. However, front‑end risk analytics and fraud detection systems scale horizontally to process large volumes of transactions concurrently. Clarifai’s anomaly detection models are used in horizontal clusters to scan for fraudulent patterns in real time.

IoT & Edge Computing

Edge devices collect data and perform initial processing locally; because of their hardware constraints, they are scaled vertically. Cloud back‑ends scale horizontally to aggregate and analyse data. Clarifai’s edge runners enable on‑device inference, while data is sent to cloud clusters for further analysis. Hybrid scaling ensures immediate response at the edge while leveraging cloud capacity for deeper insights.

Start‑Ups & SMBs

Small companies typically start with vertical scaling because it is simple and cost effective. As they grow, they adopt horizontal scaling for better resilience. Clarifai’s flexible pricing and compute orchestration allow start‑ups to begin small and scale easily when needed.

Case Studies

- An e‑commerce site adopted auto‑scaling groups to handle Black Friday traffic, using horizontal scaling for web servers and vertical scaling for the order management database.

- A financial institution improved resilience by migrating its risk assessment engine to a horizontally scaled microservices architecture while retaining a vertically scaled core banking system.

- A research lab used Clarifai’s models for wildlife monitoring, deploying local runners at remote sites (vertical scaling) and sending aggregated data to a central cloud cluster for analysis (horizontal scaling).

Expert Insights

- Industry experts note that selecting the right scaling strategy depends heavily on domain requirements; there is no universal solution.

- Clarifai’s customer success team has witnessed improved user experiences and reduced latency when clients adopt hybrid scaling for AI inference workloads.

Emerging Trends & Future of Scaling

Quick Summary: What trends are shaping the future of scaling?

Kubernetes autoscaling, AI‑driven predictive autoscaling, serverless computing, edge computing and sustainability initiatives are reshaping how organisations scale their systems.

Kubernetes Auto‑Scaling

Kubernetes supports several auto‑scaling mechanisms: the Horizontal Pod Autoscaler (HPA) adjusts the number of pods based on CPU or memory usage, while the Vertical Pod Autoscaler (VPA) adjusts the CPU and memory allocated to pods. A cluster autoscaler adds or removes worker nodes. These tools enable fine‑grained control over resource allocation, improving efficiency and reliability.
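
The Kubernetes documentation describes the HPA's core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with a tolerance band (0.1 by default) inside which no change is made. The Python sketch below reproduces that arithmetic for illustration only; the function name and the replica bounds are assumptions, and the real controller also handles missing metrics, pod readiness and stabilisation windows.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA ratio formula.

    current_metric and target_metric use the same unit, for example
    average CPU utilisation in percent across the current pods.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas          # close enough to target: do nothing
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(4, current_metric=90, target_metric=60))   # 6
print(desired_replicas(4, current_metric=63, target_metric=60))   # 4
print(desired_replicas(4, current_metric=15, target_metric=60))   # 1
```

The first call scales out because pods run at 1.5 times the target, the second stays put because it is within tolerance, and the third scales in to the minimum.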

AI‑Driven Predictive Autoscaling

Research shows that combining statistical models like Prophet with neural networks like LSTM can predict workload patterns and proactively scale resources. Predictive autoscaling aims to allocate capacity before spikes occur, reducing latency and avoiding overprovisioning. Machine‑learning‑driven autoscaling will likely become more prevalent as AI systems grow in complexity.
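
The idea of provisioning ahead of demand can be sketched without any machine‑learning dependency. The Python example below deliberately swaps the Prophet + LSTM combination mentioned above for Holt's linear exponential smoothing, a much simpler forecaster, so it illustrates the control loop and not the research method. The smoothing constants, the 20% headroom and the per‑replica capacity are all assumptions.

```python
import math


def forecast_next(history: list[float], alpha: float = 0.5,
                  beta: float = 0.3) -> float:
    """One-step-ahead forecast with Holt's linear (double) exponential smoothing."""
    if len(history) < 2:
        raise ValueError("need at least two observations")
    level = history[0]
    trend = history[1] - history[0]
    for value in history[1:]:
        prev_level = level
        level = alpha * value + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
    return level + trend


def replicas_for(forecast_rps: float, per_replica_rps: float,
                 headroom: float = 1.2, min_replicas: int = 1) -> int:
    """Replicas needed to serve the forecast load plus a safety margin."""
    return max(min_replicas, math.ceil(forecast_rps * headroom / per_replica_rps))


# Requests per second observed over the last four intervals (made-up data).
history = [100.0, 120.0, 140.0, 160.0]
predicted = forecast_next(history)              # roughly 180 for this linear ramp
print(replicas_for(predicted, per_replica_rps=50.0))   # 5
```

A reactive autoscaler would size for the last observed 160 requests per second; the forecast sizes for the next interval instead, which is the behaviour predictive autoscaling is after.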

Serverless & Function‑as‑a‑Service (FaaS)

Serverless platforms automatically scale functions based on demand, freeing developers from infrastructure management. They scale horizontally behind the scenes, enabling cost‑efficient handling of intermittent workloads. AWS introduced predictive scaling for container services, harnessing machine learning to anticipate demand and adjust scaling policies accordingly (as reported in industry news). Clarifai’s APIs can be integrated into serverless workflows to create event‑driven AI applications.

Edge Computing & Cloud‑Edge Hybrid

Edge computing brings computation closer to the user, reducing latency and bandwidth consumption. Vertical scaling on edge devices (e.g., upgrading memory or storage) can improve real‑time inference, while horizontal scaling in the cloud aggregates and analyses data streams. Clarifai’s edge solutions allow models to run on local hardware; combined with cloud resources, this hybrid approach ensures both fast response and deep analysis.

Sustainability and Green Computing

Hyperscale data centres consume vast amounts of energy, and the combination of vertical and horizontal scaling increases their utilisation. Future scaling strategies must integrate energy‑efficient hardware, carbon‑aware scheduling and renewable energy sources to reduce environmental impact. AI‑powered resource management can optimise workloads to run on servers with lower carbon footprints.

Clarifai Product Integration

- Clarifai is exploring AI‑driven predictive autoscaling, leveraging workload analytics to anticipate demand and adjust inference capacity in real time.

- Clarifai’s support for Kubernetes makes it easy to adopt HPA and VPA; models can automatically scale based on CPU/GPU usage.

- Clarifai is committed to sustainability, partnering with green cloud providers and offering efficient inference options to reduce power usage.

Expert Insights

- Industry analysts believe that intelligent autoscaling will become the norm, where machine learning models predict demand, allocate resources and consider carbon footprint simultaneously.

- Edge computing advocates argue that local processing will increase, necessitating vertical scaling on devices and horizontal scaling in the cloud.

- Clarifai’s research team is working on dynamic model compression and architecture search, enabling models to scale down gracefully for edge deployment while maintaining accuracy.

Step‑by‑Step Guide for Selecting and Implementing a Scaling Strategy

Quick Summary: How do you select and implement a scaling strategy?

Follow a structured process: assess workloads, choose the right scaling pattern for each component, implement scaling mechanisms, monitor performance and adjust policies.

Step 1: Assess Workloads & Bottlenecks

- Profile your application: Use monitoring tools to understand CPU, memory, I/O and network usage. Identify hot spots and bottlenecks.

- Classify components: Determine which services are stateful or stateless, and whether they are CPU‑bound, memory‑bound or I/O‑bound.

Step 2: Choose Scaling Patterns for Each Component

- Stateful services (e.g., databases, model registries) may benefit from vertical scaling or hybrid scaling.

- Stateless services (e.g., inference APIs, feature extraction) are ideal for horizontal scaling.

- Consider diagonal scaling: scale vertically until it stops being cost efficient, then scale horizontally.
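
Steps 1 and 2 can be captured as a small rule table. The Python sketch below is one possible encoding, not a prescribed method: the `Component` fields, the utilisation numbers and the `at_cost_efficient_limit` flag are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    stateful: bool
    utilisation: dict[str, float]          # e.g. {"cpu": 0.9, "memory": 0.4, "io": 0.2}
    at_cost_efficient_limit: bool = False  # True once bigger machines stop paying off


def bottleneck(component: Component) -> str:
    """Step 1: the resource with the highest utilisation is the likely bound."""
    return max(component.utilisation, key=component.utilisation.get)


def recommend(component: Component) -> str:
    """Step 2: map the classification onto a scaling pattern."""
    if not component.stateful:
        return "horizontal"
    if component.at_cost_efficient_limit:
        return "hybrid"
    return "vertical"


api = Component("inference-api", stateful=False,
                utilisation={"cpu": 0.9, "memory": 0.4, "io": 0.2})
db = Component("model-registry", stateful=True,
               utilisation={"cpu": 0.3, "memory": 0.85, "io": 0.5})

for c in (api, db):
    print(c.name, bottleneck(c), recommend(c))
# inference-api cpu horizontal
# model-registry memory vertical
```

Keeping the rules in code makes the classification reviewable and easy to rerun as profiling data changes.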

Step 3: Implement Scaling Mechanisms

- Vertical Scaling: Resize servers; upgrade hardware; adjust memory and CPU allocations.

- Horizontal Scaling: Deploy load balancers, auto‑scaling groups and the Kubernetes HPA; use service discovery.

- Hybrid Scaling: Combine both; use VPA for resource optimisation; configure cluster autoscalers.

Step 4: Test & Validate

- Perform load testing to simulate traffic spikes and measure latency, throughput and cost. Adjust scaling thresholds and rules.

- Conduct chaos testing to ensure the system tolerates node failures and network partitions.

Step 5: Monitor & Optimise

- Implement observability with metrics, logs and traces to monitor resource utilisation and costs.

- Refine scaling policies based on real‑world usage; adjust thresholds, cool‑down periods and predictive models.

- Review costs and optimise by turning off unused instances or resizing underutilised servers.

Step 6: Plan for Growth & Sustainability

- Evaluate future workloads and plan capacity accordingly. Consider emerging trends like predictive autoscaling, serverless and edge computing.

- Incorporate sustainability goals, selecting green data centres and energy‑efficient hardware.

Clarifai Product Integration

- Clarifai provides detailed usage dashboards to monitor API calls, latency and throughput; these metrics feed into scaling decisions.

- Clarifai’s orchestration tools allow you to configure auto‑scaling policies directly from the dashboard or via API; you can define thresholds, replica counts and concurrency limits.

- Clarifai’s support team can assist in designing and implementing custom scaling strategies tailored to your models.

Expert Insights

- DevOps specialists emphasise automation: manual scaling does not scale with the business; infrastructure as code and automated policies are essential.

- Researchers stress the importance of continuous testing and monitoring; scaling strategies should evolve as workloads change.

- Clarifai engineers remind users to consider data governance and compliance when scaling across regions and clouds.

Common Pitfalls and How to Avoid Them

Quick Summary: What common mistakes do teams make when scaling?

Common pitfalls include over‑provisioning or under‑provisioning resources, neglecting failure modes, ignoring data consistency, lacking observability and disregarding energy consumption.

Over‑Scaling and Under‑Scaling

Over‑scaling leads to wasteful spending, especially if auto‑scaling policies are too aggressive. Under‑scaling causes performance degradation and potential outages. Avoid both by setting realistic thresholds, cool‑down periods and predictive rules.
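
Thresholds and cool‑down periods work together: a gap between the scale‑up and scale‑down thresholds avoids flapping, and the cool‑down stops the policy from reacting again before the last change has taken effect. The Python sketch below shows the mechanism in isolation; the class name and every numeric default are illustrative assumptions, not recommended values.

```python
from typing import Optional


class CooldownScaler:
    """Threshold-based scaler that changes replicas at most once per cool-down."""

    def __init__(self, scale_up_at: float = 0.75, scale_down_at: float = 0.30,
                 cooldown_s: float = 300, min_replicas: int = 1,
                 max_replicas: int = 20) -> None:
        self.scale_up_at = scale_up_at
        self.scale_down_at = scale_down_at
        self.cooldown_s = cooldown_s
        self.min_replicas = min_replicas
        self.max_replicas = max_replicas
        self.last_change: Optional[float] = None   # time of the last scaling action

    def decide(self, replicas: int, utilisation: float, now: float) -> int:
        """Return the replica count to run, given utilisation in the range 0..1."""
        in_cooldown = (self.last_change is not None
                       and now - self.last_change < self.cooldown_s)
        if in_cooldown:
            return replicas
        if utilisation > self.scale_up_at and replicas < self.max_replicas:
            target = replicas + 1
        elif utilisation < self.scale_down_at and replicas > self.min_replicas:
            target = replicas - 1
        else:
            return replicas   # inside the dead band between the two thresholds
        self.last_change = now
        return target


scaler = CooldownScaler()
print(scaler.decide(2, utilisation=0.90, now=0))     # 3 (scale up)
print(scaler.decide(3, utilisation=0.90, now=100))   # 3 (still cooling down)
print(scaler.decide(3, utilisation=0.90, now=400))   # 4 (cool-down elapsed)
```

Shortening the cool‑down makes the policy more responsive but also more prone to the aggressive over‑scaling described above.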

Ignoring Single Points of Failure

Teams sometimes scale up a single server without redundancy. If that server fails, the entire service goes down. Always design for failover and redundancy.

Complexity Debt in Horizontal Scaling

Deploying multiple instances without proper automation leads to configuration drift, where different nodes run slightly different software versions or configurations. Use orchestration and infrastructure as code to maintain consistency.
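
Configuration drift is easy to detect once each node's effective configuration can be collected. The Python sketch below fingerprints each configuration and flags nodes that differ from the majority. How the configurations are gathered is left out, and the node names and keys are invented for illustration.

```python
import hashlib
import json
from collections import Counter


def fingerprint(config: dict) -> str:
    """Stable hash of a configuration, independent of key order."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def find_drift(node_configs: dict[str, dict]) -> list[str]:
    """Return the nodes whose configuration differs from the most common one."""
    prints = {node: fingerprint(cfg) for node, cfg in node_configs.items()}
    if not prints:
        return []
    majority, _ = Counter(prints.values()).most_common(1)[0]
    return sorted(node for node, fp in prints.items() if fp != majority)


nodes = {
    "node-a": {"image": "v1.2", "workers": 4},
    "node-b": {"workers": 4, "image": "v1.2"},   # same settings, different key order
    "node-c": {"image": "v1.1", "workers": 4},   # older image: drifted
}
print(find_drift(nodes))   # ['node-c']
```

A check like this only reports drift; preventing it is the job of the orchestration and infrastructure‑as‑code tooling.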

Data Consistency Challenges

Distributed databases may suffer from replication lag and eventual consistency. Design your application to tolerate eventual consistency, or use hybrid scaling for stateful components.

Security & Compliance Risks

Scaling introduces new attack surfaces, such as poorly secured load balancers or misconfigured network policies. Apply zero‑trust principles and continuous compliance checks.

Neglecting Sustainability

Failing to consider the environmental impact of scaling increases energy consumption and carbon emissions. Choose energy‑efficient hardware and schedule non‑urgent tasks during low‑carbon periods.
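
Scheduling non‑urgent work in low‑carbon periods amounts to finding the cheapest window in a carbon‑intensity forecast. The Python sketch below uses a sliding window over hourly values; the forecast numbers are made up, and where such a forecast comes from (a grid operator or a cloud provider) is outside the scope of the example.

```python
def greenest_window(forecast: list[float], duration: int) -> int:
    """Start index of the contiguous window with the lowest total carbon intensity.

    forecast holds one value per hour (for example gCO2/kWh);
    duration is the job length in hours.
    """
    if duration <= 0 or duration > len(forecast):
        raise ValueError("duration must be between 1 and len(forecast)")
    window = sum(forecast[:duration])
    best_sum, best_start = window, 0
    for start in range(1, len(forecast) - duration + 1):
        # Slide the window one hour: add the new hour, drop the oldest one.
        window += forecast[start + duration - 1] - forecast[start - 1]
        if window < best_sum:
            best_sum, best_start = window, start
    return best_start


# Hypothetical hourly carbon intensity for the next eight hours.
hourly = [420, 390, 310, 180, 150, 210, 380, 450]
print(greenest_window(hourly, duration=2))   # 3 (hours 3 and 4)
```

A batch re‑training or re‑indexing job that can wait would then be queued to start at the returned hour.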

Clarifai Product Integration

- Clarifai’s platform provides best practices for securing AI endpoints, including API key management and encryption.

- Clarifai’s monitoring tools help detect over‑scaling or under‑scaling, enabling you to adjust policies before costs spiral.

Expert Insights

- Incident response teams emphasise the importance of chaos engineering: deliberately injecting failures to discover weaknesses in the scaling architecture.

- Security experts recommend continuous vulnerability scanning across all scaled resources.

- Clarifai encourages a proactive culture of observability and sustainability, embedding monitoring and green initiatives into scaling plans.

Conclusion & Recommendations

Quick Summary: Which scaling strategy should you choose?

There is no one‑size‑fits‑all answer; evaluate your application’s requirements and design accordingly. Start small with vertical scaling, plan for horizontal scaling, embrace hybrid strategies and adopt predictive autoscaling. Sustainability should be a core consideration.

Key Takeaways

- Vertical scaling is simple and effective for early‑stage or monolithic workloads, but it has hardware limits and introduces single points of failure.

- Horizontal scaling delivers elasticity and resilience, though it requires distributed systems expertise and careful orchestration.

- Hybrid (diagonal) scaling offers a balanced approach, leveraging the benefits of both strategies.

- Emerging trends like predictive autoscaling, serverless computing and edge computing will shape the future of scalability, making automation and AI integral to infrastructure management.

- Clarifai provides the tools and expertise to help you scale your AI workloads efficiently, whether on‑premises, in the cloud or across both.

Final Recommendations

- Start with vertical scaling for prototypes or small workloads, using Clarifai’s local runners or larger instance plans.

- Implement horizontal scaling when traffic increases, deploying multiple inference workers and load balancers; use Kubernetes HPA and Clarifai’s compute orchestration.

- Adopt hybrid scaling to balance cost, performance and reliability; use VPA to optimise pod sizes and cluster autoscaling to manage nodes.

- Monitor and optimise continuously, using Clarifai’s dashboards and third‑party observability tools. Adjust scaling policies as your workloads evolve.

- Plan for sustainability, selecting green cloud options and energy‑efficient hardware; incorporate carbon‑aware scheduling.

If you are unsure which approach to choose, reach out to Clarifai’s support team. We can help you analyse workloads, design scaling architectures and implement auto‑scaling policies. With the right strategy, your AI applications will remain responsive, cost efficient and environmentally responsible.

Frequently Asked Questions (FAQ)

What is the main difference between vertical and horizontal scaling?

Vertical scaling adds resources (CPU, memory, storage) to a single machine, while horizontal scaling adds more machines to distribute the workload, providing greater redundancy and scalability.

When should I choose vertical scaling?

Choose vertical scaling for small workloads, prototypes or legacy applications that require strong consistency and are easier to manage on a single server. It is also suitable for stateful services and on‑premises deployments with compliance constraints.

When should I choose horizontal scaling?

Horizontal scaling is ideal for applications with unpredictable or rapidly growing demand. It offers elasticity and fault tolerance, making it well suited to stateless services, microservices architectures and AI inference workloads.

What is diagonal scaling?

Diagonal (hybrid) scaling combines vertical and horizontal strategies. You scale up a machine until it reaches a cost‑efficient threshold and then scale out by adding nodes. This approach balances performance, cost and reliability.

How does Kubernetes handle scaling?

Kubernetes provides the Horizontal Pod Autoscaler (HPA) for scaling the number of pods, the Vertical Pod Autoscaler (VPA) for adjusting resource requests, and a cluster autoscaler for adding or removing nodes. Together, these tools enable dynamic, fine‑grained scaling of containerised workloads.

What is predictive autoscaling?

Predictive autoscaling uses machine‑learning models to forecast workload demand and allocate resources proactively. This reduces latency, prevents over‑provisioning and improves cost efficiency.

How can Clarifai help with scaling?

Clarifai’s compute orchestration and model inference APIs support both vertical and horizontal scaling. Users can choose larger inference instances, run multiple inference workers across regions, or combine local runners with cloud services. Clarifai also offers consulting and support for designing scalable, sustainable AI deployments.

Why should I care about sustainability in scaling?

Hyperscale data centres consume substantial energy, and poor scaling strategies can exacerbate this. Choosing energy‑efficient hardware and leveraging predictive autoscaling reduces energy usage and carbon emissions, aligning with corporate sustainability goals.

What is the best way to start implementing scaling?

Begin by monitoring your current workloads to identify bottlenecks. Create a decision matrix based on workload characteristics, growth projections and cost constraints. Start with vertical scaling for immediate needs, then adopt horizontal or hybrid scaling as traffic increases. Use automation and observability tools, and consult experts like Clarifai’s engineering team for guidance.
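
A decision matrix of the kind described in this answer can be as simple as a weighted score per option. In the Python sketch below, the criteria names, weights and 1 to 5 scores are placeholders to be replaced with your own assessment; they are not recommendations.

```python
def score_options(weights: dict[str, float],
                  scores: dict[str, dict[str, float]]) -> dict[str, float]:
    """Weighted total per option: sum of weight x score over all criteria."""
    return {
        option: sum(weights[criterion] * option_scores[criterion]
                    for criterion in weights)
        for option, option_scores in scores.items()
    }


# How much each criterion matters for this (hypothetical) workload.
weights = {"statelessness": 3, "growth": 2, "cost_pressure": 1}

# How well each strategy fits each criterion, on a 1-5 scale (hypothetical).
scores = {
    "vertical":   {"statelessness": 1, "growth": 1, "cost_pressure": 3},
    "horizontal": {"statelessness": 5, "growth": 5, "cost_pressure": 3},
    "hybrid":     {"statelessness": 3, "growth": 4, "cost_pressure": 4},
}

totals = score_options(weights, scores)
print(totals)                        # {'vertical': 8, 'horizontal': 28, 'hybrid': 21}
print(max(totals, key=totals.get))   # horizontal
```

The value of the exercise lies less in the final number than in forcing the team to state its weights explicitly.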