Baidu's AI research team has just released ERNIE-4.5-21B-A3B-Thinking, a new reasoning-focused large language model designed around efficiency, long-context reasoning, and tool integration. As part of the ERNIE-4.5 family, the model uses a Mixture-of-Experts (MoE) architecture with 21B total parameters but only 3B active parameters per token, making it computationally efficient while maintaining competitive reasoning capability. Released under the Apache-2.0 license, it is available for both research and commercial deployment via Hugging Face.

What is the architectural design of ERNIE-4.5-21B-A3B-Thinking?

ERNIE-4.5-21B-A3B-Thinking is built on a Mixture-of-Experts backbone. Instead of activating all 21B parameters, the router selects a subset of experts, resulting in 3B active parameters per token. This structure reduces computation without compromising the specialization of different experts. The research team applies a router orthogonalization loss and a token-balanced loss to encourage diverse expert activation and stable training.
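To make the routing idea concrete, here is a minimal sketch of generic top-k expert routing in plain Python. It is not Baidu's implementation: the expert count, the `top_k` value, the toy experts, and the function names `route_token` and `moe_layer` are all assumptions chosen for illustration, and the auxiliary losses mentioned above are not modeled.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, top_k):
    """Pick the top_k experts for one token and renormalize their gate weights."""
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return {i: probs[i] / norm for i in chosen}


def moe_layer(token, experts, router_logits, top_k):
    """Run only the selected experts and return their gate-weighted sum."""
    gates = route_token(router_logits, top_k)
    out = [0.0] * len(token)
    for idx, weight in gates.items():
        expert_out = experts[idx](token)
        out = [o + weight * e for o, e in zip(out, expert_out)]
    return out, gates


# Hypothetical toy setup: 8 experts, 2 active per token (not the real configuration).
NUM_EXPERTS, TOP_K = 8, 2
# Each toy "expert" just scales its input by a different constant.
experts = [lambda x, s=i + 1: [s * v for v in x] for i in range(NUM_EXPERTS)]
rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
output, gates = moe_layer([1.0, -2.0], experts, logits, TOP_K)
print(f"experts used: {sorted(gates)} of {NUM_EXPERTS}")
```

Only the experts in `gates` are ever called, which is where the compute saving comes from: the unselected experts hold parameters but cost nothing for this token.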

This design offers a middle ground between small dense models and ultra-large systems. The research team's working assumption is that roughly 3B active parameters per token may represent a practical sweet spot between reasoning performance and deployment efficiency.
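A rough back-of-the-envelope calculation shows what that trade-off looks like. The sketch below uses the common rule of thumb of about 2 FLOPs per active parameter per token for a forward pass; that rule is an approximation brought in here for illustration (it ignores attention cost over the context) and is not a figure from Baidu.

```python
TOTAL_PARAMS = 21e9   # total parameters, from the article
ACTIVE_PARAMS = 3e9   # active parameters per token, from the article


def forward_flops_per_token(params):
    """Rule-of-thumb estimate: ~2 FLOPs per parameter per token (assumption)."""
    return 2 * params


active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS
compute_ratio = forward_flops_per_token(TOTAL_PARAMS) / forward_flops_per_token(ACTIVE_PARAMS)

print(f"active fraction of parameters: {active_fraction:.1%}")
print(f"estimated compute saving vs. a dense 21B model: {compute_ratio:.0f}x")
```

Under this approximation, each token touches about one seventh of the weights, so per-token compute is closer to that of a 3B dense model while the full 21B must still fit in memory.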

How does the model handle long-context reasoning?

A defining capability of ERNIE-4.5-21B-A3B-Thinking is its 128K context length. This allows the model to process very long documents, perform extended multi-step reasoning, and integrate structured data sources such as academic papers or multi-file codebases.

The research team achieves this through progressive scaling of Rotary Position Embeddings (RoPE), gradually increasing the frequency base from 10K up to 500K during training. Additional optimizations, including FlashMask attention and memory-efficient scheduling, make these long-context operations computationally feasible.
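One way to see why a larger frequency base helps is to compare the longest rotary wavelength at the two endpoints. The sketch below uses the standard RoPE frequency formula; the head dimension of 128 is an assumption for illustration, and the intermediate steps of the actual training schedule are not modeled.

```python
import math


def rope_inv_freqs(head_dim, base):
    """Standard RoPE inverse frequencies: base ** (-2i / head_dim)."""
    return [base ** (-2.0 * i / head_dim) for i in range(head_dim // 2)]


def longest_wavelength(head_dim, base):
    """Tokens needed for the slowest-rotating dimension pair to complete one full turn."""
    return 2.0 * math.pi / min(rope_inv_freqs(head_dim, base))


HEAD_DIM = 128            # assumed head dimension, for illustration only
CONTEXT = 128 * 1024      # 128K-token context window

for base in (10_000, 500_000):
    wl = longest_wavelength(HEAD_DIM, base)
    print(f"base={base:>7}: longest wavelength ~ {wl:,.0f} tokens, "
          f"exceeds 128K window: {wl > CONTEXT}")
```

With the smaller base, even the slowest-rotating pair wraps around well inside a 128K window, so distant positions can alias; with the larger base, the slowest pairs stay unambiguous across the whole window.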

What training strategy supports its reasoning?

The model follows the multi-stage recipe defined across the ERNIE-4.5 family:

- Stage I – Text-only pretraining builds the core language backbone, starting with an 8K context and expanding to 128K.

- Stage II – Vision training is skipped for this text-only variant.

- Stage III – Joint multimodal training is not used here, as A3B-Thinking is purely textual.

Post-training focuses on reasoning tasks. The research team employs Supervised Fine-Tuning (SFT) across mathematics, logic, coding, and science, followed by Progressive Reinforcement Learning (PRL). The reinforcement phases begin with logic, then extend to mathematics and programming, and finally to broader reasoning tasks. This is enhanced by Unified Preference Optimization (UPO), which integrates preference learning with PPO to stabilize alignment and reduce reward hacking.

What role does tool usage play in this model?

ERNIE-4.5-21B-A3B-Thinking supports structured tool and function calling, making it useful for scenarios where external computation or retrieval is required. Developers can integrate it with vLLM, Transformers 4.54+, and FastDeploy. This tool-use capability is particularly suited to program synthesis, symbolic reasoning, and multi-agent workflows.

Built-in function calling allows the model to reason over long contexts while dynamically invoking external APIs, a key requirement for applied reasoning in enterprise systems.
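On the application side, function calling usually comes down to parsing a structured call from the model's output, running the matching function, and feeding the result back as a new message. The sketch below shows that loop step in plain Python. The JSON shape (`name`, `arguments`), the `get_weather` tool, and the `dispatch_tool_call` helper are hypothetical; the real wire format depends on the model's chat template and the serving stack in use.

```python
import json


def get_weather(city):
    """Stand-in for an external API call (hypothetical tool, fixed dummy data)."""
    return {"city": city, "temp_c": 21}


# Registry mapping tool names the model may emit to local Python callables.
TOOLS = {"get_weather": get_weather}


def dispatch_tool_call(model_output):
    """Parse a JSON tool call emitted by the model and run the matching function."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        payload = {"error": f"unknown tool: {name}"}
    else:
        payload = TOOLS[name](**call.get("arguments", {}))
    # The result goes back to the model as a tool message in the conversation.
    return {"role": "tool", "name": name, "content": json.dumps(payload)}


# Assumed example of what a model might emit when it decides to call a tool.
model_output = '{"name": "get_weather", "arguments": {"city": "Beijing"}}'
tool_message = dispatch_tool_call(model_output)
print(tool_message)
```

Returning an error payload for unknown tools, rather than raising, lets the model see the failure and recover in its next turn.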

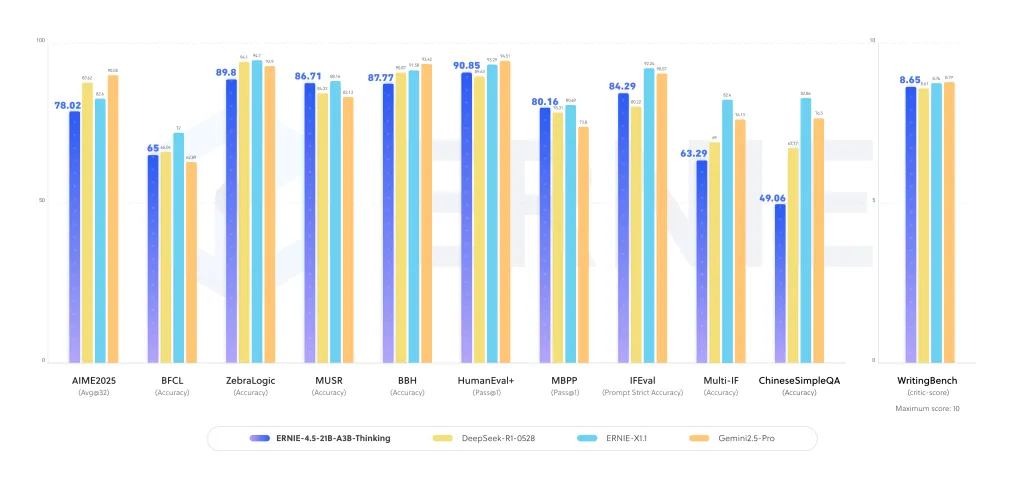

How does ERNIE-4.5-21B-A3B-Thinking perform on reasoning benchmarks?

The model shows strong performance improvements across logical reasoning, mathematics, scientific QA, and programming tasks. In evaluations, it demonstrates:

- Improved accuracy on multi-step reasoning datasets, where long chains of thought are required.

- Competitiveness with larger dense models on STEM reasoning tasks.

- Stable text generation and academic synthesis performance, benefiting from extended-context training.

These results suggest that the MoE structure amplifies reasoning specialization, making the model efficient without requiring trillion-scale dense parameters.

How does it compare to other reasoning-focused LLMs?

This release enters a landscape that includes OpenAI's o3, Anthropic's Claude 4, DeepSeek-R1, and Qwen-3. Many of these competitors rely on dense architectures or larger active parameter counts. The Baidu research team's choice of a compact MoE with 3B active parameters offers a different balance:

- Scalability: Sparse activation reduces compute overhead while scaling expert capacity.

- Long-context readiness: The 128K context is trained directly, not retrofitted.

- Commercial openness: The Apache-2.0 license lowers adoption friction for enterprises.

Abstract

ERNIE-4.5-21B-A3B-Thinking shows that deep reasoning can be achieved without massive dense parameter counts. By combining efficient MoE routing, 128K context training, and tool integration, Baidu's research team offers a model that balances research-grade reasoning with deployment feasibility.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.