Alibaba Cloud simply up to date the open-source panorama. As we speak, the Qwen group launched Qwen3.5, the latest technology of their massive language mannequin (LLM) household. Essentially the most highly effective model is Qwen3.5-397B-A17B. This mannequin is a sparse Combination-of-Specialists (MoE) system. It combines huge reasoning energy with excessive effectivity.

Qwen3.5 is a local vision-language mannequin. It’s designed particularly for AI brokers. It may well see, code, and motive throughout 201 languages.

The Core Structure: 397B Complete, 17B Energetic

The technical specs of Qwen3.5-397B-A17B are spectacular. The mannequin comprises 397B complete parameters. Nonetheless, it makes use of a sparse MoE design. This implies it solely prompts 17B parameters throughout any single ahead move.

This 17B activation rely is crucial quantity for devs. It permits the mannequin to supply the intelligence of a 400B mannequin. However it runs with the pace of a a lot smaller mannequin. The Qwen group stories a 8.6x to 19.0x improve in decoding throughput in comparison with earlier generations. This effectivity solves the excessive price of working large-scale AI.

Environment friendly Hybrid Structure: Gated Delta Networks

Qwen3.5 doesn’t use a typical Transformer design. It makes use of an ‘Environment friendly Hybrid Structure.’ Most LLMs rely solely on Consideration mechanisms. These can change into gradual with lengthy textual content. Qwen3.5 combines Gated Delta Networks (linear consideration) with Combination-of-Specialists (MoE).

The mannequin consists of 60 layers. The hidden dimension dimension is 4,096. These layers comply with a particular ‘Hidden Structure.’ The structure teams layers into units of 4.

- 3 blocks use Gated DeltaNet-plus-MoE.

- 1 block makes use of Gated Consideration-plus-MoE.

- This sample repeats 15 occasions to achieve 60 layers.

Technical particulars embrace:

- Gated DeltaNet: It makes use of 64 linear consideration heads for Values (V). It makes use of 16 heads for Queries and Keys (QK).

- MoE Construction: The mannequin has 512 complete specialists. Every token prompts 10 routed specialists and 1 shared skilled. This equals 11 energetic specialists per token.

- Vocabulary: The mannequin makes use of a padded vocabulary of 248,320 tokens.

Native Multimodal Coaching: Early Fusion

Qwen3.5 is a native vision-language mannequin. Many different fashions add imaginative and prescient capabilities later. Qwen3.5 used ‘Early Fusion’ coaching. This implies the mannequin discovered from photos and textual content on the similar time.

The coaching used trillions of multimodal tokens. This makes Qwen3.5 higher at visible reasoning than earlier Qwen3-VL variations. It’s extremely able to ‘agentic’ duties. For instance, it could actually take a look at a UI screenshot and generate the precise HTML and CSS code. It may well additionally analyze lengthy movies with second-level accuracy.

The mannequin helps the Mannequin Context Protocol (MCP). It additionally handles complicated function-calling. These options are very important for constructing brokers that management apps or browse the net. Within the IFBench take a look at, it scored 76.5. This rating beats many proprietary fashions.

Fixing the Reminiscence Wall: 1M Context Size

Lengthy-form knowledge processing is a core function of Qwen3.5. The bottom mannequin has a local context window of 262,144 (256K) tokens. The hosted Qwen3.5-Plus model goes even additional. It helps 1M tokens.

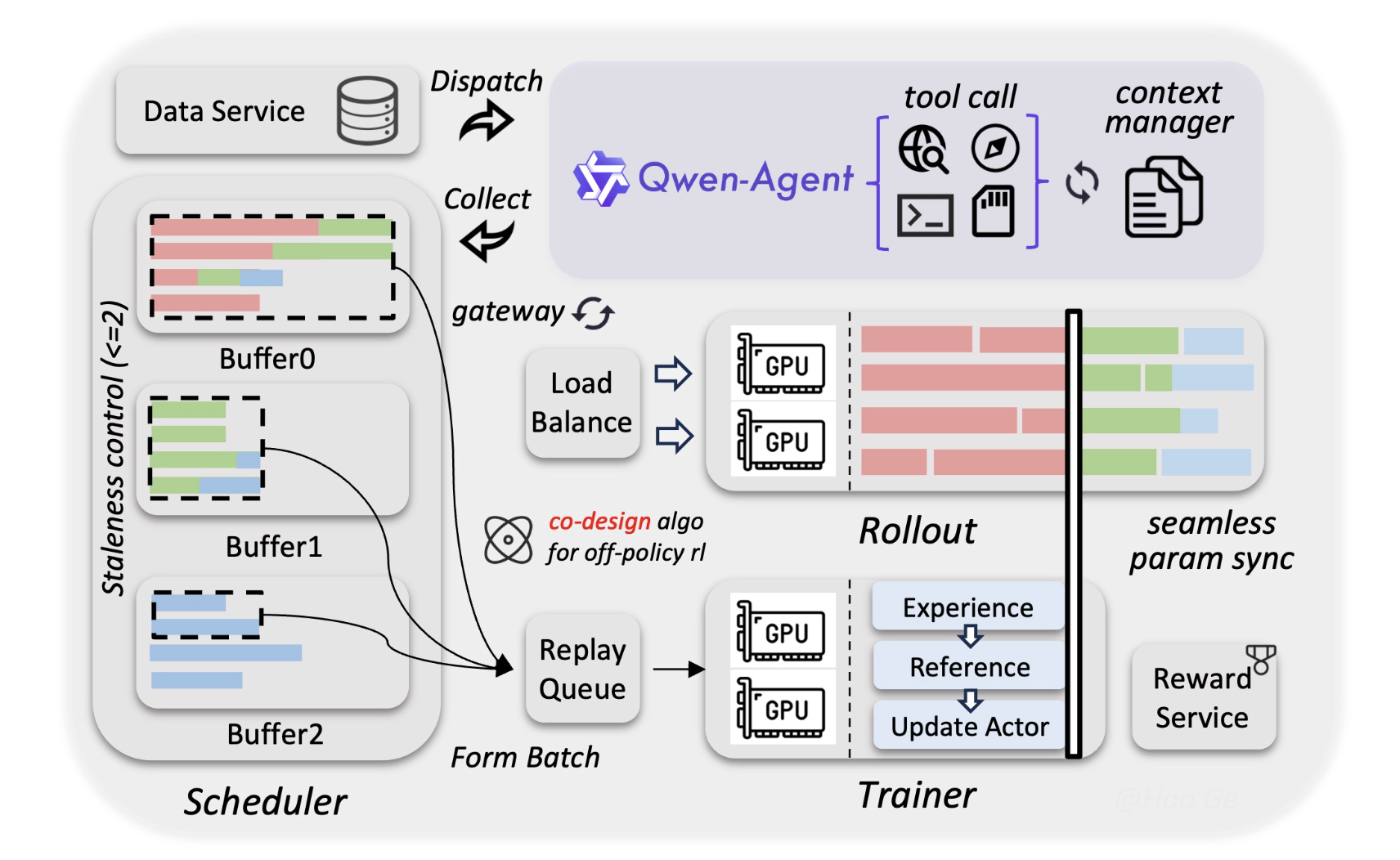

Alibaba Qwen group used a brand new asynchronous Reinforcement Studying (RL) framework for this. It ensures the mannequin stays correct even on the finish of a 1M token doc. For Devs, this implies you’ll be able to feed a complete codebase into one immediate. You don’t all the time want a posh Retrieval-Augmented Era (RAG) system.

Efficiency and Benchmarks

The mannequin excels in technical fields. It achieved excessive scores on Humanity’s Final Examination (HLE-Verified). It is a tough benchmark for AI information.

- Coding: It reveals parity with top-tier closed-source fashions.

- Math: The mannequin makes use of ‘Adaptive Software Use.’ It may well write Python code to resolve math issues. It then runs the code to confirm the reply.

- Languages: It helps 201 totally different languages and dialects. It is a huge leap from the 119 languages within the earlier model.

Key Takeaways

- Hybrid Effectivity (MoE + Gated Delta Networks): Qwen3.5 makes use of a 3:1 ratio of Gated Delta Networks (linear consideration) to straightforward Gated Consideration blocks throughout 60 layers. This hybrid design permits for an 8.6x to 19.0x improve in decoding throughput in comparison with earlier generations.

- Huge Scale, Low Footprint: The Qwen3.5-397B-A17B options 397B complete parameters however solely prompts 17B per token. You get 400B-class intelligence with the inference pace and reminiscence necessities of a a lot smaller mannequin.

- Native Multimodal Basis: Not like ‘bolted-on’ imaginative and prescient fashions, Qwen3.5 was skilled by way of Early Fusion on trillions of textual content and picture tokens concurrently. This makes it a top-tier visible agent, scoring 76.5 on IFBench for following complicated directions in visible contexts.

- 1M Token Context: Whereas the bottom mannequin helps a local 256k token context, the hosted Qwen3.5-Plus handles as much as 1M tokens. This huge window permits devs to course of total codebases or 2-hour movies with no need complicated RAG pipelines.

Take a look at the Technical particulars, Mannequin Weights and GitHub Repo. Additionally, be happy to comply with us on Twitter and don’t neglect to hitch our 100k+ ML SubReddit and Subscribe to our Publication. Wait! are you on telegram? now you’ll be able to be a part of us on telegram as properly.