On this tutorial, we discover how federated studying behaves when the normal centralized aggregation server is eliminated and changed with a totally decentralized, peer-to-peer gossip mechanism. We implement each centralized FedAvg and decentralized Gossip Federated Studying from scratch and introduce client-side differential privateness by injecting calibrated noise into native mannequin updates. By working managed experiments on non-IID MNIST information, we look at how privateness power, as measured by completely different epsilon values, straight impacts convergence velocity, stability, and closing mannequin accuracy. Additionally, we research the sensible trade-offs between privateness ensures and studying effectivity in real-world decentralized studying methods. Try the Full Codes right here.

import os, math, random, time

from dataclasses import dataclass

from typing import Dict, Record, Tuple

import subprocess, sys

def pip_install(pkgs):

subprocess.check_call([sys.executable, "-m", "pip", "install", "-q"] + pkgs)

pip_install(["torch", "torchvision", "numpy", "matplotlib", "networkx", "tqdm"])

import numpy as np

import torch

import torch.nn as nn

import torch.nn.practical as F

from torch.utils.information import DataLoader, Subset

from torchvision import datasets, transforms

import matplotlib.pyplot as plt

import networkx as nx

from tqdm import trange

SEED = 7

random.seed(SEED)

np.random.seed(SEED)

torch.manual_seed(SEED)

torch.cuda.manual_seed_all(SEED)

torch.backends.cudnn.deterministic = False

torch.backends.cudnn.benchmark = True

rework = transforms.Compose([transforms.ToTensor()])

train_ds = datasets.MNIST(root="/content material/information", prepare=True, obtain=True, rework=rework)

test_ds = datasets.MNIST(root="/content material/information", prepare=False, obtain=True, rework=rework)We arrange the execution setting and put in all required dependencies. We initialize random seeds and system settings to keep up reproducibility throughout experiments. We additionally load the MNIST dataset, which serves as a light-weight but efficient benchmark for federated studying experiments. Try the Full Codes right here.

def make_noniid_clients(dataset, num_clients=20, shards_per_client=2, seed=SEED):

rng = np.random.default_rng(seed)

y = np.array([dataset[i][1] for i in vary(len(dataset))])

idx = np.arange(len(dataset))

idx_sorted = idx[np.argsort(y)]

num_shards = num_clients * shards_per_client

shard_size = len(dataset) // num_shards

shards = [idx_sorted[i*shard_size:(i+1)*shard_size] for i in vary(num_shards)]

rng.shuffle(shards)

client_indices = []

for c in vary(num_clients):

take = shards[c*shards_per_client:(c+1)*shards_per_client]

client_indices.append(np.concatenate(take))

return client_indices

NUM_CLIENTS = 20

client_indices = make_noniid_clients(train_ds, num_clients=NUM_CLIENTS, shards_per_client=2)

test_loader = DataLoader(test_ds, batch_size=1024, shuffle=False, num_workers=2, pin_memory=True)

class MLP(nn.Module):

def __init__(self):

tremendous().__init__()

self.fc1 = nn.Linear(28*28, 256)

self.fc2 = nn.Linear(256, 128)

self.fc3 = nn.Linear(128, 10)

def ahead(self, x):

x = x.view(x.measurement(0), -1)

x = F.relu(self.fc1(x))

x = F.relu(self.fc2(x))

return self.fc3(x)We assemble a non-IID information distribution by partitioning the coaching dataset into label-based shards throughout a number of shoppers. We outline a compact neural community mannequin that balances expressiveness and computational effectivity. It allows us to realistically simulate information heterogeneity, a important problem in federated studying methods. Try the Full Codes right here.

def get_model_params(mannequin):

return {ok: v.detach().clone() for ok, v in mannequin.state_dict().objects()}

def set_model_params(mannequin, params):

mannequin.load_state_dict(params, strict=True)

def add_params(a, b):

return {ok: a[k] + b[k] for ok in a.keys()}

def sub_params(a, b):

return {ok: a[k] - b[k] for ok in a.keys()}

def scale_params(a, s):

return {ok: a[k] * s for ok in a.keys()}

def mean_params(params_list):

out = {ok: torch.zeros_like(params_list[0][k]) for ok in params_list[0].keys()}

for p in params_list:

for ok in out.keys():

out[k] += p[k]

for ok in out.keys():

out[k] /= len(params_list)

return out

def l2_norm_params(delta):

sq = 0.0

for v in delta.values():

sq += float(torch.sum(v.float() * v.float()).merchandise())

return math.sqrt(sq)

def dp_sanitize_update(delta, clip_norm, epsilon, delta_dp, rng):

norm = l2_norm_params(delta)

scale = min(1.0, clip_norm / (norm + 1e-12))

clipped = scale_params(delta, scale)

if epsilon is None or math.isinf(epsilon) or epsilon <= 0:

return clipped

sigma = clip_norm * math.sqrt(2.0 * math.log(1.25 / delta_dp)) / epsilon

noised = {}

for ok, v in clipped.objects():

noise = torch.regular(imply=0.0, std=sigma, measurement=v.form, generator=rng, system=v.system, dtype=v.dtype)

noised[k] = v + noise

return noisedWe implement parameter manipulation utilities that allow addition, subtraction, scaling, and averaging of mannequin weights throughout shoppers. We introduce differential privateness by clipping native updates and injecting Gaussian noise, each decided by the chosen privateness finances. It serves because the core privateness mechanism that permits us to review the privateness–utility trade-off in each centralized and decentralized settings. Try the Full Codes right here.

def local_train_one_client(base_params, client_id, epochs, lr, batch_size, weight_decay=0.0):

mannequin = MLP().to(system)

set_model_params(mannequin, base_params)

mannequin.prepare()

loader = DataLoader(

Subset(train_ds, client_indices[client_id].tolist() if hasattr(client_indices[client_id], "tolist") else client_indices[client_id]),

batch_size=batch_size,

shuffle=True,

num_workers=2,

pin_memory=True

)

decide = torch.optim.SGD(mannequin.parameters(), lr=lr, momentum=0.9, weight_decay=weight_decay)

for _ in vary(epochs):

for xb, yb in loader:

xb, yb = xb.to(system), yb.to(system)

decide.zero_grad(set_to_none=True)

logits = mannequin(xb)

loss = F.cross_entropy(logits, yb)

loss.backward()

decide.step()

return get_model_params(mannequin)

@torch.no_grad()

def consider(params):

mannequin = MLP().to(system)

set_model_params(mannequin, params)

mannequin.eval()

whole, appropriate = 0, 0

loss_sum = 0.0

for xb, yb in test_loader:

xb, yb = xb.to(system), yb.to(system)

logits = mannequin(xb)

loss = F.cross_entropy(logits, yb, discount="sum")

loss_sum += float(loss.merchandise())

pred = torch.argmax(logits, dim=1)

appropriate += int((pred == yb).sum().merchandise())

whole += int(yb.numel())

return loss_sum / whole, appropriate / wholeWe outline the native coaching loop that every shopper executes independently on its non-public information. We additionally implement a unified analysis routine to measure check loss and accuracy for any given mannequin state. Collectively, these features simulate sensible federated studying habits the place coaching and analysis are absolutely decoupled from information possession. Try the Full Codes right here.

@dataclass

class FedAvgConfig:

rounds: int = 25

clients_per_round: int = 10

local_epochs: int = 1

lr: float = 0.06

batch_size: int = 64

clip_norm: float = 2.0

epsilon: float = math.inf

delta_dp: float = 1e-5

def run_fedavg(cfg):

global_params = get_model_params(MLP().to(system))

historical past = {"test_loss": [], "test_acc": []}

for r in trange(cfg.rounds):

chosen = random.pattern(vary(NUM_CLIENTS), ok=cfg.clients_per_round)

start_params = global_params

updates = []

for cid in chosen:

local_params = local_train_one_client(start_params, cid, cfg.local_epochs, cfg.lr, cfg.batch_size)

delta = sub_params(local_params, start_params)

rng = torch.Generator(system=system)

rng.manual_seed(SEED * 10000 + r * 100 + cid)

delta_dp = dp_sanitize_update(delta, cfg.clip_norm, cfg.epsilon, cfg.delta_dp, rng)

updates.append(delta_dp)

avg_update = mean_params(updates)

global_params = add_params(start_params, avg_update)

tl, ta = consider(global_params)

historical past["test_loss"].append(tl)

historical past["test_acc"].append(ta)

return historical past, global_paramsWe implement the centralized FedAvg algorithm, the place a subset of shoppers trains domestically and sends differentially non-public updates to a central aggregator. We monitor mannequin efficiency throughout communication rounds to look at convergence habits below various privateness budgets. This serves because the baseline in opposition to which decentralized gossip-based studying is in contrast. Try the Full Codes right here.

@dataclass

class GossipConfig:

rounds: int = 25

local_epochs: int = 1

lr: float = 0.06

batch_size: int = 64

clip_norm: float = 2.0

epsilon: float = math.inf

delta_dp: float = 1e-5

topology: str = "ring"

p: float = 0.2

gossip_pairs_per_round: int = 10

def build_topology(cfg):

if cfg.topology == "ring":

G = nx.cycle_graph(NUM_CLIENTS)

elif cfg.topology == "erdos_renyi":

G = nx.erdos_renyi_graph(NUM_CLIENTS, cfg.p, seed=SEED)

if not nx.is_connected(G):

comps = record(nx.connected_components(G))

for i in vary(len(comps) - 1):

a = subsequent(iter(comps[i]))

b = subsequent(iter(comps[i+1]))

G.add_edge(a, b)

else:

elevate ValueError

return G

def run_gossip(cfg):

node_params = [get_model_params(MLP().to(device)) for _ in range(NUM_CLIENTS)]

G = build_topology(cfg)

historical past = {"avg_test_loss": [], "avg_test_acc": []}

for r in trange(cfg.rounds):

new_params = []

for cid in vary(NUM_CLIENTS):

p0 = node_params[cid]

p_local = local_train_one_client(p0, cid, cfg.local_epochs, cfg.lr, cfg.batch_size)

delta = sub_params(p_local, p0)

rng = torch.Generator(system=system)

rng.manual_seed(SEED * 10000 + r * 100 + cid)

delta_dp = dp_sanitize_update(delta, cfg.clip_norm, cfg.epsilon, cfg.delta_dp, rng)

p_local_dp = add_params(p0, delta_dp)

new_params.append(p_local_dp)

node_params = new_params

edges = record(G.edges())

for _ in vary(cfg.gossip_pairs_per_round):

i, j = random.alternative(edges)

avg = mean_params([node_params[i], node_params[j]])

node_params[i] = avg

node_params[j] = avg

losses, accs = [], []

for cid in vary(NUM_CLIENTS):

tl, ta = consider(node_params[cid])

losses.append(tl)

accs.append(ta)

historical past["avg_test_loss"].append(float(np.imply(losses)))

historical past["avg_test_acc"].append(float(np.imply(accs)))

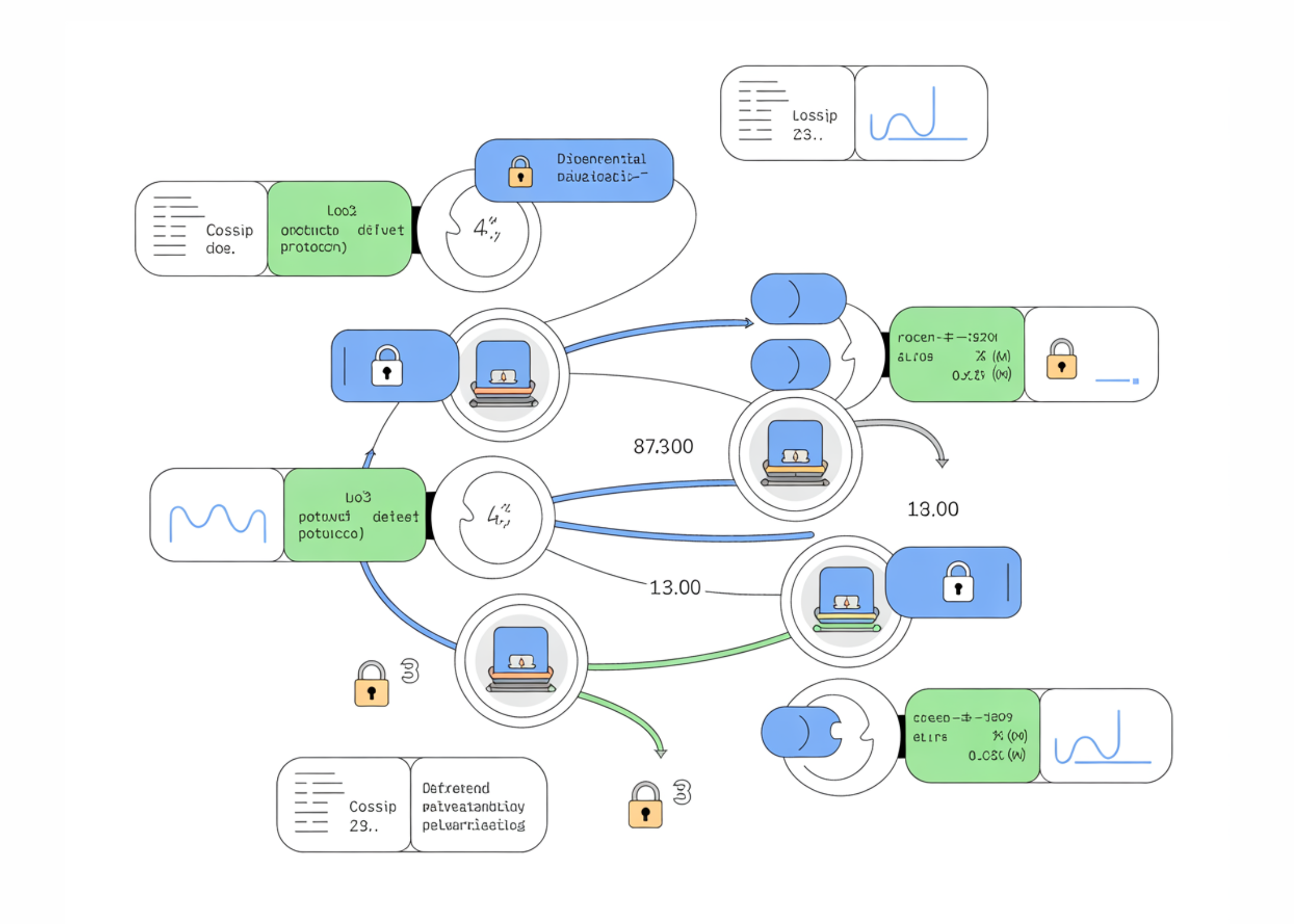

return historical past, node_paramsWe implement decentralized Gossip Federated Studying utilizing a peer-to-peer mannequin that exchanges over a predefined community topology. We simulate repeated native coaching and pairwise parameter averaging with out counting on a central server. It permits us to research how privateness noise propagates via decentralized communication patterns and impacts convergence. Try the Full Codes right here.

eps_sweep = [math.inf, 8.0, 4.0, 2.0, 1.0]

ROUNDS = 20

fedavg_results = {}

gossip_results = {}

common_local_epochs = 1

common_lr = 0.06

common_bs = 64

common_clip = 2.0

common_delta = 1e-5

for eps in eps_sweep:

fcfg = FedAvgConfig(

rounds=ROUNDS,

clients_per_round=10,

local_epochs=common_local_epochs,

lr=common_lr,

batch_size=common_bs,

clip_norm=common_clip,

epsilon=eps,

delta_dp=common_delta

)

hist_f, _ = run_fedavg(fcfg)

fedavg_results[eps] = hist_f

gcfg = GossipConfig(

rounds=ROUNDS,

local_epochs=common_local_epochs,

lr=common_lr,

batch_size=common_bs,

clip_norm=common_clip,

epsilon=eps,

delta_dp=common_delta,

topology="ring",

gossip_pairs_per_round=10

)

hist_g, _ = run_gossip(gcfg)

gossip_results[eps] = hist_g

plt.determine(figsize=(10, 5))

for eps in eps_sweep:

plt.plot(fedavg_results[eps]["test_acc"], label=f"FedAvg eps={eps}")

plt.xlabel("Spherical")

plt.ylabel("Accuracy")

plt.legend()

plt.grid(True)

plt.present()

plt.determine(figsize=(10, 5))

for eps in eps_sweep:

plt.plot(gossip_results[eps]["avg_test_acc"], label=f"Gossip eps={eps}")

plt.xlabel("Spherical")

plt.ylabel("Avg Accuracy")

plt.legend()

plt.grid(True)

plt.present()

final_fed = [fedavg_results[eps]["test_acc"][-1] for eps in eps_sweep]

final_gos = [gossip_results[eps]["avg_test_acc"][-1] for eps in eps_sweep]

x = [100.0 if math.isinf(eps) else eps for eps in eps_sweep]

plt.determine(figsize=(8, 5))

plt.plot(x, final_fed, marker="o", label="FedAvg")

plt.plot(x, final_gos, marker="o", label="Gossip")

plt.xlabel("Epsilon")

plt.ylabel("Ultimate Accuracy")

plt.legend()

plt.grid(True)

plt.present()

def rounds_to_threshold(acc_curve, threshold):

for i, a in enumerate(acc_curve):

if a >= threshold:

return i + 1

return None

best_f = fedavg_results[math.inf]["test_acc"][-1]

best_g = gossip_results[math.inf]["avg_test_acc"][-1]

th_f = 0.9 * best_f

th_g = 0.9 * best_g

for eps in eps_sweep:

rf = rounds_to_threshold(fedavg_results[eps]["test_acc"], th_f)

rg = rounds_to_threshold(gossip_results[eps]["avg_test_acc"], th_g)

print(eps, rf, rg)We run managed experiments throughout a number of privateness ranges and accumulate outcomes for each centralized and decentralized coaching methods. We visualize convergence tendencies and closing accuracy to obviously expose the privateness–utility trade-off. We additionally compute convergence velocity metrics to quantitatively examine how completely different aggregation schemes reply to growing privateness constraints.

In conclusion, we demonstrated that decentralization essentially modifications how differential privateness noise propagates via a federated system. We noticed that whereas centralized FedAvg usually converges quicker below weak privateness constraints, gossip-based federated studying is extra sturdy to noisy updates at the price of slower convergence. Our experiments highlighted that stronger privateness ensures considerably sluggish studying in each settings, however the impact is amplified in decentralized topologies attributable to delayed info mixing. Total, we confirmed that designing privacy-preserving federated methods requires collectively reasoning about aggregation topology, communication patterns, and privateness budgets fairly than treating them as impartial decisions.

Try the Full Codes right here. Additionally, be happy to comply with us on Twitter and don’t overlook to affix our 100k+ ML SubReddit and Subscribe to our E-newsletter. Wait! are you on telegram? now you may be a part of us on telegram as properly.