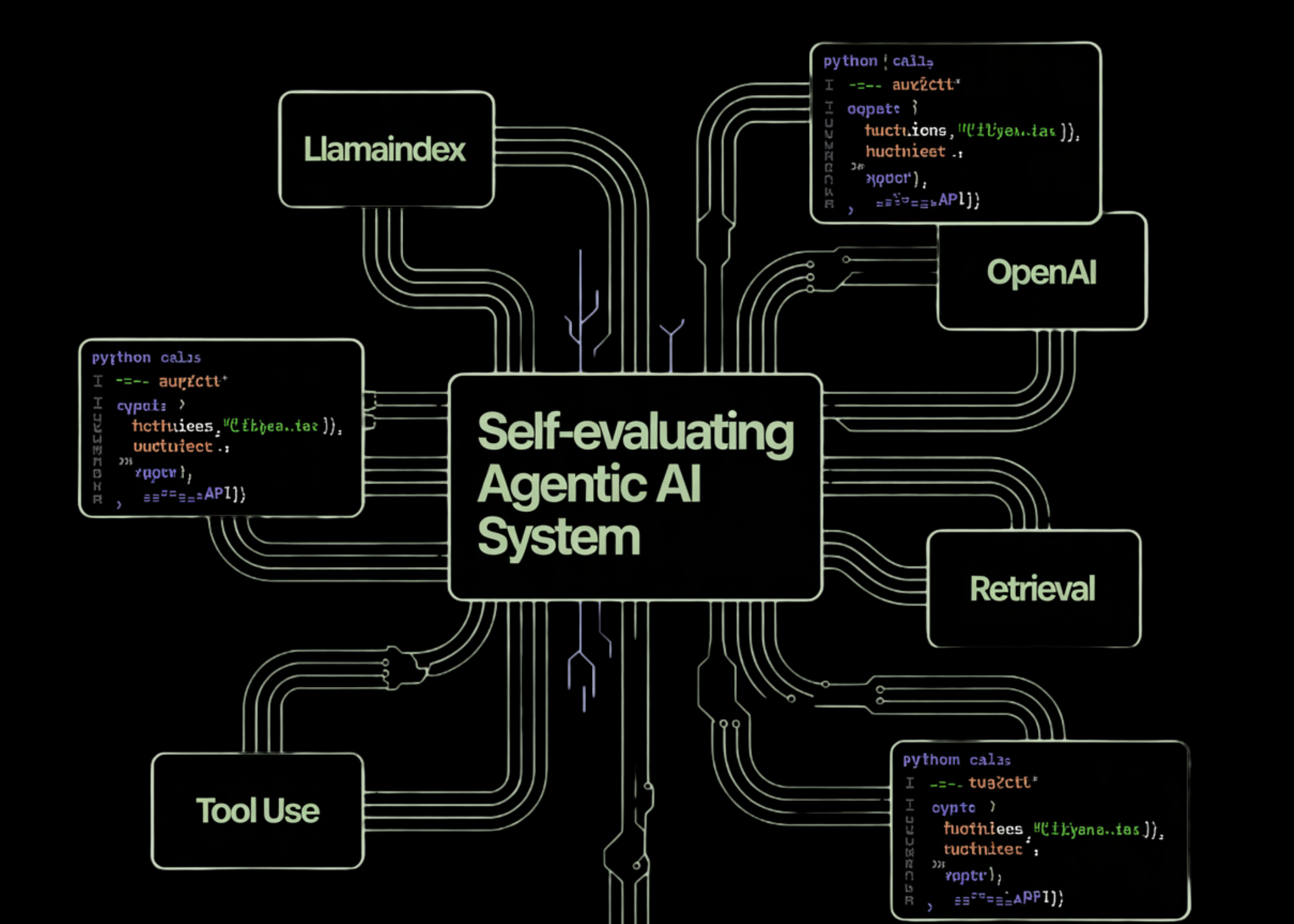

In this tutorial, we build an advanced agentic AI workflow using LlamaIndex and OpenAI models. We focus on designing a reliable retrieval-augmented generation (RAG) agent that can reason over evidence, use tools deliberately, and evaluate its own outputs for quality. By structuring the system around retrieval, answer synthesis, and self-evaluation, we demonstrate how agentic patterns go beyond simple chatbots and move toward more trustworthy, controllable AI systems suitable for research and analytical use cases.

    !pip -q install -U llama-index llama-index-llms-openai llama-index-embeddings-openai nest_asyncio

    import os
    import asyncio
    import nest_asyncio

    # Allow nested event loops so async code can run inside the notebook.
    nest_asyncio.apply()

    from getpass import getpass

    # Prompt for the key only if it is not already set in the environment.
    if not os.environ.get("OPENAI_API_KEY"):
        os.environ["OPENAI_API_KEY"] = getpass("Enter OPENAI_API_KEY: ")

We set up the environment and install all required dependencies for running an agentic AI workflow. We securely load the OpenAI API key at runtime, ensuring that credentials are never hardcoded. We also prepare the notebook to handle asynchronous execution smoothly.

    from llama_index.core import Document, VectorStoreIndex, Settings
    from llama_index.llms.openai import OpenAI
    from llama_index.embeddings.openai import OpenAIEmbedding

    # Global defaults used by the index, the evaluators, and the agent.
    Settings.llm = OpenAI(model="gpt-4o-mini", temperature=0.2)
    Settings.embed_model = OpenAIEmbedding(model="text-embedding-3-small")

    texts = [
        "Reliable RAG systems separate retrieval, synthesis, and verification. Common failures include hallucination and shallow retrieval.",
        "RAG evaluation focuses on faithfulness, answer relevancy, and retrieval quality.",
        "Tool-using agents require constrained tools, validation, and self-review loops.",
        "A robust workflow follows retrieve, answer, evaluate, and revise steps.",
    ]

    # Wrap each raw string in a Document and embed it into a vector index.
    docs = [Document(text=t) for t in texts]
    index = VectorStoreIndex.from_documents(docs)
    query_engine = index.as_query_engine(similarity_top_k=4)

We configure the OpenAI language model and embedding model and build a compact knowledge base for our agent. We transform raw text into indexed documents so that the agent can retrieve relevant evidence during reasoning.
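
To make the retrieval step more concrete, here is a minimal sketch of top-k similarity search. It is a toy, not LlamaIndex's implementation: it assumes bag-of-words count vectors in place of the OpenAI embeddings, and the helper names `tokenize`, `cosine`, and `top_k` are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: str, passages: list[str], k: int) -> list[tuple[float, str]]:
    """Return the k passages most similar to the query, best first."""
    q_vec = Counter(tokenize(query))
    scored = [(cosine(q_vec, Counter(tokenize(p))), p) for p in passages]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]


# Shortened copies of the tutorial's knowledge-base passages.
passages = [
    "Reliable RAG systems separate retrieval, synthesis, and verification.",
    "RAG evaluation focuses on faithfulness, answer relevancy, and retrieval quality.",
    "Tool-using agents require constrained tools, validation, and self-review loops.",
    "A robust workflow follows retrieve, answer, evaluate, and revise steps.",
]

for score, passage in top_k("How is RAG evaluation measured?", passages, k=2):
    print(f"{score:.2f}  {passage}")
```

Note that the tutorial's knowledge base holds four passages and the query engine uses `similarity_top_k=4`, so every passage is returned as evidence. A smaller `k` only starts to filter once the corpus grows.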

    from llama_index.core.evaluation import FaithfulnessEvaluator, RelevancyEvaluator

    faith_eval = FaithfulnessEvaluator(llm=Settings.llm)
    rel_eval = RelevancyEvaluator(llm=Settings.llm)

    def retrieve_evidence(q: str) -> str:
        """Retrieve numbered evidence snippets relevant to the question."""
        r = query_engine.query(q)
        out = []
        for i, n in enumerate(r.source_nodes or []):
            # Truncate each snippet to 300 characters to keep the tool output short.
            out.append(f"[{i+1}] {n.node.get_content()[:300]}")
        return "\n".join(out)

    def score_answer(q: str, a: str) -> str:
        """Score an answer for faithfulness and relevancy against retrieved context."""
        r = query_engine.query(q)
        ctx = [n.node.get_content() for n in r.source_nodes or []]
        faith = faith_eval.evaluate(query=q, response=a, contexts=ctx)
        rel = rel_eval.evaluate(query=q, response=a, contexts=ctx)
        return f"Faithfulness: {faith.score}\nRelevancy: {rel.score}"

We define the core tools used by the agent: evidence retrieval and answer evaluation. We implement automatic scoring for faithfulness and relevancy so the agent can judge the quality of its own responses.
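
The scoring tool returns its results as plain text, so anything that acts on the scores has to read them back out. The sketch below shows one way to do that, assuming the `Name: value` line format produced above. The helper names `parse_scores` and `needs_revision` and the 0.5 threshold are hypothetical choices for illustration, not part of LlamaIndex.

```python
import re


def parse_scores(report: str) -> dict[str, float]:
    """Parse 'Name: value' lines into a {name: float} mapping, skipping non-numeric values."""
    scores = {}
    for line in report.splitlines():
        match = re.fullmatch(r"\s*(\w+)\s*:\s*(-?\d+(?:\.\d+)?)\s*", line)
        if match:
            scores[match.group(1).lower()] = float(match.group(2))
    return scores


def needs_revision(report: str, threshold: float = 0.5) -> bool:
    """Return True when any expected score is missing or below the threshold."""
    scores = parse_scores(report)
    expected = ("faithfulness", "relevancy")
    # A missing or unparseable score counts as 0.0, so it triggers a revision.
    return any(scores.get(name, 0.0) < threshold for name in expected)


print(needs_revision("Faithfulness: 1.0\nRelevancy: 1.0"))   # False
print(needs_revision("Faithfulness: 0.0\nRelevancy: 1.0"))   # True
print(needs_revision("Faithfulness: None\nRelevancy: 1.0"))  # True
```

Treating a missing score as a failure is a deliberately conservative choice: if an evaluator returns nothing usable, the answer is revised rather than accepted.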

    from llama_index.core.agent.workflow import ReActAgent
    from llama_index.core.workflow import Context

    agent = ReActAgent(
        tools=[retrieve_evidence, score_answer],
        llm=Settings.llm,
        system_prompt="""
    Always retrieve evidence first.
    Produce a structured answer.
    Evaluate the answer and revise once if scores are low.
    """,
        verbose=True,
    )

    # The context carries the agent's state across runs.
    ctx = Context(agent)

We create the ReAct-based agent and define its system behavior, guiding how it retrieves evidence, generates answers, and revises results. We also initialize the execution context that maintains the agent's state across interactions. This step brings tools and reasoning together into a single agentic workflow.
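
In the agent above, the system prompt only guides the LLM, which still chooses when to call each tool. For comparison, the same retrieve, answer, evaluate, and revise-once policy can be written as an explicit control loop. The sketch below is not how `ReActAgent` works internally. The function `run_with_one_revision`, the stub callables, and the 0.5 threshold are all hypothetical stand-ins that avoid any API calls.

```python
from typing import Callable


def run_with_one_revision(
    question: str,
    retrieve: Callable[[str], str],
    answer: Callable[[str, str, str], str],
    evaluate: Callable[[str, str], float],
    threshold: float = 0.5,
) -> tuple[str, list[str]]:
    """Retrieve, answer, evaluate, and revise at most once; return the answer and a step trace."""
    trace = ["retrieve"]
    evidence = retrieve(question)

    trace.append("answer")
    draft = answer(question, evidence, "")

    trace.append("evaluate")
    score = evaluate(question, draft)

    if score < threshold:
        # Revise exactly once, passing the low score back as feedback.
        trace.append("revise")
        draft = answer(question, evidence, f"previous score was {score}")
    return draft, trace


# Hypothetical stubs standing in for the retrieval tool, the LLM, and the evaluator.
def fake_retrieve(q: str) -> str:
    return "[1] A robust workflow follows retrieve, answer, evaluate, and revise steps."


def fake_answer(q: str, evidence: str, feedback: str) -> str:
    return "grounded answer" if feedback else "vague answer"


def fake_evaluate(q: str, a: str) -> float:
    return 1.0 if a.startswith("grounded") else 0.0


final, steps = run_with_one_revision(
    "What steps does a robust workflow follow?", fake_retrieve, fake_answer, fake_evaluate
)
print(steps)  # ['retrieve', 'answer', 'evaluate', 'revise']
print(final)  # grounded answer
```

An explicit loop trades flexibility for predictability: the step order is guaranteed, whereas a prompted agent may skip or repeat steps.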

    async def run_brief(topic: str):
        q = f"Design a reliable RAG + tool-using agent workflow and evaluate it. Topic: {topic}"
        handler = agent.run(q, ctx=ctx)
        # Print streamed text deltas as they arrive; events without a delta print nothing.
        async for ev in handler.stream_events():
            print(getattr(ev, "delta", ""), end="")
        res = await handler
        return str(res)

    topic = "RAG agent reliability and evaluation"

    loop = asyncio.get_event_loop()
    result = loop.run_until_complete(run_brief(topic))

    print("\n\nFINAL OUTPUT\n")
    print(result)

We execute the full agent loop by passing a topic into the system and streaming the agent's reasoning and output. We allow the agent to complete its retrieval, generation, and evaluation cycle asynchronously.
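
The stream-then-await pattern used above can be tried without an API key. The sketch below uses only the standard library. `FakeHandler` and `consume` are hypothetical stand-ins and do not reproduce the LlamaIndex handler's interface. They only mimic its shape: an async stream of text deltas followed by a final result.

```python
import asyncio
from typing import AsyncIterator


class FakeHandler:
    """Hypothetical stand-in for a run handler: streams text deltas, then returns a final result."""

    def __init__(self, chunks: list[str]):
        self._chunks = chunks

    async def stream_events(self) -> AsyncIterator[str]:
        for chunk in self._chunks:
            await asyncio.sleep(0)  # hand control back to the event loop between chunks
            yield chunk

    async def result(self) -> str:
        return "".join(self._chunks)


async def consume(handler: FakeHandler) -> tuple[list[str], str]:
    """Print each delta as it arrives, then await the final result."""
    seen = []
    async for delta in handler.stream_events():
        seen.append(delta)
        print(delta, end="", flush=True)
    final = await handler.result()
    return seen, final


seen, final = asyncio.run(consume(FakeHandler(["Retrieve, ", "answer, ", "evaluate."])))
print()
print(final)  # Retrieve, answer, evaluate.
```

`asyncio.run` raises a `RuntimeError` when an event loop is already running, as it is in a notebook. There, use `await consume(...)` directly, or apply `nest_asyncio` as the tutorial does.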

In conclusion, we showcased how an agent can retrieve supporting evidence, generate a structured response, and assess its own faithfulness and relevancy before finalizing an answer. We kept the design modular and transparent, making it easy to extend the workflow with additional tools, evaluators, or domain-specific knowledge sources. This approach illustrates how we can use agentic AI with LlamaIndex and OpenAI models to build more capable systems that are also more reliable and self-aware in their reasoning and responses.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.