Evaluating OCR systems that convert PDFs or document images into Markdown is far more complex than it seems. Unlike plain-text OCR, OCR-to-Markdown requires models to recover content, layout, reading order, and representation choices simultaneously. Today’s benchmarks attempt to score this with a mix of string matching, heuristic alignment, and format-specific rules, but in practice these approaches routinely misclassify correct outputs as failures.

This post outlines why OCR-to-Markdown evaluation is inherently underspecified, examines common evaluation techniques and their failure modes, highlights concrete issues observed in two widely used benchmarks, and explains why LLM-as-judge is currently the most practical way to evaluate these systems, despite its imperfections.

Why OCR-to-Markdown Is Hard to Evaluate

At its core, OCR-to-Markdown does not have a single correct output.

Multiple outputs can be equally valid:

- Multi-column layouts can be linearized in different reading orders.

- Equations can be represented using LaTeX, Unicode, HTML, or hybrids.

- Headers, footers, watermarks, and marginal text may or may not be considered “content” depending on task intent.

- Spacing, punctuation, and Unicode normalization often differ without affecting meaning.

From a human or downstream-system perspective, these outputs are equivalent. From a benchmark’s perspective, they often are not.

Common Evaluation Techniques and Their Limitations

1. String-Based Metrics (Edit Distance, Exact Match)

Most OCR-to-Markdown benchmarks rely on normalized string comparison or edit distance.

Limitations

- Markdown is treated as a flat character sequence, ignoring structure.

- Minor formatting differences produce large penalties.

- Structurally incorrect outputs can score well if the text overlaps.

- Scores correlate poorly with human judgment.

These metrics reward formatting compliance rather than correctness.
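The effect can be reproduced with a few lines of Python. The sketch below is an illustration, not any benchmark's scoring code: the `similarity` function and the three sample strings are assumptions made up for this example. It compares an output that is semantically equivalent to the reference but formatted differently with an output that copies the reference formatting but gets both values wrong.

```python
def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1]


def similarity(pred: str, ref: str) -> float:
    """Normalized similarity: 1.0 for identical strings, lower as edits grow."""
    longest = max(len(pred), len(ref))
    return 1.0 if longest == 0 else 1.0 - levenshtein(pred, ref) / longest


reference = "# Results\n\n- Area: 5 km$^2$\n- Mass: 3 g"
# Same content, different but valid Markdown and notation choices.
equivalent = "Results\n=======\n\n* Area: 5 km²\n* Mass: 3 g"
# Same markup as the reference, but both numeric values are wrong.
wrong = "# Results\n\n- Area: 8 km$^2$\n- Mass: 9 g"

score_equivalent = similarity(equivalent, reference)
score_wrong = similarity(wrong, reference)
print(f"equivalent output: {score_equivalent:.2f}")
print(f"wrong output:      {score_wrong:.2f}")
```

Under this metric the factually wrong output scores higher than the equivalent one, because only two characters differ from the reference while the equivalent output changes heading style, bullet markers, and the superscript notation.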

2. Order-Sensitive Block Matching

Some benchmarks segment documents into blocks and score ordering and proximity.

Limitations

- Valid alternative reading orders (e.g., multi-column documents) are penalized.

- Small footer or marginal text can break strict ordering constraints.

- Matching heuristics degrade rapidly as layout complexity increases.

Correct content is often marked wrong due to ordering assumptions.
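A small sketch makes the ordering problem concrete. It does not reproduce any benchmark's matching algorithm: the two scoring functions and the block labels are assumptions chosen for illustration, with Python's `difflib.SequenceMatcher` standing in for an order-sensitive aligner.

```python
from difflib import SequenceMatcher


def order_sensitive_score(pred_blocks: list[str], ref_blocks: list[str]) -> float:
    """Similarity that only credits blocks appearing in the same relative order."""
    return SequenceMatcher(None, pred_blocks, ref_blocks, autojunk=False).ratio()


def order_agnostic_recall(pred_blocks: list[str], ref_blocks: list[str]) -> float:
    """Fraction of reference blocks found anywhere in the prediction."""
    predicted = set(pred_blocks)
    return sum(block in predicted for block in ref_blocks) / len(ref_blocks)


# A two-column page: L1, L2 are the left column, R1, R2 the right column.
ref_blocks = ["Title", "L1", "L2", "R1", "R2"]   # annotated column by column
pred_blocks = ["Title", "L1", "R1", "L2", "R2"]  # model read row by row

sensitive = order_sensitive_score(pred_blocks, ref_blocks)
agnostic = order_agnostic_recall(pred_blocks, ref_blocks)
print(f"order-sensitive: {sensitive:.2f}")
print(f"order-agnostic:  {agnostic:.2f}")
```

Every block is present in the prediction, so the order-agnostic recall is perfect, yet the order-sensitive score drops because the two linearizations interleave the columns differently.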

3. Equation Matching via LaTeX Normalization

Math-heavy benchmarks typically expect equations to be rendered as full LaTeX.

Limitations

- Unicode or partially rendered equations are penalized.

- Equivalent LaTeX expressions using different macros fail to match.

- Mixed LaTeX/Markdown/HTML representations are not handled.

- Rendering-correct equations still fail string-level checks.

This conflates representation choice with mathematical correctness.
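To see how fragile string-level equation checks are, consider a toy canonicalizer. This is a sketch under stated assumptions: `canonicalize` and its rule set are hypothetical, written only for this illustration, and the two chemistry strings are a mixed Markdown/LaTeX rendering and a fully spaced-out LaTeX rendering of the same phrase.

```python
import re


def canonicalize(expr: str) -> str:
    """Toy canonical form: drop math delimiters, font macros, braces, and spaces."""
    s = expr.replace("$", "")
    s = re.sub(r"\\(?:mathrm|mathbf|text)\b", "", s)  # font/text macros
    s = s.replace("\\ ", "")                           # explicit LaTeX spaces
    s = re.sub(r"[{}\s]", "", s)                       # braces and whitespace
    return s


mixed = "5 g silica + 3 g Al$_2$O$_3$"
full_latex = r"$ 5g \mathrm{\ s i l i c a}+3g \mathrm{\ A l}_{2} \mathrm{O_{3}} $"

print(mixed == full_latex)                              # raw string comparison
print(canonicalize(mixed) == canonicalize(full_latex))  # after hand-written rules

# The same rules do nothing for the next equivalent pair.
print(canonicalize(r"$\frac{1}{2}$") == canonicalize("1/2"))
```

The hand-written rules reconcile the first pair but not the fraction, which would need yet another rule. Each new notation difference requires its own special case.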

4. Format-Specific Assumptions

Benchmarks implicitly encode a preferred output style.

Limitations

- HTML tags (e.g., `<sub>`) cause matching failures.

- Unicode symbols (e.g., km²) are penalized against LaTeX equivalents.

- Spacing and punctuation inconsistencies in ground truth amplify errors.

Models aligned to benchmark formatting outperform more general OCR systems.
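As a minimal illustration, the snippet below writes the same unit three ways and checks what standard Unicode normalization can and cannot reconcile. The sample strings are made up for this example.

```python
import unicodedata

# Three common ways to write "square kilometres" in OCR output.
variants = {
    "unicode": "12 km²",
    "latex": "12 km$^2$",
    "html": "12 km<sup>2</sup>",
}

# Exact comparison treats every pair as a mismatch.
exact_match = len(set(variants.values())) == 1

# NFKC folds the superscript digit into a plain "2", which still differs
# from the LaTeX and HTML forms and now collides with the string "12 km2".
nfkc = {name: unicodedata.normalize("NFKC", text) for name, text in variants.items()}

print(exact_match)
print(nfkc)
```

Unicode normalization alone does not bridge the three styles, so whichever style the ground truth happens to use becomes the only one that scores well.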

Issues Observed in Existing Benchmarks

Benchmark A: olmOCRBench

Manual inspection reveals that several subsets embed implicit content omission rules:

- Headers, footers, and watermarks that are visibly present in documents are explicitly marked as absent in ground truth.

- Models trained to extract all visible text are penalized for being correct.

- These subsets effectively evaluate selective suppression, not OCR quality.

Additionally:

- Math-heavy subsets fail when equations are not fully normalized LaTeX.

- Correct predictions are penalized due to representation differences.

As a result, scores strongly depend on whether a model’s output philosophy matches the benchmark’s hidden assumptions.

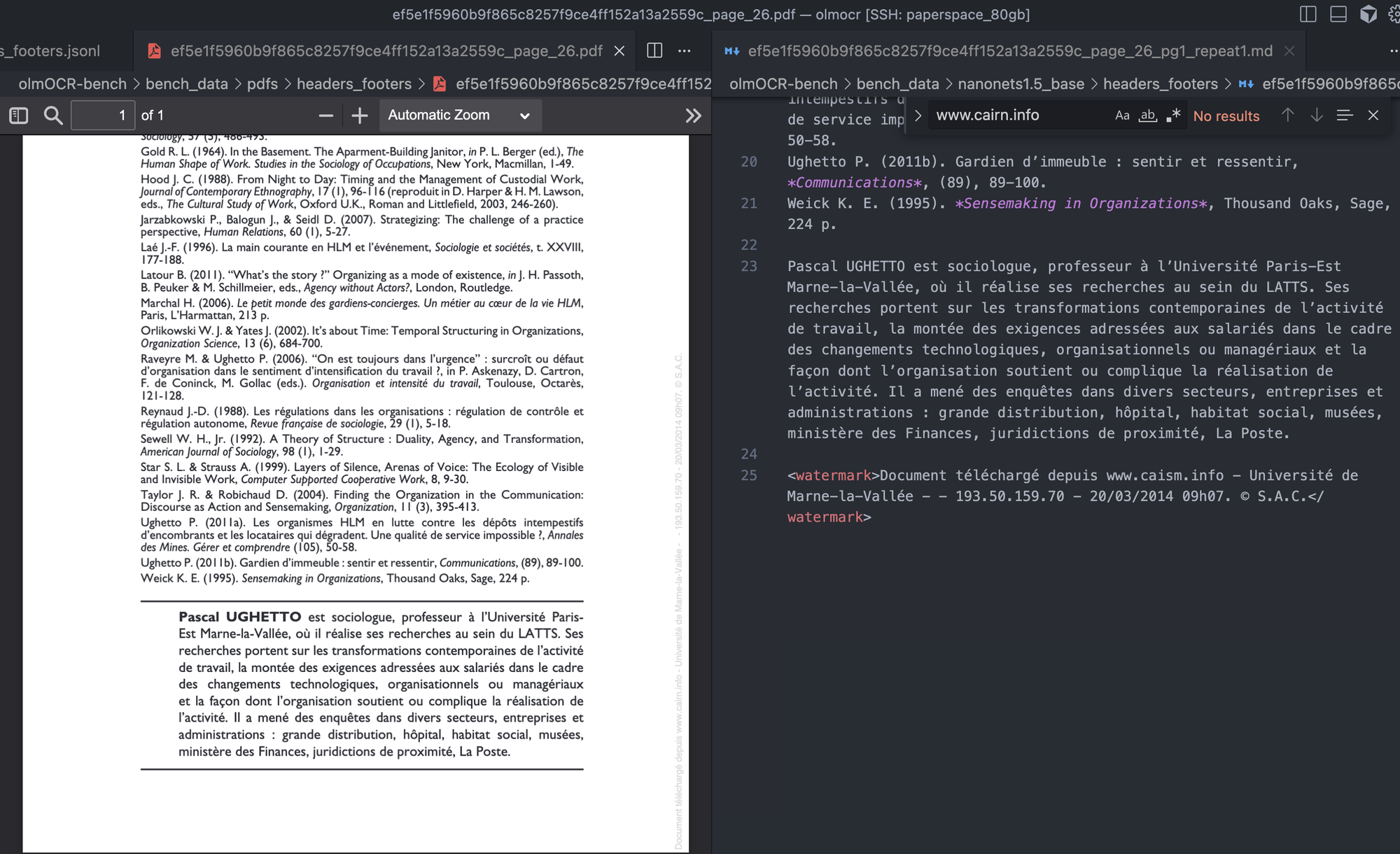

Example 1

For the image above, Nanonets-OCR2 correctly predicts the watermark on the right side of the image, but the ground-truth annotation penalizes the model for predicting it.

{
  "pdf": "headers_footers/ef5e1f5960b9f865c8257f9ce4ff152a13a2559c_page_26.pdf",
  "page": 1,
  "id": "ef5e1f5960b9f865c8257f9ce4ff152a13a2559c_page_26.pdf_manual_01",
  "type": "absent",
  "text": "Document téléchargé depuis www.cairn.info - Université de Marne-la-Vallée - - 193.50.159.70 - 20/03/2014 09h07. © S.A.C.",
  "case_sensitive": false,
  "max_diffs": 3,
  "checked": "verified",
  "first_n": null,
  "last_n": null,
  "url": "https://hal-enpc.archives-ouvertes.fr/hal-01183663/file/14-RAC-RecitsDesTempsDHier.pdf"
}

The type absent means that the text must not be present in the model’s prediction.
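A simplified sketch of such a check shows why a model that transcribes everything visible fails it. This is not the benchmark's actual implementation: `passes_absent_test` is a hypothetical helper, it uses exact substring search only, and the record and outputs below are invented for illustration.

```python
def passes_absent_test(test: dict, prediction: str) -> bool:
    """Return True when the annotated text does NOT occur in the prediction.

    Simplification: exact substring search only. Fuzzy-matching and
    position-related fields are ignored in this sketch.
    """
    needle, haystack = test["text"], prediction
    if not test.get("case_sensitive", True):
        needle, haystack = needle.lower(), haystack.lower()
    return needle not in haystack


# Hypothetical record and model outputs, made up for illustration.
test = {"type": "absent", "text": "Downloaded from example.org", "case_sensitive": False}
body_only = "# Chapter 1\n\nBody text."
full_page = "# Chapter 1\n\nBody text.\n\ndownloaded from EXAMPLE.ORG"

print(passes_absent_test(test, body_only))  # -> True: the watermark was dropped
print(passes_absent_test(test, full_page))  # -> False: the watermark was transcribed
```

The output that reproduces more of the visible page is the one that fails, so the test rewards suppression rather than recognition accuracy.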

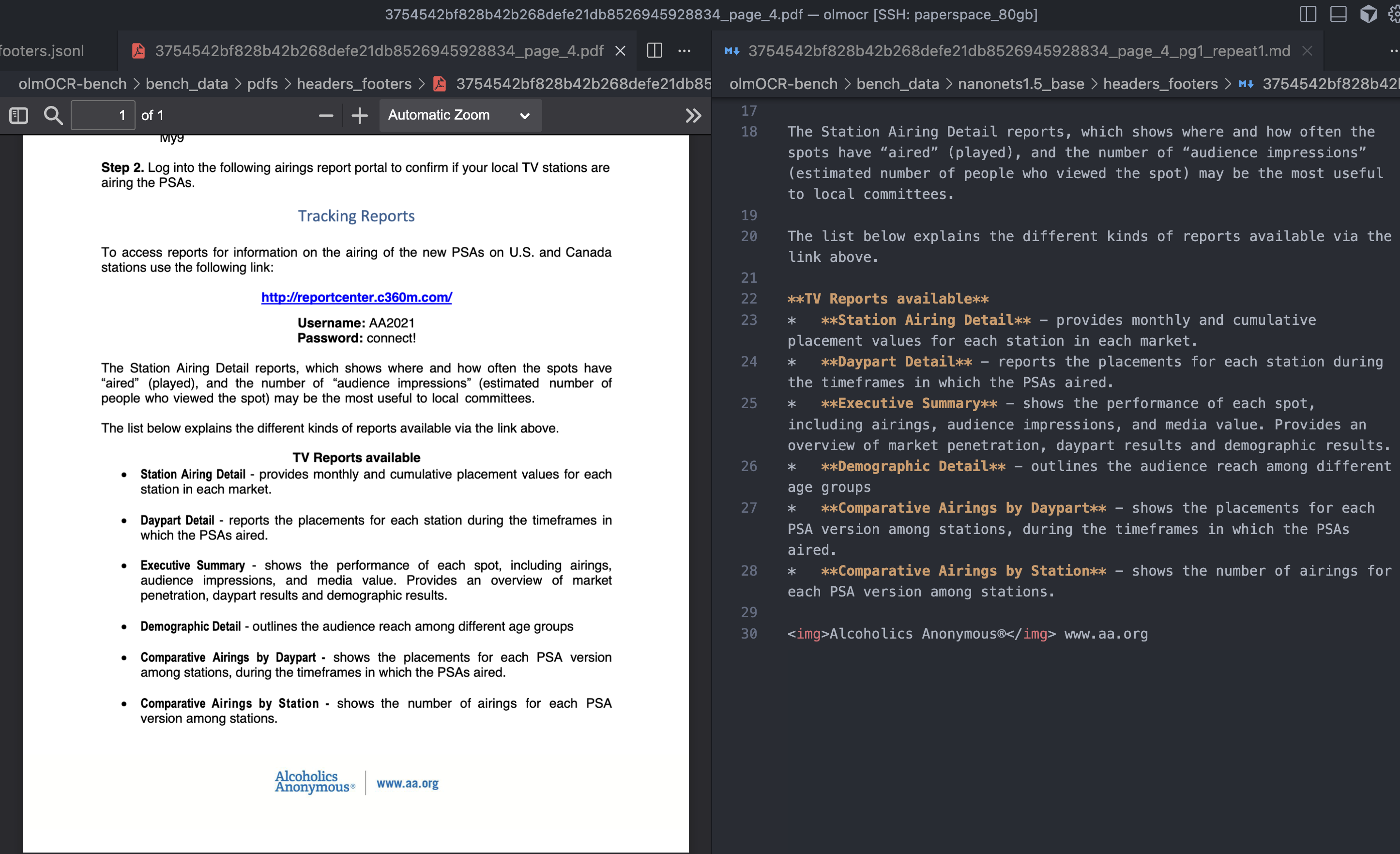

Example 2

The benchmark also does not consider text that is present in the document footer.

For example, in this document the ground truth states that Alcoholics Anonymous® and www.aa.org should not be present, which is incorrect.

{
  "pdf": "headers_footers/3754542bf828b42b268defe21db8526945928834_page_4.pdf",
  "page": 1,
  "id": "3754542bf828b42b268defe21db8526945928834_page_4_header_00",
  "type": "absent",
  "max_diffs": 0,
  "checked": "verified",
  "url": "https://www.aa.org/sites/default/files/literature/PI%20Info%20Packet%20EN.pdf",
  "text": "Alcoholics Anonymous®",
  "case_sensitive": false,
  "first_n": null,
  "last_n": null
}

{
  "pdf": "headers_footers/3754542bf828b42b268defe21db8526945928834_page_4.pdf",
  "page": 1,
  "id": "3754542bf828b42b268defe21db8526945928834_page_4_header_01",
  "type": "absent",
  "max_diffs": 0,
  "checked": "verified",
  "url": "https://www.aa.org/sites/default/files/literature/PI%20Info%20Packet%20EN.pdf",
  "text": "www.aa.org",
  "case_sensitive": false,
  "first_n": null,
  "last_n": null
}

Benchmark B: OmniDocBench

OmniDocBench exhibits similar issues, but more broadly:

- Equation evaluation relies on strict LaTeX string equivalence.

- Semantically identical equations fail due to macro, spacing, or symbol differences.

- Numerous ground-truth annotation errors were observed (missing tokens, malformed math, incorrect spacing).

- Unicode normalization and spacing differences systematically reduce scores.

- Prediction selection heuristics can fail even when the correct answer is fully present.

In many cases, low scores reflect benchmark artifacts, not model errors.

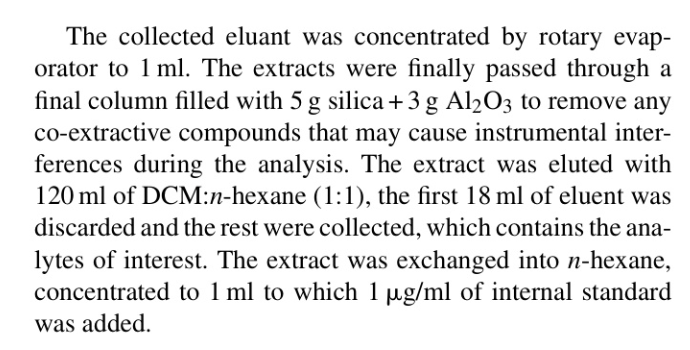

Example 1

In the example above, Nanonets-OCR2-3B predicts 5 g silica + 3 g Al$_2$O$_3$, but the ground truth expects $ 5g \mathrm{\ s i l i c a}+3g \mathrm{\ A l}_{2} \mathrm{O_{3}} $. This flags the model prediction as incorrect, even though both are correct.

The full ground truth, prediction, and test case are shared below:

'pred': 'The collected eluant was concentrated by rotary evaporator to 1 ml. The extracts were finally passed through a final column filled with 5 g silica + 3 g Al$_2$O$_3$ to remove any co-extractive compounds which may cause instrumental interferences durin the analysis. The extract was eluted with 120 ml of DCM:n-hexane (1:1), the first 18 ml of eluent was discarded and the remaining were collected, which contains the analytes of interest. The extract was exchanged into n-hexane, concentrated to 1 ml to which 1 μg/ml of internal standard was added.'

'gt': 'The collected eluant was concentrated by rotary evaporator to 1 ml .The extracts were finally passed through a final column filled with $ 5g \mathrm{\ s i l i c a}+3g \mathrm{\ A l}_{2} \mathrm{O_{3}} $ to remove any co-extractive compounds which may cause instrumental interferences during the analysis. The extract was eluted with 120 ml of DCM:n-hexane (1:1), the first 18 ml of eluent was discarded and the remaining were collected, which contains the analytes of interest. The extract was exchanged into n - hexane, concentrated to 1 ml to which $ \mu\mathrm{g / ml} $ of internal standard was added.'

Example 2

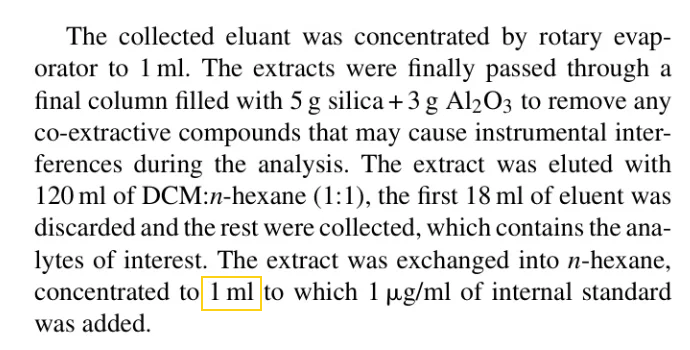

We found significantly more incorrect annotations in OmniDocBench.

In the ground-truth annotation, the 1 is missing from 1 μg/ml: the annotation reads $ \mu\mathrm{g / ml} $.

'text': 'The collected eluant was concentrated by rotary evaporator to 1 ml .The extracts were finally passed through a final column filled with $ 5g \mathrm{\ s i l i c a}+3g \mathrm{\ A l}_{2} \mathrm{O_{3}} $ to remove any co-extractive compounds which may cause instrumental interferences during the analysis. The extract was eluted with 120 ml of DCM:n-hexane (1:1), the first 18 ml of eluent was discarded and the remaining were collected, which contains the analytes of interest. The extract was exchanged into n - hexane, concentrated to 1 ml to which $ \mu\mathrm{g / ml} $ of internal standard was added.'

Why LLM-as-Judge Is the Least-Bad Option Today

Given these limitations, LLM-as-judge is currently the most practical way to evaluate OCR-to-Markdown systems.

This is not because LLM judges are perfect, but because the problem is fundamentally semantic.

What LLM-as-Judge Handles Well

- Semantic Equivalence Across Representations

  LLMs can recognize that:

  - LaTeX, Unicode, and HTML equations can be equivalent

  - Macro-level differences (`A^T` vs `\mathbf{A}^T`) do not change meaning

  - Spacing and normalization differences are irrelevant

- Flexible Reading Order Reasoning

  LLMs can assess whether content is complete even when:

  - Sections are reordered

  - Multi-column layouts are linearized differently

- Context-Aware Content Inclusion

  LLMs can reason about whether:

  - Footers, headers, or watermarks should reasonably be included

  - Text inside logos or figures counts as content

- Tolerance to Annotation Noise

  When ground truth is incomplete or incorrect, LLMs can still judge correctness relative to the document, rather than blindly enforcing flawed annotations.
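One way to turn these capabilities into explicit judge instructions is sketched below. The rubric wording, the `build_judge_prompt` name, and the CORRECT/INCORRECT verdict format are illustrative assumptions, not taken from any benchmark or model provider. No model is called here; the function only assembles text.

```python
RUBRIC = (
    "Treat LaTeX, Unicode, and HTML renderings of the same expression as equivalent.",
    "Ignore differences in spacing, punctuation style, and Unicode normalization.",
    "Accept any reading order that keeps each logical block intact.",
    "Do not penalize visible headers, footers, or watermarks unless the task excludes them.",
    "If the reference looks wrong or incomplete, say so instead of enforcing it.",
)


def build_judge_prompt(reference: str, prediction: str) -> str:
    """Assemble a single text prompt asking a judge model for a verdict."""
    rules = "\n".join(f"{i}. {rule}" for i, rule in enumerate(RUBRIC, start=1))
    return (
        "You are grading OCR-to-Markdown output.\n"
        f"Rules:\n{rules}\n\n"
        f"Reference annotation:\n{reference}\n\n"
        f"Model prediction:\n{prediction}\n\n"
        "Answer with CORRECT or INCORRECT on the first line, "
        "then one sentence of justification."
    )


prompt = build_judge_prompt(
    reference=r"$ 5g \mathrm{\ s i l i c a}+3g \mathrm{\ A l}_{2} \mathrm{O_{3}} $",
    prediction="5 g silica + 3 g Al$_2$O$_3$",
)
print(prompt)
```

A judge with image input could additionally be given the page itself, which is what allows it to assess correctness relative to the document rather than the annotation alone. That part is left out of this text-only sketch.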

Why Metric Engineering Doesn’t Scale

Many benchmark failures are addressed by:

- Adding normalization rules

- Expanding equivalence classes

- Introducing heuristic margins

These fixes do not generalize. Every new document type (scientific papers, scanned books, multilingual PDFs, forms) introduces new edge cases. LLMs generalize across these cases without task-specific rule engineering.

Acknowledged Limitations of LLM-as-Judge

LLM-based evaluation has real drawbacks:

- Non-determinism

- Sensitivity to prompt design

- Higher cost and latency

- Reduced reproducibility compared to static scripts

However, these are operational limitations, not conceptual ones. In contrast, string- and rule-based metrics are conceptually misaligned with the task itself.
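Operational limitations can at least be mitigated operationally. As one hedged example, non-determinism can be dampened by querying the judge several times and taking the majority verdict, at the price of higher cost. In the sketch below, `parse_verdict`, `majority_verdict`, and the canned replies are all hypothetical; `fake_judge` stands in for a real model call.

```python
from collections import Counter
from itertools import cycle
from typing import Callable


def parse_verdict(reply: str) -> str:
    """Read the first word of a reply as 'correct', 'incorrect', or 'unparseable'."""
    words = reply.strip().split()
    first = words[0].strip(".:,!").lower() if words else ""
    return first if first in {"correct", "incorrect"} else "unparseable"


def majority_verdict(judge: Callable[[str], str], prompt: str, n_votes: int = 3) -> str:
    """Query the judge n_votes times and return the most common parsed verdict."""
    votes = Counter(parse_verdict(judge(prompt)) for _ in range(n_votes))
    return votes.most_common(1)[0][0]


# Stand-in for a real model call: replays canned replies to mimic a noisy judge.
canned = cycle(["CORRECT\nSame compound.", "Incorrect: notation differs.", "correct."])


def fake_judge(_prompt: str) -> str:
    return next(canned)


verdict = majority_verdict(fake_judge, "prompt text goes here")
print(verdict)
```

An odd `n_votes` avoids most ties. Logging the raw replies alongside the verdict helps with reproducibility, since a rerun can then be compared against what the judge actually said.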

Final Takeaway

OCR-to-Markdown evaluation is underspecified by nature. Existing benchmarks conflate formatting, representation choices, and semantic correctness, often penalizing models for being correct in ways the benchmark did not anticipate.

Until benchmarks explicitly embrace semantic equivalence, LLM-as-judge remains the closest approximation to human judgment and the most reliable evaluation signal available today. Benchmark scores should therefore be treated as partial indicators, not definitive measures of OCR quality.