Alibaba Tongyi Lab has launched MAI-UI, a family of foundation GUI agents. It natively integrates MCP tool use, agent-user interaction, device-cloud collaboration, and online RL. It establishes state-of-the-art results in general GUI grounding and mobile GUI navigation, surpassing Gemini-2.5-Pro, Seed1.8, and UI-Tars-2 on AndroidWorld. The system targets three specific gaps that early GUI agents often ignore: native agent-user interaction, MCP tool integration, and a device-cloud collaboration architecture that keeps privacy-sensitive work on device while still using large cloud models when needed.

What’s MAI-UI?

MAI-UI is a family of multimodal GUI agents built on Qwen3-VL, with model sizes 2B, 8B, 32B and 235B-A22B. These models take natural language instructions and rendered UI screenshots as input, then output structured actions for a live Android environment.

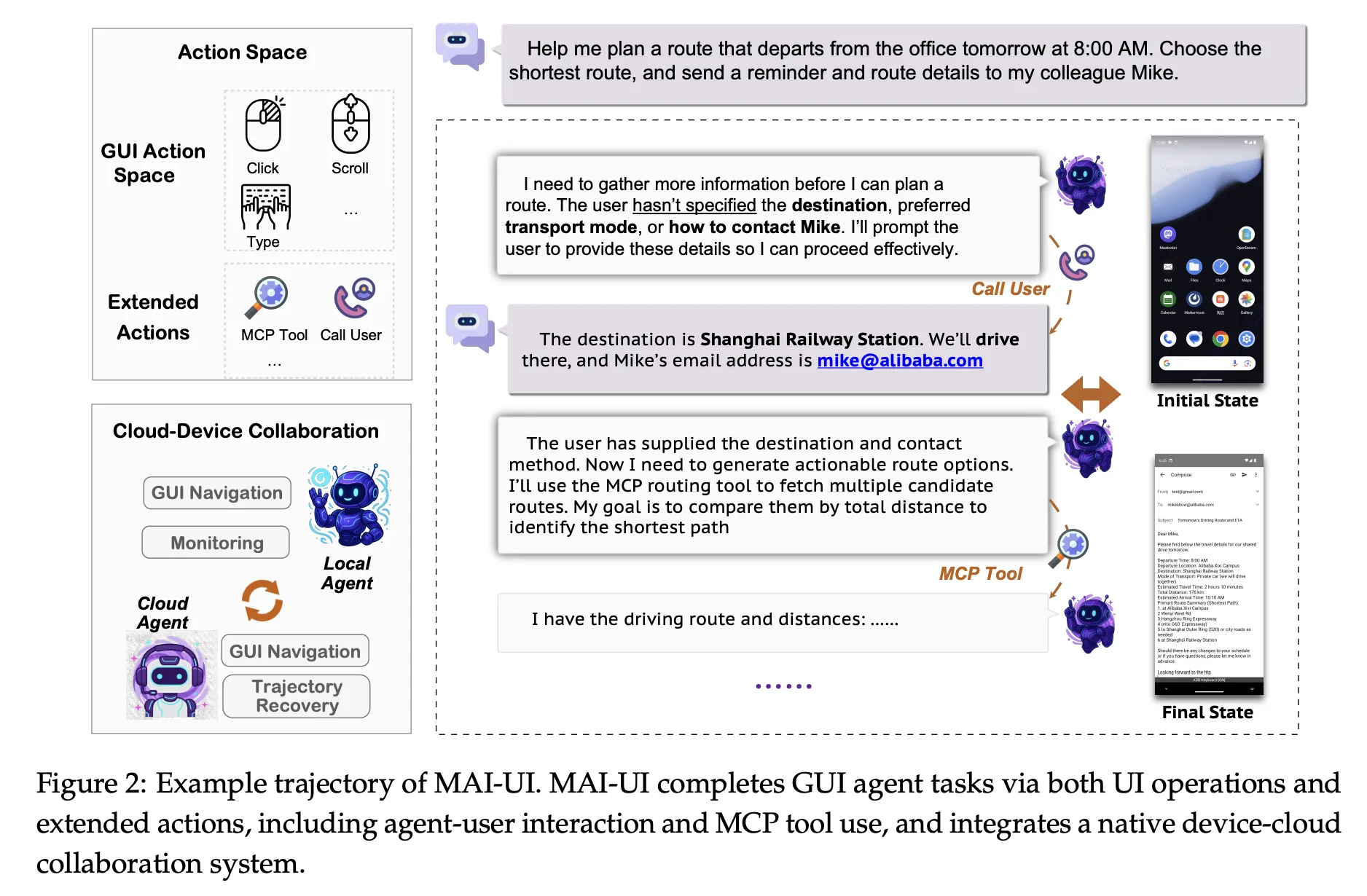

The action space covers standard operations such as clicking elements, swiping, entering text and pressing system buttons. On top of that, MAI-UI introduces explicit actions for answering user questions, asking the user for clarification when the goal is ambiguous, and invoking external tools through MCP tool calls. This lets the agent mix GUI steps, direct language responses and API-level operations in a single trajectory.
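To make the idea of a mixed action space concrete, here is a minimal sketch of how such structured outputs could be validated and dispatched. The JSON format, the action names (`click`, `ask_user`, `mcp_call`, ...) and their required fields are all hypothetical choices for illustration, not MAI-UI's published schema.

```python
import json
from dataclasses import dataclass, field
from typing import Any

# Hypothetical action vocabulary for illustration only; MAI-UI's real schema may differ.
REQUIRED_FIELDS: dict[str, set[str]] = {
    "click": {"x", "y"},
    "swipe": {"x", "y", "direction"},
    "type": {"text"},
    "system_button": {"button"},        # e.g. "back", "home"
    "answer": {"text"},                 # reply directly to the user
    "ask_user": {"text"},               # request clarification
    "mcp_call": {"tool", "arguments"},  # invoke an external MCP tool
}
GUI_ACTIONS = {"click", "swipe", "type", "system_button"}
USER_ACTIONS = {"answer", "ask_user"}


@dataclass
class Action:
    kind: str
    args: dict[str, Any] = field(default_factory=dict)

    @property
    def channel(self) -> str:
        """Which part of the system executes this action."""
        if self.kind in GUI_ACTIONS:
            return "gui"
        if self.kind in USER_ACTIONS:
            return "user"
        return "tool"


def parse_action(raw: str) -> Action:
    """Validate one JSON action emitted by the model."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("action must be a JSON object")
    kind = data.pop("action", None)
    if kind not in REQUIRED_FIELDS:
        raise ValueError(f"unknown action: {kind!r}")
    missing = REQUIRED_FIELDS[kind] - set(data)
    if missing:
        raise ValueError(f"{kind} is missing fields: {sorted(missing)}")
    return Action(kind, data)


trajectory = [
    '{"action": "click", "x": 540, "y": 1210}',
    '{"action": "ask_user", "text": "Which card should I use?"}',
    '{"action": "mcp_call", "tool": "calendar.create_event", "arguments": {"title": "Dinner"}}',
]
print([parse_action(step).channel for step in trajectory])  # ['gui', 'user', 'tool']
```

Keeping GUI, user-facing and tool actions in one vocabulary means a single decoder output can be routed to the emulator, the chat channel or an MCP client without a separate planner.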

From a modeling perspective, MAI-UI unifies three components: a self-evolving navigation data pipeline that includes user interaction and MCP cases, an online RL framework that scales to hundreds of parallel Android instances and long contexts, and a native device-cloud collaboration system that routes execution based on task state and privacy constraints.
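The article does not spell out the routing rules, so the following is only a sketch of what "routing on task state and privacy constraints" could look like. The keyword list, the `TaskState` fields and the failure threshold are all hypothetical. A production system would more likely use a learned classifier than string matching.

```python
from dataclasses import dataclass

# Hypothetical privacy markers; a real system would use a classifier or policy, not keywords.
SENSITIVE_MARKERS = ("password", "bank card", "verification code", "id number")


@dataclass
class TaskState:
    instruction: str
    screen_text: str            # e.g. OCR or accessibility-tree text of the current screen
    consecutive_failures: int   # steps in a row the on-device model judged unsuccessful


def is_privacy_sensitive(state: TaskState) -> bool:
    text = f"{state.instruction} {state.screen_text}".lower()
    return any(marker in text for marker in SENSITIVE_MARKERS)


def route_next_step(state: TaskState, max_local_failures: int = 2) -> str:
    """Return "device" or "cloud" for the next step (illustrative policy)."""
    if is_privacy_sensitive(state):
        return "device"  # privacy constraint wins: never send this screen to the cloud
    if state.consecutive_failures >= max_local_failures:
        return "cloud"   # on-device model appears stuck, escalate to a larger model
    return "device"      # default: cheap, private, low-latency local execution


print(route_next_step(TaskState("Book a table for two", "Restaurant list", 2)))  # cloud
print(route_next_step(TaskState("Log in to my bank", "Enter password", 5)))       # device
```

The ordering of the checks is the design choice here: privacy is evaluated first so that escalation can never leak a sensitive screen, even when the small model is struggling.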

GUI grounding with instruction reasoning

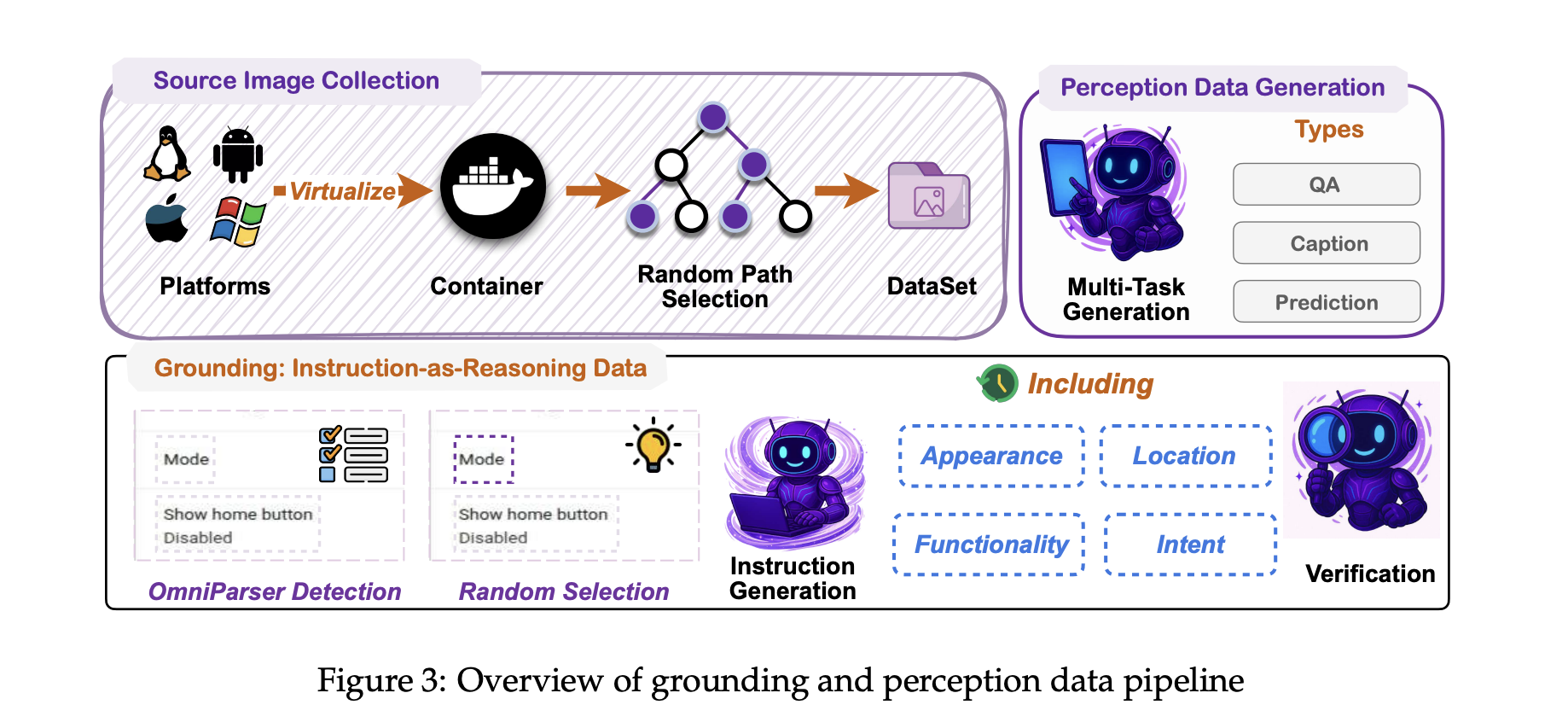

A core requirement for any GUI agent is grounding: mapping free-form language like 'open monthly billing settings' to the correct on-screen control. MAI-UI adopts a UI grounding strategy inspired by the earlier UI-Ins work on multi-perspective instruction descriptions.

For each UI element, the training pipeline does not rely on a single caption. Instead, it generates multiple perspectives on the same element, for example appearance, function, spatial location and user intent. These instructions are treated as reasoning evidence for the model, which must select a point inside the correct bounding box. This reduces the impact of flawed or underspecified instructions, an issue that UI-Ins quantified in existing datasets.
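One way to picture the multi-perspective data is a single annotated element expanded into several training samples that all share the same target box. The sketch below assumes the four perspectives named above. The field names, the prompt wording and the "single perspective plus one combined sample" layout are illustrative assumptions, not the UI-Ins or MAI-UI recipe.

```python
from dataclasses import dataclass

# Perspective names follow the examples in the text; the prompt wording is an assumption.
PERSPECTIVES = ("appearance", "function", "spatial", "intent")


@dataclass
class ElementAnnotation:
    bbox: tuple[int, int, int, int]  # (x1, y1, x2, y2) in screenshot pixels
    descriptions: dict[str, str]     # perspective name -> instruction text


def combined_instruction(ann: ElementAnnotation) -> str:
    """Present every available perspective as evidence for one target."""
    lines = [f"- {p}: {ann.descriptions[p]}" for p in PERSPECTIVES if p in ann.descriptions]
    return "Target element descriptions:\n" + "\n".join(lines) + "\nAnswer with a point (x, y) inside it."


def expand_to_samples(ann: ElementAnnotation) -> list[dict]:
    """One sample per single perspective, plus one sample that combines them all."""
    samples = [
        {"instruction": ann.descriptions[p], "target_bbox": ann.bbox}
        for p in PERSPECTIVES
        if p in ann.descriptions
    ]
    samples.append({"instruction": combined_instruction(ann), "target_bbox": ann.bbox})
    return samples


billing = ElementAnnotation(
    bbox=(40, 880, 1040, 980),
    descriptions={
        "appearance": "Row with a receipt icon and the label 'Monthly billing'",
        "function": "Opens the monthly billing settings page",
        "spatial": "Third row in the Account section, below 'Payment methods'",
        "intent": "Where I go to change how I am billed each month",
    },
)
print(len(expand_to_samples(billing)))  # 5
```

If one description is wrong (say, the spatial hint points at the wrong row), the other perspectives in the combined sample still identify the same box, which is the robustness argument made above.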

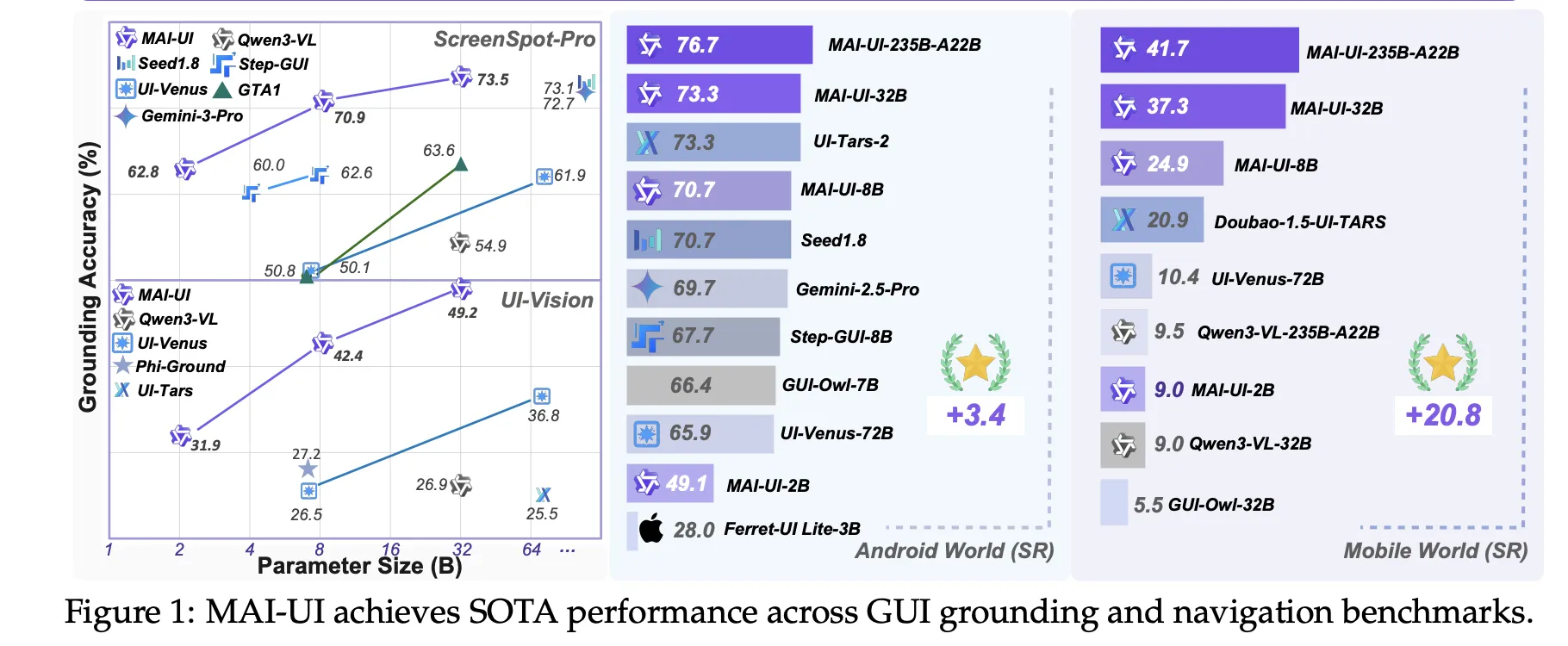

Ground-truth boxes are collected from a mix of curated GUI datasets and large-scale exploration of virtualized operating systems in containerized environments. Accessibility trees or OCR-based parsers are used to align textual metadata with pixel locations. The training objective combines supervised fine-tuning with a simple reinforcement signal that rewards correct point-in-box predictions and valid output format.
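A rule-based reward of the kind described, crediting valid format and a point that lands inside the ground-truth box, fits in a few lines. This is a sketch under stated assumptions: the `(x, y)` output format and the specific reward values (0.1 for valid format, 1.0 for a hit) are hypothetical, not values reported for MAI-UI.

```python
import re

# Assumed output format "(x, y)"; MAI-UI's actual format and reward values may differ.
POINT_RE = re.compile(r"\(\s*(-?\d+(?:\.\d+)?)\s*,\s*(-?\d+(?:\.\d+)?)\s*\)")


def grounding_reward(output: str, bbox: tuple[float, float, float, float],
                     format_reward: float = 0.1, hit_reward: float = 1.0) -> float:
    """0 for unparsable output, a small bonus for valid format,
    full reward when the predicted point lies inside the ground-truth box."""
    match = POINT_RE.fullmatch(output.strip())
    if match is None:
        return 0.0
    x, y = float(match.group(1)), float(match.group(2))
    x1, y1, x2, y2 = bbox
    inside = x1 <= x <= x2 and y1 <= y <= y2
    return hit_reward if inside else format_reward


box = (40, 880, 1040, 980)
print(grounding_reward("(520, 930)", box))     # 1.0  inside the box
print(grounding_reward("(520, 300)", box))     # 0.1  valid format, wrong location
print(grounding_reward("click the row", box))  # 0.0  invalid format
```

Rewarding any point inside the box, rather than distance to the box center, matches the point-in-box criterion that grounding benchmarks typically score.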

On public GUI grounding benchmarks, the resulting MAI-UI models reach 73.5% accuracy on ScreenSpot-Pro with adaptive zoom-in, 91.3% on MMBench GUI L2, 70.9% on OSWorld-G and 49.2% on UI-Vision. These numbers surpass Gemini 3 Pro and Seed1.8 on ScreenSpot-Pro, and considerably outperform earlier open models on UI-Vision.

Self-evolving navigation data and MobileWorld

Navigation is harder than grounding because the agent must maintain context across many steps, possibly across applications, while interacting with the user and with tools. To build robust navigation behavior, Tongyi Lab uses a self-evolving data pipeline.

Seed tasks come from app manuals, hand-designed scenarios and filtered public data. Parameters such as dates, limits and filter values are perturbed to expand coverage, and object-level substitutions are applied while staying within the same use case. Multiple agents, together with human annotators, execute these tasks in Android environments to produce trajectories. A judge model then evaluates these trajectories, keeps the longest correct prefixes and filters out low-quality segments. The next supervised training round uses the union of new human traces and high-quality model rollouts, so the data distribution gradually follows the current policy.
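Two steps of this loop are simple enough to sketch directly: perturbing a seed task's parameters and trimming a rollout to its longest judge-approved prefix. In this sketch the judge's per-step verdicts are stubbed as booleans. The helper names and the `min_steps` filter are hypothetical, and the real pipeline's judging and filtering criteria are not described in this detail.

```python
import random
from dataclasses import dataclass


@dataclass
class Step:
    action: str
    judged_correct: bool  # verdict from a judge model (stubbed here)


def perturb_task(template: str, slots: dict[str, list[str]], rng: random.Random) -> str:
    """Instantiate a seed task with new parameter values (dates, limits, filters...)."""
    return template.format(**{name: rng.choice(values) for name, values in slots.items()})


def longest_correct_prefix(trajectory: list[Step]) -> list[Step]:
    """Keep steps up to (not including) the first step the judge rejects."""
    prefix = []
    for step in trajectory:
        if not step.judged_correct:
            break
        prefix.append(step)
    return prefix


def next_round_data(human_traces: list[list[Step]], model_rollouts: list[list[Step]],
                    min_steps: int = 2) -> list[list[Step]]:
    """Union of fresh human traces and the judged-correct prefixes of model rollouts."""
    kept = [longest_correct_prefix(t) for t in model_rollouts]
    return human_traces + [p for p in kept if len(p) >= min_steps]


rng = random.Random(0)
task = perturb_task("Show orders from {date} under {limit} dollars",
                    {"date": ["last week", "March"], "limit": ["20", "50"]}, rng)
rollout = [Step("open_app", True), Step("tap_orders", True),
           Step("tap_wrong_tab", False), Step("scroll", True)]
print([s.action for s in longest_correct_prefix(rollout)])  # ['open_app', 'tap_orders']
```

Keeping the correct prefix instead of discarding the whole failed rollout preserves useful early steps, which is why the data can track the current policy without waiting for fully successful episodes.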

MAI-UI is evaluated on MobileWorld, a benchmark from the same team that includes 201 tasks across 20 applications. MobileWorld explicitly mixes three categories: pure GUI tasks, agent-user interaction tasks that require natural language back-and-forth with the user, and MCP-augmented tasks that require tool calls.

On MobileWorld, MAI-UI reaches 41.7% overall success, a gain of about 20.8 points over the strongest end-to-end GUI baselines, and is competitive with agentic frameworks that use larger proprietary planners such as Gemini 3 Pro.

Online RL in containerized Android environments

Static data is not enough for robustness in dynamic mobile apps. MAI-UI therefore uses an online RL framework in which the agent interacts directly with containerized Android Virtual Devices (AVDs). The environment stack packs rooted AVD images and backend services into Docker containers, exposes standard reset and step operations over a service layer, and supports more than 35 self-hosted apps from e-commerce, social, productivity and enterprise categories.
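The reset/step contract is what lets hundreds of containers be driven uniformly by the RL trainer. Below is a minimal, self-contained sketch of that contract and a rollout loop with a step budget. `GUIEnv`, `FakeAndroidEnv` and `run_episode` are hypothetical names, and the fake environment only stands in for a real client that would talk to the container's service layer.

```python
from dataclasses import dataclass, field
from typing import Callable, Protocol


@dataclass
class Observation:
    screenshot_png: bytes
    step_index: int


@dataclass
class StepResult:
    observation: Observation
    done: bool
    info: dict = field(default_factory=dict)


class GUIEnv(Protocol):
    """Minimal reset/step contract a containerized Android service could expose."""
    def reset(self, task_id: str) -> Observation: ...
    def step(self, action: dict) -> StepResult: ...


class FakeAndroidEnv:
    """In-memory stand-in; a real client would call the container's service layer."""

    def __init__(self) -> None:
        self.t = 0

    def reset(self, task_id: str) -> Observation:
        self.task_id, self.t = task_id, 0
        return Observation(b"", 0)

    def step(self, action: dict) -> StepResult:
        self.t += 1
        done = action.get("action") == "answer"  # episode ends when the agent answers
        return StepResult(Observation(b"", self.t), done)


def run_episode(env: GUIEnv, policy: Callable[[Observation], dict],
                task_id: str, step_budget: int = 50) -> list[tuple[Observation, dict]]:
    """Roll out one trajectory, stopping on completion or when the step budget runs out."""
    obs = env.reset(task_id)
    trajectory = []
    for _ in range(step_budget):
        action = policy(obs)
        trajectory.append((obs, action))
        result = env.step(action)
        obs = result.observation
        if result.done:
            break
    return trajectory


def stubborn(obs: Observation) -> dict:
    return {"action": "click", "x": 0, "y": 0}


def finisher(obs: Observation) -> dict:
    if obs.step_index >= 3:
        return {"action": "answer", "text": "done"}
    return {"action": "click", "x": 0, "y": 0}


env = FakeAndroidEnv()
print(len(run_episode(env, stubborn, "demo", step_budget=15)))  # 15
print(len(run_episode(env, finisher, "demo")))                  # 4
```

The `step_budget` argument corresponds to the "allowed environment steps" knob whose scaling from 15 to 50 is discussed below.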

The RL setup uses an asynchronous on-policy method, GRPO, implemented on top of verl. It combines tensor, pipeline and context parallelism, similar to Megatron-style training, so that the model can learn from trajectories with up to 50 steps and very long token sequences. Rewards come from rule-based verifiers or model judges that detect task completion, together with penalties for obvious looping behaviors. Only recent successful trajectories are stored in task-specific buffers to stabilize learning.
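The core of GRPO is a group-relative advantage: several rollouts of the same task are scored, and each reward is normalized by the group's mean and standard deviation, so no learned value function is needed. The single-process sketch below shows that step together with a simple loop penalty and a per-task buffer of recent successes. It is not verl's API. The loop rule (same action three times in a row), the 0.5 penalty and the buffer capacity are hypothetical, and the distributed, asynchronous machinery is omitted entirely.

```python
import statistics
from collections import defaultdict, deque


def loop_penalty(actions: list[str], window: int = 3, penalty: float = 0.5) -> float:
    """Penalize a trajectory if the same action repeats `window` times in a row."""
    run = 1
    for prev, cur in zip(actions, actions[1:]):
        run = run + 1 if cur == prev else 1
        if run >= window:
            return penalty
    return 0.0


def shaped_reward(success: bool, actions: list[str]) -> float:
    """Task-completion reward (from a verifier or judge) minus the loop penalty."""
    return (1.0 if success else 0.0) - loop_penalty(actions)


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantage: normalize each reward within its rollout group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


class SuccessBuffer:
    """Keeps only the most recent successful trajectories for each task."""

    def __init__(self, capacity: int = 4) -> None:
        self._buffers = defaultdict(lambda: deque(maxlen=capacity))

    def add(self, task_id: str, trajectory: list[str], success: bool) -> None:
        if success:
            self._buffers[task_id].append(trajectory)

    def get(self, task_id: str) -> list[list[str]]:
        return list(self._buffers[task_id])


# Four rollouts of one task form a group.
rewards = [
    shaped_reward(True, ["tap", "type", "send"]),
    shaped_reward(True, ["tap", "tap", "tap", "send"]),   # succeeded but looped
    shaped_reward(False, ["tap", "back"]),
    shaped_reward(False, ["swipe", "swipe", "swipe"]),    # failed and looped
]
print(rewards)  # [1.0, 0.5, 0.0, -0.5]
print(group_relative_advantages(rewards))
```

When every rollout in a group earns the same reward, all advantages are zero and the group contributes no gradient. Mixing in buffered successes for hard tasks is one way to keep such groups informative.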

Scaling this RL environment matters in practice. The research team shows that increasing the number of parallel GUI environments from 32 to 512 yields an improvement of about 5.2 percentage points in navigation success, and increasing the allowed environment steps from 15 to 50 adds about 4.3 points.

On the AndroidWorld benchmark, which evaluates online navigation in a standard Android app suite, the largest MAI-UI variant reaches 76.7% success, surpassing UI-Tars-2, Gemini 2.5 Pro and Seed1.8.

Key Takeaways

- Unified GUI agent family for mobile: MAI-UI is a Qwen3-VL based family of GUI agents from 2B to 235B-A22B, designed specifically for real-world mobile deployment with native agent-user interaction, MCP tool calls and device-cloud routing, rather than only for static benchmarks.

- State-of-the-art GUI grounding and navigation: The models reach 73.5% on ScreenSpot-Pro, 91.3% on MMBench GUI L2, 70.9% on OSWorld-G and 49.2% on UI-Vision, and set a new 76.7% SOTA on AndroidWorld mobile navigation, surpassing UI-Tars-2, Gemini 2.5 Pro and Seed1.8.

- Realistic MobileWorld performance with interaction and tools: On the MobileWorld benchmark with 201 tasks across 20 apps, MAI-UI-235B-A22B reaches 41.7% overall success, with 39.7% on pure GUI tasks, 51.1% on agent-user interaction tasks and 37.5% on MCP-augmented tasks, beating the best end-to-end GUI baseline, Doubao-1.5-UI-TARS, at 20.9%.

- Scalable online RL in containerized Android: MAI-UI uses an online GRPO-based RL framework over containerized Android environments, where scaling from 32 to 512 parallel environments adds about +5.2 points in navigation success and increasing the environment step budget from 15 to 50 adds another +4.3 points.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.