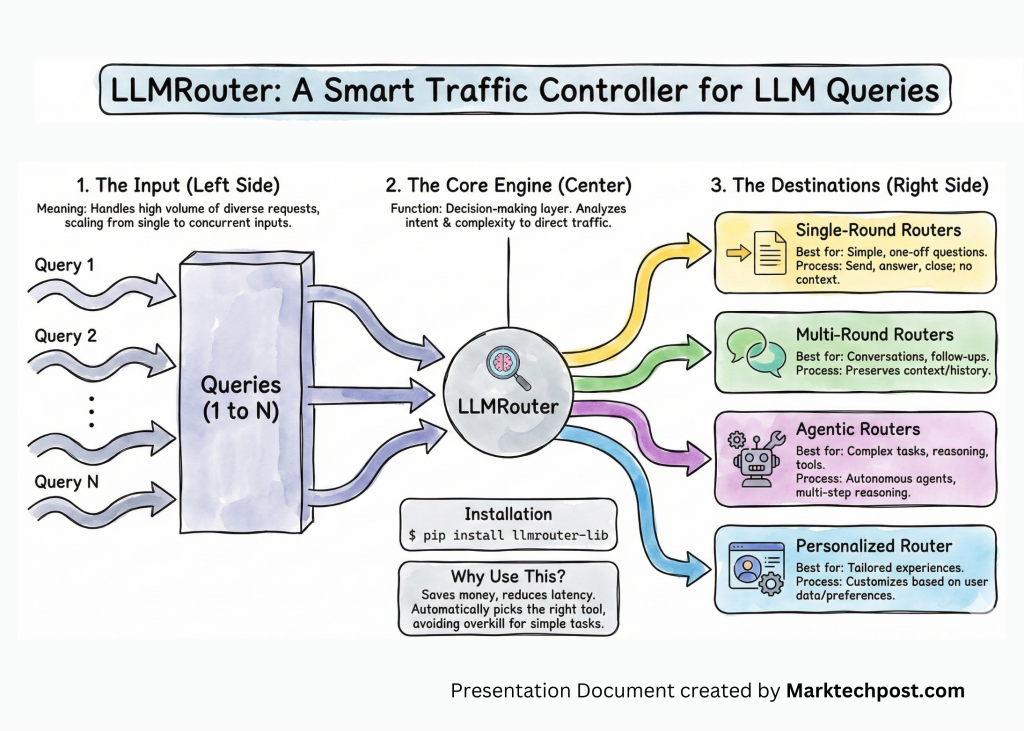

LLMRouter is an open-source routing library from the U Lab at the University of Illinois Urbana-Champaign that treats model selection as a first-class systems problem. It sits between applications and a pool of LLMs and chooses a model for each query based on task complexity, quality targets, and cost, all exposed through a unified Python API and CLI. The project ships with more than 16 routing models, a data generation pipeline over 11 benchmarks, and a plugin system for custom routers.

Router families and supported models

LLMRouter organizes routing algorithms into four families: Single-Round Routers, Multi-Round Routers, Personalized Routers, and Agentic Routers. Single-round routers include knnrouter, svmrouter, mlprouter, mfrouter, elorouter, routerdc, automix, hybrid_llm, graphrouter, causallm_router, and the baselines smallest_llm and largest_llm. These models implement strategies such as k-nearest neighbors, support vector machines, multilayer perceptrons, matrix factorization, Elo rating, dual contrastive learning, automatic model mixing, and graph-based routing.
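The k-nearest-neighbor strategy is the easiest of these to picture. The sketch below is a minimal, stdlib-only illustration in the spirit of knnrouter. It is not LLMRouter's implementation or API. The function names, the two-dimensional toy embeddings, and the per-model scores are all hypothetical. For a new query, the sketch finds the k most similar training queries by cosine similarity and returns the model with the highest mean score on those neighbors.

```python
import math
from collections import defaultdict


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def knn_route(query_emb, train, k=3):
    """Return the model with the best mean score over the k nearest training queries.

    train is a list of (embedding, {model_name: score}) pairs; this format is
    a hypothetical choice for the sketch, not LLMRouter's data layout.
    """
    neighbors = sorted(train, key=lambda item: cosine(query_emb, item[0]), reverse=True)[:k]
    totals, counts = defaultdict(float), defaultdict(int)
    for _, scores in neighbors:
        for model, score in scores.items():
            totals[model] += score
            counts[model] += 1
    return max(totals, key=lambda m: totals[m] / counts[m])


# Toy data: "hard" queries where only the large model succeeds,
# and "easy" queries where the small model is good enough.
train = [
    ([1.0, 0.0], {"small-llm": 0.2, "large-llm": 0.9}),
    ([0.9, 0.1], {"small-llm": 0.3, "large-llm": 0.8}),
    ([0.0, 1.0], {"small-llm": 0.9, "large-llm": 0.9}),
    ([0.1, 0.9], {"small-llm": 0.8, "large-llm": 0.7}),
]
print(knn_route([0.95, 0.05], train, k=2))  # large-llm
print(knn_route([0.05, 0.95], train, k=2))  # small-llm
```

This version ignores price. A cost-aware variant could subtract a weighted per-model cost from each mean score before taking the maximum.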

Multi-round routing is exposed through router_r1, a pre-trained instance of Router-R1 integrated into LLMRouter. Router-R1 formulates multi-LLM routing and aggregation as a sequential decision process in which the router itself is an LLM that alternates between internal reasoning steps and external model calls. It is trained with reinforcement learning using a rule-based reward that balances format, outcome, and cost. In LLMRouter, router_r1 is available as an extra install target with pinned dependencies tested on vllm==0.6.3 and torch==2.4.0.
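Here is a hedged sketch of a rule-based reward over format, outcome, and cost. The following are assumptions for illustration, not Router-R1's published constants or LLMRouter code:

- the tag names (<think>, <route>, <answer>)
- the -1.0 format penalty
- the exact-match outcome check
- the cost weight alpha

```python
import re
import string


def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace before comparing answers."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    return " ".join(text.split())


def rule_based_reward(trajectory: str, gold: str, cost: float, max_cost: float,
                      alpha: float = 0.2) -> float:
    """Hypothetical reward combining format, outcome, and cost terms.

    - format: the trajectory must contain exactly one <answer>...</answer> block;
      otherwise a fixed penalty is returned and nothing else is scored.
    - outcome: 1.0 if the normalized answer exactly matches the gold answer.
    - cost: penalty proportional to the cost of routed calls, capped at 1.0.
    """
    answers = re.findall(r"<answer>(.*?)</answer>", trajectory, flags=re.DOTALL)
    if len(answers) != 1:
        return -1.0  # format violation
    outcome = 1.0 if normalize(answers[0]) == normalize(gold) else 0.0
    cost_penalty = min(cost / max_cost, 1.0) if max_cost > 0 else 0.0
    return outcome - alpha * cost_penalty


trajectory = (
    "<think>This needs a factual lookup.</think>"
    "<route>large-llm: capital of France</route>"
    "<answer>Paris</answer>"
)
print(round(rule_based_reward(trajectory, "paris", cost=0.5, max_cost=2.0), 4))  # 0.95
```

With alpha = 0.2, a correct answer whose routed calls cost 0.5 against a max_cost of 2.0 scores 1.0 - 0.2 * 0.25 = 0.95. A cheaper trajectory that is equally correct therefore earns a higher reward.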

Personalized routing is handled by gmtrouter, described as a graph-based personalized router with user preference learning. GMTRouter represents multi-turn user-LLM interactions as a heterogeneous graph over users, queries, responses, and models. It runs a message-passing architecture over this graph to infer user-specific routing preferences from few-shot interaction data, and experiments show accuracy and AUC gains over non-personalized baselines.
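The sketch below shows how preference signal can flow through such a graph. GMTRouter itself learns typed message functions, so this is an assumption-laden illustration rather than its architecture. The sketch uses parameter-free mean aggregation, and it encodes node types only as string prefixes. The one-hot model features and the "preferred response" edges are hypothetical.

```python
from collections import defaultdict


def message_pass(features, edges, rounds=2, self_weight=0.5):
    """Parameter-free message passing on an undirected graph.

    Node ids carry their type as a prefix ("user:", "query:", "response:",
    "model:") to mimic a heterogeneous graph. Each round, every node's vector
    becomes a mix of its previous value and the mean of its neighbors' values.
    """
    neighbors = defaultdict(list)
    for a, b in edges:
        neighbors[a].append(b)
        neighbors[b].append(a)
    h = {node: list(vec) for node, vec in features.items()}
    for _ in range(rounds):
        new_h = {}
        for node, vec in h.items():
            nbrs = neighbors[node]
            if not nbrs:
                new_h[node] = vec
                continue
            mean = [sum(h[m][i] for m in nbrs) / len(nbrs) for i in range(len(vec))]
            new_h[node] = [self_weight * x + (1 - self_weight) * y for x, y in zip(vec, mean)]
        h = new_h
    return h


def preferred_model(h, user, models):
    """Score each model by dot product with the user's embedding and return the best."""
    return max(models, key=lambda m: sum(x * y for x, y in zip(h[user], h[m])))


# Hypothetical interactions: alice preferred a response from model A, bob one from model B.
features = {
    "user:alice": [0.0, 0.0], "user:bob": [0.0, 0.0],
    "query:q1": [0.0, 0.0], "query:q2": [0.0, 0.0],
    "response:r1": [0.0, 0.0], "response:r2": [0.0, 0.0],
    "model:A": [1.0, 0.0], "model:B": [0.0, 1.0],
}
edges = [
    ("user:alice", "query:q1"), ("query:q1", "response:r1"),
    ("response:r1", "model:A"), ("user:alice", "response:r1"),
    ("user:bob", "query:q2"), ("query:q2", "response:r2"),
    ("response:r2", "model:B"), ("user:bob", "response:r2"),
]
h = message_pass(features, edges, rounds=2)
print(preferred_model(h, "user:alice", ["model:A", "model:B"]))  # model:A
print(preferred_model(h, "user:bob", ["model:A", "model:B"]))    # model:B
```

The users start with empty features. After two rounds, each user's embedding has absorbed signal from the model behind their preferred response, which is the intuition behind learning preferences from only a few interactions.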

Agentic routers in LLMRouter extend routing to multi-step reasoning workflows. knnmultiroundrouter uses k-nearest-neighbor reasoning over multi-turn traces and is intended for complex tasks. llmmultiroundrouter exposes an LLM-based agentic router that performs multi-step routing without its own training loop. These agentic routers share the same configuration and data formats as the other router families and can be swapped through a single CLI flag.
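The shape of a multi-step routing workflow can be sketched as a loop that splits a task, routes each step, and aggregates the results. Everything here is hypothetical: the function names, the naive " and " decomposition, and the stub models. It only illustrates why a per-step router can be swapped without touching the loop.

```python
from typing import Callable, Dict, List


def multi_round_answer(
    question: str,
    decompose: Callable[[str], List[str]],
    route: Callable[[str], str],
    call_model: Callable[[str, str], str],
    aggregate: Callable[[str, List[str]], str],
) -> Dict[str, object]:
    """Hypothetical agentic loop: split a task, route each step, then aggregate.

    All behavior is injected, so the same loop works with a kNN-style router,
    an LLM-based router, or a trivial baseline.
    """
    trace = []
    for sub_q in decompose(question):
        model = route(sub_q)               # per-step routing decision
        answer = call_model(model, sub_q)  # external model call (stubbed below)
        trace.append({"sub_query": sub_q, "model": model, "answer": answer})
    final = aggregate(question, [step["answer"] for step in trace])
    return {"answer": final, "trace": trace}


def split_on_and(question: str) -> List[str]:
    """Naive decomposition used only for this example."""
    return [part.strip() + "?" for part in question.rstrip("?").split(" and ")]


def digit_router(sub_q: str) -> str:
    """Toy router: anything containing a digit goes to a math-specialized model."""
    return "math-llm" if any(ch.isdigit() for ch in sub_q) else "general-llm"


TOY_ANSWERS = {"What is 2+2?": "4", "who wrote Hamlet?": "Shakespeare"}


def stub_model(model: str, sub_q: str) -> str:
    """Stand-in for a real API call; returns canned answers."""
    return TOY_ANSWERS[sub_q]


def join_answers(question: str, parts: List[str]) -> str:
    return "; ".join(parts)


result = multi_round_answer("What is 2+2 and who wrote Hamlet?",
                            split_on_and, digit_router, stub_model, join_answers)
print(result["answer"])                          # 4; Shakespeare
print([step["model"] for step in result["trace"]])  # ['math-llm', 'general-llm']
```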

Data generation pipeline for routing datasets

LLMRouter ships with a full data generation pipeline that turns standard benchmarks and LLM outputs into routing datasets. The pipeline supports 11 benchmarks: Natural QA, Trivia QA, MMLU, GPQA, MBPP, HumanEval, GSM8K, CommonsenseQA, MATH, OpenBookQA, and ARC-Challenge. It runs in three explicit stages. First, data_generation.py extracts queries and ground-truth labels and creates train and test JSONL splits. Second, generate_llm_embeddings.py builds embeddings for candidate LLMs from metadata. Third, api_calling_evaluation.py calls LLM APIs, evaluates responses, and fuses scores with embeddings into routing records. (GitHub)
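The first stage, producing train and test JSONL splits, can be illustrated with a few lines of standard-library Python. This is a sketch under assumptions and not the code in data_generation.py. The helper name write_splits, the train.jsonl/test.jsonl file names, the 20% test ratio, and the fixed seed are all hypothetical.

```python
import json
import random
import tempfile
from pathlib import Path


def write_splits(examples, out_dir, test_ratio=0.2, seed=42):
    """Shuffle examples reproducibly and write train.jsonl / test.jsonl (hypothetical names)."""
    rng = random.Random(seed)
    rows = list(examples)
    rng.shuffle(rows)
    n_test = int(len(rows) * test_ratio)
    splits = {"test": rows[:n_test], "train": rows[n_test:]}
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    for name, split_rows in splits.items():
        with open(out / f"{name}.jsonl", "w", encoding="utf-8") as f:
            for row in split_rows:
                f.write(json.dumps(row) + "\n")  # one JSON object per line
    return {name: len(split_rows) for name, split_rows in splits.items()}


# Made-up benchmark rows with a query and its ground-truth label.
examples = [{"task_name": "gsm8k", "query": f"Question {i}", "ground_truth": str(i)}
            for i in range(10)]
with tempfile.TemporaryDirectory() as tmp:
    counts = write_splits(examples, tmp)
    train_rows = [json.loads(line)
                  for line in (Path(tmp) / "train.jsonl").read_text(encoding="utf-8").splitlines()]
print(counts)  # {'test': 2, 'train': 8}
```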

The pipeline outputs query files, LLM embedding JSON, query embedding tensors, and routing data JSONL files. A routing entry includes fields such as task_name, query, ground_truth, metric, model_name, response, performance, embedding_id, and token_num. Configuration is handled entirely through YAML, so engineers point the scripts at new datasets and candidate model lists without modifying code.
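To show how such routing entries can become supervision for a router, the sketch below reads JSONL entries with the field names listed above. For each query, it picks the best model, breaking ties in favor of fewer tokens. Two things are assumptions: that entries for the same query share an embedding_id, and the specific tie-breaking rule. The example values are made up.

```python
import json
from collections import defaultdict

# Two made-up routing entries for one query, using the field names listed above.
ROUTING_JSONL = "\n".join(json.dumps(r) for r in [
    {"task_name": "gsm8k", "query": "What is 12 * 7?", "ground_truth": "84",
     "metric": "em", "model_name": "small-llm", "response": "82",
     "performance": 0.0, "embedding_id": 0, "token_num": 35},
    {"task_name": "gsm8k", "query": "What is 12 * 7?", "ground_truth": "84",
     "metric": "em", "model_name": "large-llm", "response": "84",
     "performance": 1.0, "embedding_id": 0, "token_num": 41},
])


def best_model_per_query(lines):
    """Group entries by embedding_id; keep the highest-performance model, then fewest tokens."""
    by_query = defaultdict(list)
    for line in lines:
        if line.strip():
            entry = json.loads(line)
            by_query[entry["embedding_id"]].append(entry)
    return {
        qid: min(entries, key=lambda e: (-e["performance"], e["token_num"]))["model_name"]
        for qid, entries in by_query.items()
    }


print(best_model_per_query(ROUTING_JSONL.splitlines()))  # {0: 'large-llm'}
```

Labels like these are one simple way to train a classifier-style router. Keeping the raw performance and token_num values instead lets a router trade quality against cost at inference time.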

Chat interface and plugin system

For interactive use, llmrouter chat launches a Gradio-based chat frontend over any router and configuration. The server can bind to a custom host and port and can expose a public sharing link. Query modes control how the router sees context:

- current_only uses only the latest user message.
- full_context concatenates the dialogue history.
- retrieval augments the query with the top-k most similar historical queries.

The UI visualizes model choices in real time and is driven by the same router configuration used for batch inference.
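The three query modes can be sketched as a function that builds the text the router sees. This is an illustration under stated assumptions, not LLMRouter's code:

- The history contains user messages only.
- Retrieval ranks past queries by Jaccard word overlap, a simple stand-in for the embedding similarity a real system would likely use.
- The function and parameter names are hypothetical.

```python
def jaccard(a: str, b: str) -> float:
    """Word-set overlap between two strings (stand-in for embedding similarity)."""
    sa, sb = set(a.lower().split()), set(b.lower().split())
    union = sa | sb
    return len(sa & sb) / len(union) if union else 0.0


def build_routing_query(user_messages, mode="current_only", top_k=2):
    """Build the router input from oldest-first user messages; the last one is the new query."""
    *history, current = user_messages
    if mode == "current_only":
        return current
    if mode == "full_context":
        return "\n".join(user_messages)
    if mode == "retrieval":
        ranked = sorted(history, key=lambda past: jaccard(past, current), reverse=True)
        return "\n".join(ranked[:top_k] + [current])
    raise ValueError(f"unknown mode: {mode}")


msgs = [
    "How do I sort a list in Python?",
    "What is the capital of Peru?",
    "How do I sort a dict by value in Python?",
]
print(build_routing_query(msgs, "retrieval", top_k=1))
# How do I sort a list in Python?
# How do I sort a dict by value in Python?
```

The trade-off is context versus noise. current_only is cheapest but loses follow-up context. full_context keeps everything, including unrelated turns. retrieval keeps only the past queries that look relevant.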

LLMRouter also provides a plugin system for custom routers. New routers live under custom_routers, subclass MetaRouter, and implement route_single and route_batch. Configuration files under that directory define data paths, hyperparameters, and optional default API endpoints. Plugin discovery scans the project's custom_routers folder, a ~/.llmrouter/plugins directory, and any extra paths in the LLMROUTER_PLUGINS environment variable. Example custom routers include randomrouter, which selects a model at random, and thresholdrouter, a trainable router that estimates query difficulty.
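The plugin contract can be sketched as follows. Several parts are assumptions or stand-ins:

- MetaRouter is a local stand-in so the example runs on its own. The real base class's import path, constructor, and method signatures may differ.
- RandomRouter mirrors the randomrouter idea.
- LengthThresholdRouter is a hypothetical, untrained stand-in for thresholdrouter that uses word count as a crude difficulty proxy.
- plugin_search_paths lists the three locations named above. Splitting LLMROUTER_PLUGINS on os.pathsep is an assumption.

```python
import os
import random
from abc import ABC, abstractmethod
from pathlib import Path
from typing import List


class MetaRouter(ABC):
    """Local stand-in for LLMRouter's MetaRouter; the real signature may differ."""

    def __init__(self, models: List[str]):
        self.models = models

    @abstractmethod
    def route_single(self, query: str) -> str: ...

    @abstractmethod
    def route_batch(self, queries: List[str]) -> List[str]: ...


class RandomRouter(MetaRouter):
    """Picks a candidate model uniformly at random (seeded for reproducibility)."""

    def __init__(self, models: List[str], seed: int = 0):
        super().__init__(models)
        self.rng = random.Random(seed)

    def route_single(self, query: str) -> str:
        return self.rng.choice(self.models)

    def route_batch(self, queries: List[str]) -> List[str]:
        return [self.route_single(q) for q in queries]


class LengthThresholdRouter(MetaRouter):
    """Hypothetical difficulty router: queries longer than `threshold` words go to the strong model."""

    def __init__(self, models: List[str], threshold: int = 12):
        super().__init__(models)
        self.cheap, self.strong = models[0], models[-1]
        self.threshold = threshold

    def route_single(self, query: str) -> str:
        return self.strong if len(query.split()) > self.threshold else self.cheap

    def route_batch(self, queries: List[str]) -> List[str]:
        return [self.route_single(q) for q in queries]


def plugin_search_paths(project_root: str = ".") -> List[Path]:
    """Candidate plugin directories: project folder, user folder, then LLMROUTER_PLUGINS entries."""
    paths = [Path(project_root) / "custom_routers", Path.home() / ".llmrouter" / "plugins"]
    extra = os.environ.get("LLMROUTER_PLUGINS", "")
    paths += [Path(p) for p in extra.split(os.pathsep) if p]
    return paths


router = LengthThresholdRouter(["small-llm", "large-llm"], threshold=3)
print(router.route_batch(["hi there", "please prove this theorem step by step"]))
# ['small-llm', 'large-llm']
```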

Key Takeaways

- Routing as a first-class abstraction: LLMRouter is an open-source routing layer from UIUC that sits between applications and heterogeneous LLM pools. It centralizes model selection as a cost- and quality-aware prediction task rather than leaving it to ad hoc scripts.

- Four router families covering 16-plus algorithms: The library standardizes more than 16 routers into four families (single-round, multi-round, personalized, and agentic), including knnrouter, graphrouter, routerdc, router_r1, and gmtrouter, all exposed through a unified config and CLI.

- Multi-round RL routing via Router-R1: router_r1 integrates the Router-R1 framework, in which an LLM router interleaves internal “think” steps with external “route” calls. It is trained with a rule-based reward that combines format, outcome, and cost to optimize performance-cost trade-offs.

- Graph-based personalization with GMTRouter: gmtrouter models users, queries, responses, and LLMs as nodes in a heterogeneous graph. It uses message passing to learn user-specific routing preferences from few-shot histories, achieving accuracy gains of up to roughly 21% and substantial AUC improvements over strong baselines.

- End-to-end pipeline and extensibility: LLMRouter provides:
  - a benchmark-driven data pipeline
  - a CLI for training and inference
  - a Gradio chat UI
  - centralized API key handling
  - a plugin system based on MetaRouter that lets teams register custom routers while reusing the same routing datasets and infrastructure

Check out the GitHub Repo and Technical details.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.