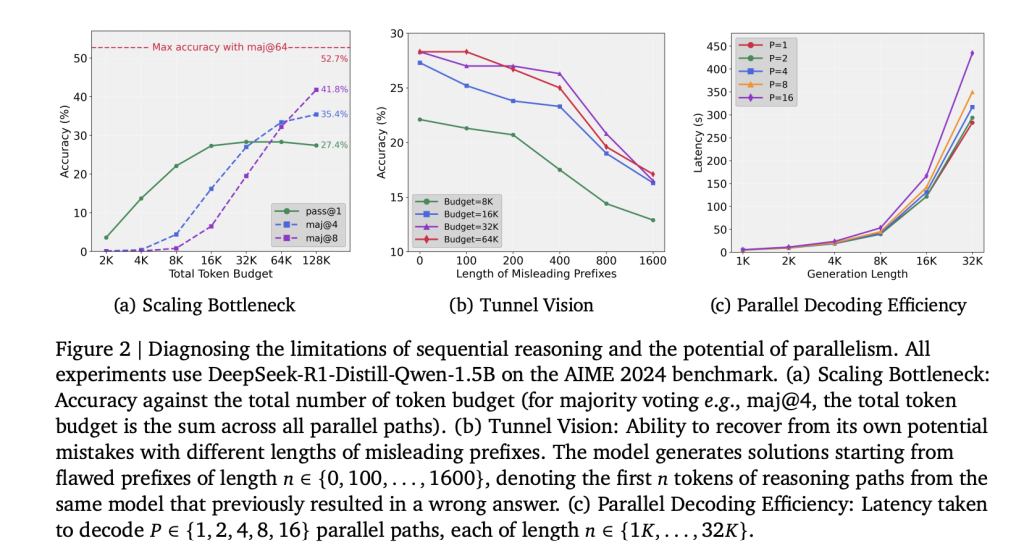

Why Do Sequential LLMs Hit a Bottleneck?

Test-time compute scaling in LLMs has traditionally relied on extending a single reasoning path. While this approach improves reasoning up to a point, performance plateaus quickly. Experiments on DeepSeek-R1-distill-Qwen-1.5B show that increasing the token budget beyond 32K (up to 128K) yields negligible accuracy gains. The bottleneck arises from early token commitment: initial errors propagate through the entire chain-of-thought. This effect, referred to as Tunnel Vision, indicates that the scaling issue is methodological rather than a fundamental limit of model capacity.

What Is Tunnel Vision and How Is It Diagnosed?

Researchers quantified recovery ability by forcing models to continue from erroneous prefixes of varying lengths (100–1600 tokens). Accuracy declined monotonically as prefix length increased, demonstrating that once a model has committed to a flawed trajectory, it cannot recover, even when given additional compute budget. This confirms that sequential scaling allocates compute inefficiently.
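The prefix-recovery protocol can be sketched as a small evaluation loop. This is a minimal sketch, not the authors' harness: `continue_fn` is a hypothetical stand-in for the model (it receives the question plus a flawed prefix and returns a final answer), each problem record is assumed to carry a known-flawed trace and a gold answer, and the specific grid of prefix lengths between 100 and 1600 tokens is an illustrative choice.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical model interface: (question, flawed prefix tokens) -> final answer.
ContinueFn = Callable[[str, List[str]], str]


def prefix_recovery_accuracy(
    problems: Sequence[dict],
    continue_fn: ContinueFn,
    prefix_lengths: Sequence[int] = (100, 200, 400, 800, 1600),  # illustrative grid
) -> Dict[int, float]:
    """Accuracy after forcing the model to continue from flawed prefixes.

    Each problem dict is assumed to hold "question", "flawed_trace" (tokens
    from a reasoning trace known to be wrong) and "gold" (the correct answer).
    """
    results: Dict[int, float] = {}
    for length in prefix_lengths:
        correct = 0
        for p in problems:
            prefix = p["flawed_trace"][:length]  # commit the model to the bad start
            answer = continue_fn(p["question"], prefix)
            correct += int(answer == p["gold"])
        results[length] = correct / len(problems)
    return results


# Toy model for illustration: it recovers only when the flawed prefix is short.
def toy_model(question: str, prefix: List[str]) -> str:
    return "42" if len(prefix) < 400 else "wrong"


problems = [{"question": "q", "flawed_trace": ["tok"] * 2000, "gold": "42"}]
print(prefix_recovery_accuracy(problems, toy_model))
# {100: 1.0, 200: 1.0, 400: 0.0, 800: 0.0, 1600: 0.0}
```

With a real model plugged in, a monotonic drop across the grid is the pattern the article describes as Tunnel Vision.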

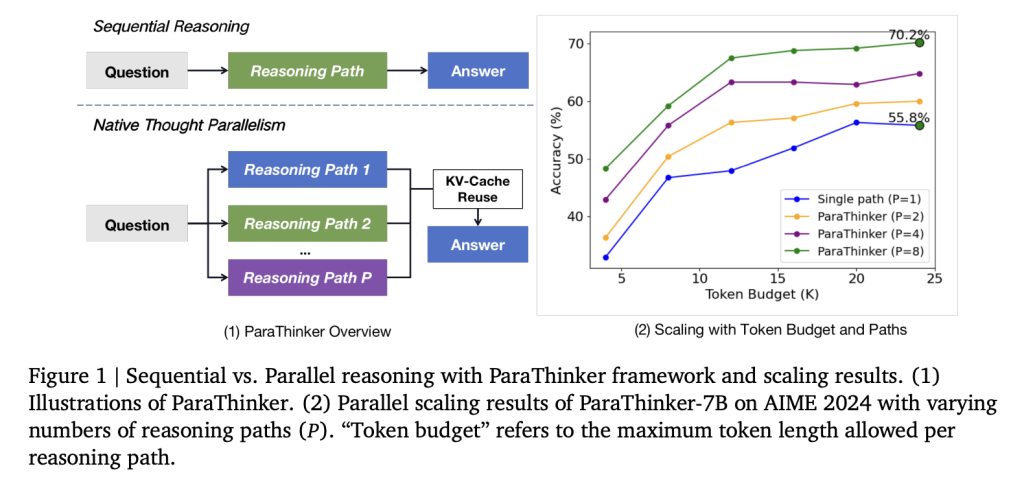

How Does ParaThinker Introduce Parallel Thinking?

A team of researchers from Tsinghua University introduces ParaThinker, an end-to-end framework that trains an LLM to generate multiple, diverse reasoning paths in parallel and synthesize them into a superior final answer. The authors call this native thought parallelism.
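At a high level, inference runs in two stages: several reasoning paths, each opened by its own control token, followed by one summary that conditions on all of them. The sketch below assumes hypothetical `generate` and `summarize` callables in place of the model; the closing tags (`</think i>`) and the exact string layout are assumptions for illustration. A real implementation decodes the paths as one batch rather than in a Python loop.

```python
from typing import Callable, List


def parallel_think_then_summarize(
    question: str,
    num_paths: int,
    generate: Callable[[str], str],   # hypothetical: prompt -> one reasoning path
    summarize: Callable[[str], str],  # hypothetical: full context -> final answer
) -> str:
    # Stage 1: each path is opened with its own control token <think i>.
    # (Decoded as a batch in practice; a loop keeps the sketch simple.)
    paths: List[str] = [
        generate(f"{question}<think {i}>") for i in range(1, num_paths + 1)
    ]

    # Stage 2: the summary conditions on the question plus every path.
    # The </think i> closing tags are an assumed delimiter format.
    context = question + "".join(
        f"<think {i}>{p}</think {i}>" for i, p in enumerate(paths, start=1)
    )
    return summarize(context + "<summary>")
```

The key design point is that the merge happens inside the model (the summary stage reads every path), not in an external selection step.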

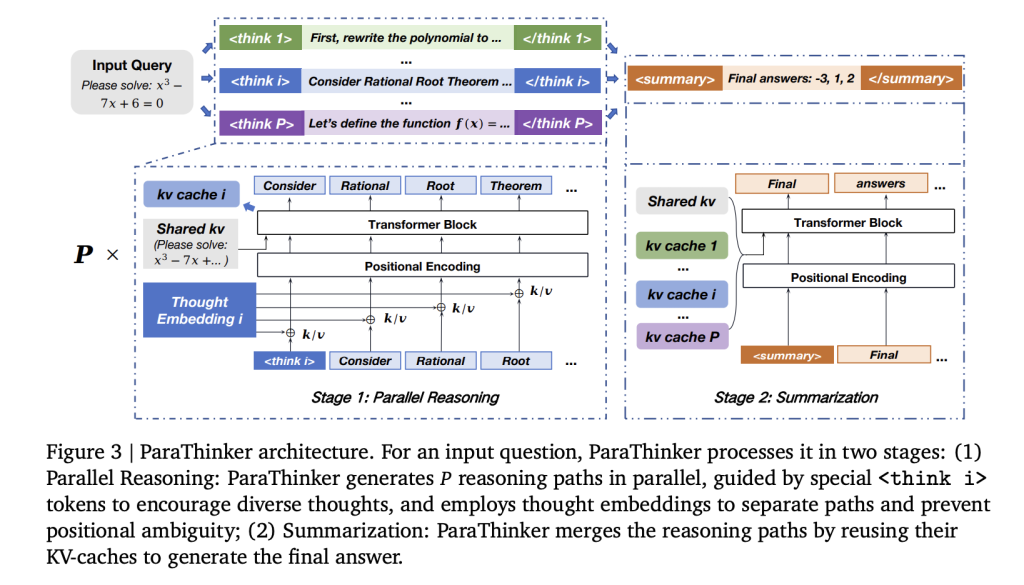

Key architectural components include:

- Specialized control tokens (`<think i>`) to initiate distinct reasoning paths.

- Thought-specific positional embeddings to disambiguate tokens across paths and prevent collapse during summarization.

- Two-phase attention masks enforcing path independence during reasoning and controlled integration during answer generation.
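The two-phase attention mask can be written out explicitly for a toy layout. This sketch assumes the sequence is laid out as [prompt | path 1 | ... | path N | summary] with equal path lengths, which is a simplification; it builds only the boolean mask and does not model the thought-specific positional embeddings. Phase 1 rows (path tokens) see the prompt and their own path; phase 2 rows (summary tokens) see everything before them.

```python
from typing import List


def two_phase_mask(prompt_len: int, num_paths: int, path_len: int,
                   summary_len: int) -> List[List[bool]]:
    """mask[q][k] is True when query position q may attend to key position k."""

    def segment(pos: int) -> int:
        # -1 = prompt, 0..num_paths-1 = reasoning path index, num_paths = summary
        if pos < prompt_len:
            return -1
        offset = pos - prompt_len
        if offset < num_paths * path_len:
            return offset // path_len
        return num_paths

    total = prompt_len + num_paths * path_len + summary_len
    mask = [[False] * total for _ in range(total)]
    for q in range(total):
        seg_q = segment(q)
        for k in range(q + 1):  # causal: keys at or before the query only
            seg_k = segment(k)
            if seg_q in (-1, num_paths):
                # Prompt rows and phase 2 summary rows: ordinary causal attention.
                mask[q][k] = True
            else:
                # Phase 1: a path sees the prompt and its own earlier tokens only.
                mask[q][k] = seg_k in (-1, seg_q)
    return mask


m = two_phase_mask(prompt_len=1, num_paths=2, path_len=2, summary_len=1)
for row in m:
    print("".join("1" if allowed else "." for allowed in row))
# 1.....
# 11....
# 111...
# 1..1..
# 1..11.
# 111111
```

Rows 3 and 4 (the second path) skip columns 1 and 2 (the first path), while the final summary row attends to every position.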

A critical efficiency gain comes from reusing the KV-caches produced during the reasoning stage in the summarization phase, which eliminates redundant re-prefilling.
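The saving from KV-cache reuse can be quantified with toy bookkeeping. In this sketch the caches are plain Python lists standing in for per-token key/value tensors, and the cost model is an assumption for illustration: without reuse, the flattened prompt plus all paths must be prefilled again before the summary can be decoded.

```python
from typing import Dict, List


def merge_caches(prompt_cache: List[str], path_caches: Dict[int, List[str]]) -> List[str]:
    """Reuse: concatenate the caches written during reasoning, in path order.

    Entries are toy stand-ins for key/value tensors; nothing is re-encoded.
    """
    merged = list(prompt_cache)
    for path_id in sorted(path_caches):
        merged.extend(path_caches[path_id])
    return merged


def extra_prefill_tokens(prompt_len: int, path_lens: List[int], reuse_kv: bool) -> int:
    """Tokens that must be pushed through the model again before summarizing."""
    if reuse_kv:
        return 0  # summary decoding attends directly to the merged caches
    return prompt_len + sum(path_lens)  # re-prefill the flattened sequence


print(extra_prefill_tokens(100, [1000] * 16, reuse_kv=False))  # 16100
print(extra_prefill_tokens(100, [1000] * 16, reuse_kv=True))   # 0
```

Under this cost model the re-prefill overhead grows linearly with both the number of paths and their length, whereas reuse adds nothing beyond the cache concatenation.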

How Is ParaThinker Trained for Parallel Reasoning?

Supervised fine-tuning (SFT) was performed on multi-path reasoning datasets. Training data was constructed by sampling multiple solution paths from teacher models (DeepSeek-R1, GPT-OSS-20B). Each example included several `<think i>` trajectories and a final `<summary>` solution. Randomized sampling of the thought tokens ensured the model generalizes to more paths at inference than it saw during training.
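One way to read the training-data recipe is sketched below: teacher paths are wrapped in `<think i>` tags whose indices are drawn at random from a larger pool, so the model encounters path indices beyond the count in any single example. This is an interpretation, not the authors' code; the pool size (`max_think_id`), the closing tags, and the string layout are assumptions.

```python
import random
from typing import List


def build_sft_example(question: str, teacher_paths: List[str], summary: str,
                      max_think_id: int, rng: random.Random) -> str:
    """Assemble one multi-path training string (layout is illustrative).

    Path indices are drawn without replacement from 1..max_think_id, so ids
    larger than len(teacher_paths) still appear during training.
    """
    ids = sorted(rng.sample(range(1, max_think_id + 1), k=len(teacher_paths)))
    body = "".join(
        f"<think {i}>{path}</think {i}>" for i, path in zip(ids, teacher_paths)
    )
    return f"{question}{body}<summary>{summary}</summary>"


rng = random.Random(0)
print(build_sft_example("Q: 2+2?", ["a", "b", "c"], "4", max_think_id=8, rng=rng))
```

Drawing indices from a larger pool is what lets a model trained on, say, four paths still see the control tokens it would need for eight or more at inference.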

Fine-tuning used Qwen-2.5 models (1.5B and 7B parameters) with a maximum context length of 28K tokens. Data sources included Open-R1, DeepMath, s1k, and LIMO, supplemented with additional solutions sampled at temperature 0.8. Training was run on multiple A800 GPUs.
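The reported training setup can be captured in a small config object, together with a check that a multi-path example fits the context window. Field names and model labels are hypothetical, 28K is taken as 28,000 tokens, and the check assumes all paths and the summary are packed into one training sequence, with token counts coming from the model's own tokenizer.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class ParaThinkerSFTConfig:
    # Values as reported in the article; the field names are illustrative.
    base_models: Tuple[str, ...] = ("Qwen-2.5-1.5B", "Qwen-2.5-7B")
    max_context_tokens: int = 28_000  # "28K"; exact value assumed
    extra_sample_temperature: float = 0.8
    data_sources: Tuple[str, ...] = ("Open-R1", "DeepMath", "s1k", "LIMO")


def fits_context(cfg: ParaThinkerSFTConfig, prompt_tokens: int,
                 path_tokens: List[int], summary_tokens: int) -> bool:
    """Assumes prompt, every path, and the summary share one context window."""
    total = prompt_tokens + sum(path_tokens) + summary_tokens
    return total <= cfg.max_context_tokens


cfg = ParaThinkerSFTConfig()
print(fits_context(cfg, 500, [6000] * 4, 1000))  # True  (25,500 tokens)
print(fits_context(cfg, 500, [7000] * 4, 1000))  # False (29,500 tokens)
```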

What Are the Experimental Results?

Evaluation on AIME 2024, AIME 2025, AMC 2023, and MATH-500 yields the following:

- Accuracy:

  - 1.5B ParaThinker achieved +12.3% accuracy over sequential baselines and +4.3% over majority voting.

  - 7B ParaThinker achieved +7.5% accuracy over sequential baselines and +2.0% over majority voting.

  - With 8 reasoning paths, ParaThinker-1.5B reached 63.2% pass@1, exceeding sequential 7B models at equivalent budgets.

- Efficiency:

  - The latency overhead of parallel reasoning was 7.1% on average.

  - Generating 16 paths took less than 2× the latency of generating a single path, due to improved GPU memory utilization.

- Termination strategy: the First-Finish approach, where reasoning ends as soon as the first path terminates, outperformed the Last-Finish and Half-Finish strategies in both accuracy and latency.
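The three termination strategies differ only in when parallel decoding stops. Given the step at which each path would end on its own, the sketch below computes the stopping step. Half-Finish is taken to mean stopping once half of the paths have ended, and paths still running at the cutoff are assumed to be truncated there; both are assumptions about details the article does not spell out.

```python
import math
from typing import List


def stop_step(path_lengths: List[int], strategy: str) -> int:
    """Decoding step at which parallel reasoning stops.

    path_lengths[i] is the step at which path i would end on its own.
    """
    ordered = sorted(path_lengths)
    if strategy == "first-finish":  # stop as soon as any path ends
        return ordered[0]
    if strategy == "half-finish":   # assumed: stop once half the paths have ended
        return ordered[math.ceil(len(ordered) / 2) - 1]
    if strategy == "last-finish":   # wait for every path to end
        return ordered[-1]
    raise ValueError(f"unknown strategy: {strategy}")


def truncated_lengths(path_lengths: List[int], strategy: str) -> List[int]:
    """Assumed behavior: paths still running at the cutoff are cut there."""
    cutoff = stop_step(path_lengths, strategy)
    return [min(n, cutoff) for n in path_lengths]


lengths = [1200, 3000, 800, 5000]
for s in ("first-finish", "half-finish", "last-finish"):
    print(s, stop_step(lengths, s), truncated_lengths(lengths, s))
# first-finish 800 [800, 800, 800, 800]
# half-finish 1200 [1200, 1200, 800, 1200]
# last-finish 5000 [1200, 3000, 800, 5000]
```

Under First-Finish every path is cut to the shortest natural length, so wall-clock time is bounded by the fastest path rather than the slowest.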

What Do Ablation Studies Indicate?

- Dataset-only fine-tuning (without the ParaThinker modifications) failed to improve performance, confirming that the gains derive from the architectural innovations rather than from the training data alone.

- Removing thought embeddings reduced accuracy, while naïve flattened encodings caused severe degradation due to long-range positional decay.

- Re-prefilling baselines degraded as the number of paths increased, validating the computational benefits of KV-cache reuse.
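The positional-encoding ablation is easier to see with concrete position ids. In the sketch below, the flattened layout places paths end to end so positions keep growing with every path, while the alternative restarts each path right after the prompt and relies on a per-path thought embedding (not modeled here) to tell the paths apart. Equal path lengths, and placing the first summary token right after one path length in the restarted layout, are assumptions.

```python
from typing import List


def path_position_ids(prompt_len: int, num_paths: int, path_len: int,
                      flattened: bool) -> List[List[int]]:
    """Position ids for each reasoning path (equal path lengths assumed)."""
    ids = []
    for i in range(num_paths):
        # Flattened: path i starts after paths 0..i-1. Otherwise every path
        # starts right after the prompt and shares the same position range.
        start = prompt_len + (i * path_len if flattened else 0)
        ids.append(list(range(start, start + path_len)))
    return ids


def summary_to_first_path_distance(prompt_len: int, num_paths: int, path_len: int,
                                   flattened: bool) -> int:
    """Relative distance from the first summary token back to path 1's first token."""
    summary_pos = prompt_len + (num_paths * path_len if flattened else path_len)
    return summary_pos - prompt_len


print(summary_to_first_path_distance(100, 16, 4000, flattened=True))   # 64000
print(summary_to_first_path_distance(100, 16, 4000, flattened=False))  # 4000
```

With relative encodings such as RoPE, attention tends to weaken as this distance grows, which is the long-range decay the ablation refers to; in the flattened layout the gap scales with the number of paths.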

How Does ParaThinker Compare to Other Methods?

Conventional parallel strategies such as majority voting, self-consistency, and Tree of Thoughts require external verifiers or post-hoc selection, which limits scalability. Diffusion-based token-parallel methods perform poorly on reasoning tasks because of sequential dependencies. Architectural approaches like PARSCALE demand structural changes and pretraining. In contrast, ParaThinker preserves the Transformer backbone and introduces parallelism at the reasoning stage, integrating multiple KV-caches into a unified summarization step.
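For contrast, the majority-voting baseline fits in a few lines: sample several independent answers, extract the final answer from each, and return the most common one. This sketch assumes answer extraction has already happened (unparseable outputs are passed as `None`) and breaks ties in favor of the answer encountered first.

```python
from collections import Counter
from typing import List, Optional


def majority_vote(answers: List[Optional[str]]) -> Optional[str]:
    """Return the most frequent extracted answer, ignoring None entries.

    Counter.most_common orders equal counts by first occurrence, so ties
    go to the earliest answer.
    """
    counts = Counter(a for a in answers if a is not None)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


print(majority_vote(["12", "7", "12", None]))  # 12
```

Because the vote sees only final answers, it cannot combine partial insights from different paths; ParaThinker's summary stage instead reads the full reasoning traces through their KV-caches.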

Summary

ParaThinker demonstrates that test-time scaling bottlenecks are an artifact of sequential reasoning strategies. By allocating compute across width (parallel trajectories) rather than depth (longer chains), smaller models can outperform significantly larger baselines with minimal latency overhead. This establishes native thought parallelism as a critical dimension for future LLM scaling.
